COJ Electronics & Communications

Method for Identification of Recycled Waste Image Based on Deep Residual Neural Network

Anle Mu*, Xudong Sun and Wenwei Zhang

School of Mechanical and Precision Instrument Engineering, Xi’an University of Technology, China

*Corresponding author: Anle Mu, School of Mechanical and Precision Instrument Engineering, Xi’an University of Technology, China

Submission: September 09, 2023; Published: October 20, 2023

ISSN 2640-9739 Volume 2 Issue 5

Abstract

To address the complexity and diversity of waste types and the low profit of secondary sorting in waste sorting and recycling, this paper proposes an image classification and recognition method for recycled waste based on deep residual neural networks. Starting from the original ResNet50 structure, the convolution kernel size is reduced and the network width is increased, which shortens training time and improves the model's learning ability. Because waste images vary widely in type and shape, the improved ResNet50 network is trained iteratively on the training set, and the images are classified into five categories: glass, paper, cardboard, plastic and metal. The experimental results show that the improved ResNet50 reaches a classification accuracy of 95.19%, higher than the other neural network structures compared, and avoids the network degradation problem caused by deepening the network.

Keywords: Recycling waste classification; Image processing; Improved ResNet50 network; Deep residual neural network

Introduction

To address the environmental pollution and poor recovery of renewable resources caused by incinerating municipal solid waste, China has accelerated the implementation of a garbage classification system since July 2019 and plans to have garbage classification and treatment systems basically established in cities at or above the prefecture level across the country by the end of 2025. Although China pays great attention to the classification and recycling of municipal solid waste, many problems remain, including the complexity of the waste types involved, low profits from secondary sorting, and citizens' lack of garbage classification knowledge. At present, these problems are still handled in China by manual classification, which is time consuming, inefficient and hard to supervise [1]. In other countries, the classification categories are finer and the legal framework is more mature. For example, Japan [2] classifies garbage into combustible garbage, incombustible garbage, bulky garbage, resource garbage, hazardous garbage and other garbage, with strict requirements on how and when each type may be disposed of. With the development of science and technology, intelligent methods are gradually replacing manual classification. Image recognition and processing, long an active research direction for scholars in China and abroad, is one of the important methods for realizing automatic garbage classification.

Some scholars abroad have begun to apply image processing and machine vision to garbage classification. For example, Setiawan et al. [3] adopted the Scale-Invariant Feature Transform (SIFT) algorithm to classify organic and inorganic substances in household waste. By locating feature points, the algorithm maintains relatively high recognition accuracy when the viewing angle, brightness or scale of the sample image changes. However, it can only recognize relatively similar garbage: once the variety of garbage increases, the recognition accuracy drops sharply. In addition, it is difficult to manually extract distinguishing features of garbage with complex shapes using traditional image processing methods, so the recognition rate of traditional image recognition methods has remained low.

With the arrival of the era of artificial intelligence, deep learning [4] has led the third wave of artificial intelligence development. Since deep learning can automatically extract complex features from pictures, research on garbage classification has shifted from traditional image processing to deep learning. For example, Rabano et al. [5] used the MobileNet neural network structure to classify common household waste into six categories. The recognition accuracy in their tests reached 87.2%, which leaves room for improvement. Therefore, for several common kinds of recycled waste, this paper combines deep learning with image recognition to study an image classification and recognition method based on deep residual neural networks, and proposes an improved ResNet50 neural network structure. The network model automatically extracts features of various kinds of waste for learning, which improves the accuracy of classification and recognition of recycled waste.

The mainstream deep neural network structures in wide use today include AlexNet [6], proposed by Krizhevsky, Sutskever and Hinton in 2012; VGGNet [7] and GoogLeNet [8], proposed in 2014 to increase the depth beyond that of AlexNet; ResNet [9], proposed in 2015 to solve the vanishing gradient problem caused by increasing the depth of deep neural networks; and the lightweight networks ShuffleNet [10] and MobileNet [11], proposed later for running AI on embedded devices. This paper adopts an improved ResNet neural network model. Compared with other models, the ResNet structure has more layers (which benefits network performance) and fewer parameters. For recycled waste images with complex and diverse categories, other network structures are prone to overfitting, while ResNet can effectively prevent overfitting thanks to its residual learning units. There are three mainstream ResNet structures: ResNet50, ResNet101, and ResNet152.

The improved ResNet50 network structure proposed in this paper is a 50-layer residual network. The 50-layer structure was selected because it is simpler than the 101-layer and 152-layer structures and iterates faster. According to the results of ResNet on ImageNet, the three structures differ little in Top-1 error (the fraction of samples for which the class with the highest predicted probability is not the correct class) and Top-5 error (the fraction of samples for which the correct class is not among the five classes with the highest predicted probabilities). The training speed of ResNet50 has also improved continuously in recent years. Sony has reported training the ResNet50 model in just 224 seconds [12], which suggests that it may in future be as easy to port to embedded devices as lightweight networks, making the algorithm more practical to apply.
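The Top-1 and Top-5 error metrics mentioned above can be illustrated with a minimal sketch. The function name `top_k_error` and the toy probabilities below are hypothetical and chosen only for illustration; they are not taken from the paper or from ImageNet.

```python
def top_k_error(probabilities, labels, k):
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for probs, label in zip(probabilities, labels):
        # Indices of the k classes with the largest predicted probability.
        top_k = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy predictions over 6 hypothetical classes for 4 samples (illustrative values only).
toy_probs = [
    [0.50, 0.20, 0.10, 0.10, 0.05, 0.05],
    [0.10, 0.15, 0.40, 0.20, 0.10, 0.05],
    [0.30, 0.25, 0.20, 0.15, 0.06, 0.04],
    [0.05, 0.05, 0.10, 0.10, 0.30, 0.40],
]
toy_labels = [0, 3, 5, 5]

top1 = top_k_error(toy_probs, toy_labels, k=1)
top5 = top_k_error(toy_probs, toy_labels, k=5)
print(f"Top-1 error: {top1:.2f}, Top-5 error: {top5:.2f}")
```

Top-5 error can never exceed Top-1 error, because any sample counted as correct under Top-1 is also correct under Top-5.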

Construction of deep residual neural network model based on ResNet50

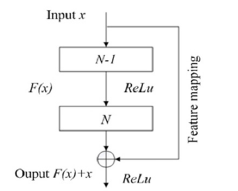

Deep residual network structure: Before residual neural networks were proposed, experts in related fields generally believed that increasing the number of layers of a convolutional neural network was very effective for multi-class and highly nonlinear problems. However, excessively deep network structures lead to problems such as vanishing or exploding gradients, network degradation, and poor training results. To solve these problems, ResNet introduces a residual network structure [13] (Figure 1). This structure uses identity mapping to pass the output of layer n-1 directly to subsequent layers instead of only to layer n as input. The skip connection adds no extra parameters, allows the network to be made deeper, and prevents the learning accuracy from decreasing after many layers are stacked.

Figure 1: Residual network structure.

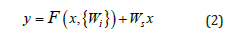

The residual structure is the key element of the entire ResNet50 network. In addition to the forward propagation used in earlier deep convolutional neural networks, the structure passes the input directly across one or more layers to the output. The output of the block is the sum of the original input 𝑥 and the residual F(𝑥), i.e. F(𝑥)+𝑥. The learning goal of ResNet50 is therefore no longer a complete output but the difference between the output and the input: the network fits the residual instead of the entire mapping. This is what allows the network to be made deeper. The residual network structure in Figure 1 can be described by the formula:

$$y = F(x, \{W_i\}) + x \tag{1}$$

where $y$ is the output of the block and $W_i$ are the weights of the stacked layers. In formula (1), the dimensions of $x$ must be the same as those of $F$. If not, a linear projection $W_s$ is applied first to match the dimensions of the shortcut connection, and formula (1) becomes:

$$y = F(x, \{W_i\}) + W_s x \tag{2}$$
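The shortcut computation described in this subsection, F(𝑥)+𝑥 with a linear projection of 𝑥 when the dimensions differ, can be sketched in plain Python. This is a simplified illustration that assumes fully connected layers instead of convolutions; the helper names (`matvec`, `residual_unit`) and the matrices `W1`, `W2`, `Ws` are hypothetical.

```python
def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, x)) for row in W]


def residual_unit(x, W1, W2, Ws=None):
    """Return F(x) + x, where F(x) = W2 * relu(W1 * x).

    Ws is an optional projection applied to x when F(x) and x differ in size.
    """
    fx = matvec(W2, relu(matvec(W1, x)))
    shortcut = x if Ws is None else matvec(Ws, x)
    if len(shortcut) != len(fx):
        raise ValueError("shortcut and residual must have the same dimension")
    return [f + s for f, s in zip(fx, shortcut)]


x = [1.0, -2.0]
zeros_2x2 = [[0.0, 0.0], [0.0, 0.0]]

# With zero weights the residual F(x) vanishes and the unit is an identity mapping.
identity_out = residual_unit(x, zeros_2x2, zeros_2x2)

# Projecting a 2-dimensional input onto a 3-dimensional output.
zeros_3x2 = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
projection = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
projected_out = residual_unit(x, zeros_2x2, zeros_3x2, Ws=projection)
```

The identity case shows why extra layers need not hurt: a unit whose residual is zero simply passes its input through unchanged.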

Recycled waste images vary widely even within a single type of waste: plastic products, for example, come in a bewildering variety of shapes. A neural network model that classifies and identifies plastic products from images must therefore be highly nonlinear, and deepening the network to achieve this must not introduce problems such as exploding gradients, vanishing gradients, or model degradation. The residual neural network used in this paper is therefore well suited to waste classification.

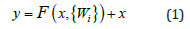

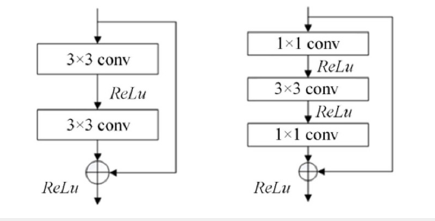

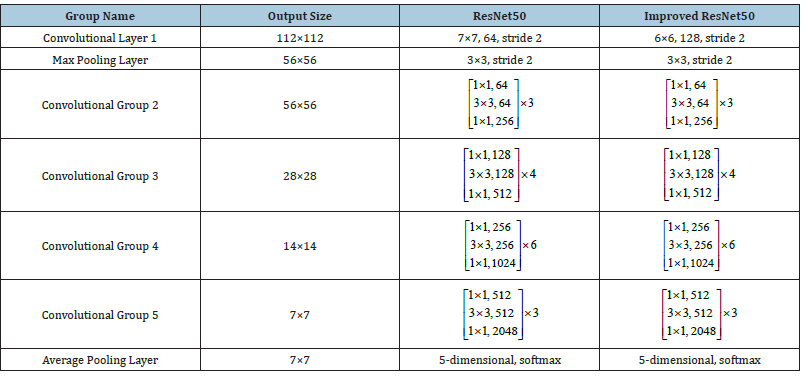

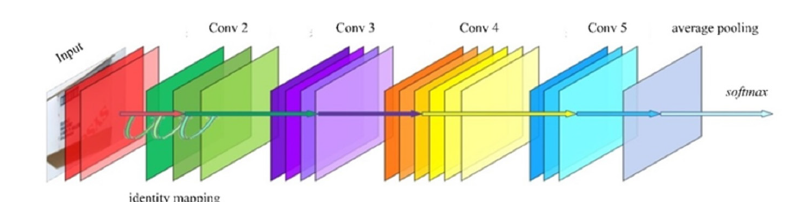

ResNet50 neural network structure: From the 8-layer AlexNet in 2012 to the 19-layer VGGNet in 2014 and in the years since, CNNs [14] have been developing towards greater depth. But simply increasing the number of layers of an ordinary CNN often brings many problems. In 2015, using the residual network architecture, Kaiming He [15] of Microsoft Research and three co-authors trained an ultra-deep 152-layer neural network with higher accuracy and fewer parameters than earlier models. The ResNet [16] structure uses two types of residual modules. The first stacks two 3×3 convolution layers, and the second stacks three convolution layers of 1×1, 3×3 and 1×1, as shown in Figure 2. Like Inception, the second module uses its 1×1 convolution layers to reduce or expand the feature map dimension, so that the number of filters in the 3×3 convolution layer is independent of the output of the previous layer and does not affect the next layer. The ResNet50 network structure is built by stacking these residual modules (Table 1).
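The saving from the 1×1, 3×3, 1×1 module can be made concrete by counting weights. The sketch below assumes a block with 256 input and output channels and a reduced width of 64 channels inside the three-layer module; these widths are illustrative assumptions, not values read from Table 1, and biases are ignored.

```python
def conv_weights(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


channels = 256  # assumed block input/output width
reduced = 64    # assumed width inside the three-layer module

# Two stacked 3x3 convolutions at full width.
two_layer = 2 * conv_weights(3, channels, channels)

# 1x1 reduce, 3x3 at reduced width, 1x1 expand.
three_layer = (
    conv_weights(1, channels, reduced)
    + conv_weights(3, reduced, reduced)
    + conv_weights(1, reduced, channels)
)

print(two_layer, three_layer, round(two_layer / three_layer, 1))
```

Under these assumptions the three-layer module needs roughly one seventeenth of the weights of the two-layer module at the same input and output width, which is why deep variants favor it.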

Figure 2: Two- and three-layer residual network modules.

Table 1: ResNet50 and improved ResNet50 network structure.

Optimization of ResNet50 structure and algorithm

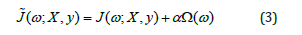

Forward propagation algorithm of ResNet: Like an ordinary convolutional neural network, ResNet builds the model through forward propagation of the input and optimizes the parameters through error backpropagation. During forward propagation, L2 regularization (weight decay) is used to control model complexity and reduce overfitting. L2 regularization adds a regularization term to the original objective function to “punish” models with high complexity. The regularized objective function is:

$$\tilde{J}(\omega; x, y) = J(\omega; x, y) + \alpha\,\Omega(\omega) \tag{3}$$

The goal is to minimize $\tilde{J}$, where 𝒙 is the training sample, y is the training sample label, ω is the weight coefficient, $J$ is the original objective function, and the parameter α adjusts the strength of the regularization. Ω(ω) is the “penalty” term, taken as the L2 norm:

$$\Omega(\omega) = \frac{1}{2}\lVert \omega \rVert_2^2 \tag{4}$$

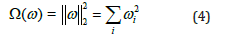

L2 regularization produces smooth weights: it keeps the model weight coefficients ω small and restricts the size of their norm to limit the model space, thereby avoiding overfitting to some extent. In addition, the ResNet module only learns (fits) the residual. Experiments have shown that, compared with an ordinary CNN, it can adapt to deeper network structures and learns more efficiently (Figure 3).
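The effect of the L2 penalty can be sketched numerically. The code below assumes the common convention that the penalty is half the squared L2 norm of the weights, so that its gradient is simply α·ω; the function names and the sample numbers are hypothetical.

```python
import math


def l2_penalty(weights):
    """Half the squared L2 norm of the weights (assumed convention)."""
    return 0.5 * sum(w * w for w in weights)


def regularized_objective(data_loss, weights, alpha):
    """Original loss plus the alpha-weighted L2 penalty."""
    return data_loss + alpha * l2_penalty(weights)


def l2_gradient(weights, alpha):
    """Gradient of the penalty term: each weight is pulled toward zero."""
    return [alpha * w for w in weights]


weights = [3.0, 4.0]
objective = regularized_objective(data_loss=1.0, weights=weights, alpha=0.01)
decay = l2_gradient(weights, alpha=0.01)
print(objective, decay)
```

Because the penalty gradient is proportional to each weight, every update shrinks large weights more than small ones, which is the "weight decay" behavior the text refers to.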

Figure 3: ResNet50 neural network propagation structure.

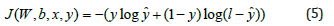

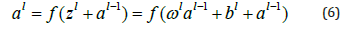

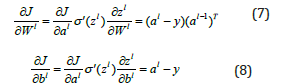

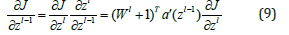

Backpropagation algorithm of ResNet: Residual networks train more efficiently than plain networks of the same depth, and the reason lies in their backpropagation algorithm. ResNet [17] uses ReLU as the activation function, which converges faster than other activation functions and does not suffer from vanishing gradients; it uses cross-entropy as the loss function because its logarithmic term takes into account how close the prediction is to the label, which gives a more accurate measure of the error. Its expression is:

Where y is the true label of the data and ŷ is the predicted label. Backpropagation searches for weights W and biases b that minimize the function in formula (5), and the gradient descent method [18] is used to find the optimal solution. In ResNet, the output of layer 𝑙 is obtained by adding the ordinary output $z^{l}$ of layer 𝑙 to the output $a^{l-1}$ of layer 𝑙-1 (the input to layer 𝑙) and passing the sum through the activation function. Considering a fully connected layer and the propagation of errors from layer 𝑙 back to layer 𝑙-1, the output of layer 𝑙 can be expressed as:

$$a^{l} = \sigma\left(z^{l} + a^{l-1}\right), \qquad z^{l} = W^{l} a^{l-1} + b^{l} \tag{6}$$

Combining formula (5) and formula (6), the gradients of weight W and bias b can be obtained, see the following formulas.

The gradient at layer 𝑙-1 can be derived recursively from the gradient at layer 𝑙 using the chain rule, and the reverse calculation process is:

It can be seen from formula (9) that, in the residual structure, the factor that multiplies the gradient as it passes through a layer contains the weight W plus 1. In a plain CNN the factor is W alone: as the network becomes deeper, regularization keeps the weights small, the product of many such factors shrinks, and the gradient that reaches the early layers becomes very small. Because the residual structure adds the term 1 to the weight W, the gradient is kept from shrinking in this way and the network is easier to train, so model degradation is avoided.
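The argument above can be sketched as a short derivation. This is one derivation consistent with the description in the text, not necessarily the notation of the original formulas. It assumes a fully connected layer with $z^{l} = W^{l}a^{l-1} + b^{l}$, an activation $\sigma$ (ReLU here), a cost $C$, and equal layer widths so that the shortcut needs no projection. The shorthand $\delta^{l} = \partial C / \partial (z^{l} + a^{l-1})$ and the element-wise product $\odot$ are introduced here for illustration.

```latex
\begin{aligned}
a^{l} &= \sigma\left(z^{l} + a^{l-1}\right), \qquad z^{l} = W^{l} a^{l-1} + b^{l} \\
\frac{\partial C}{\partial W^{l}} &= \delta^{l}\left(a^{l-1}\right)^{T}, \qquad
\frac{\partial C}{\partial b^{l}} = \delta^{l} \\
\delta^{l-1} &= \left[\left(W^{l} + I\right)^{T} \delta^{l}\right] \odot \sigma'\left(z^{l-1} + a^{l-2}\right)
\end{aligned}
```

The identity matrix $I$ in the last line is the "added 1": even when the entries of $W^{l}$ are small, the backpropagated error keeps a direct path through the shortcut, whereas a plain layer would propagate only $\left(W^{l}\right)^{T}\delta^{l}$.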

Improved ResNet50 network structure: Based on ResNet50, this paper improves the residual unit, reduces the size of the convolution kernel, and increases the network width. For recycled waste images with complex and diverse categories, reducing the convolution kernel size helps reduce the number of model parameters, while increasing the width enables each layer of the deep residual network to learn richer features (such as texture features in different directions and at different frequencies). Compared with the original ResNet50, the improved network has lower computational complexity and stronger learning ability. This paper therefore proposes, for waste images of diverse types and shapes, an improved ResNet50 neural network model [19] for recycled waste classification.

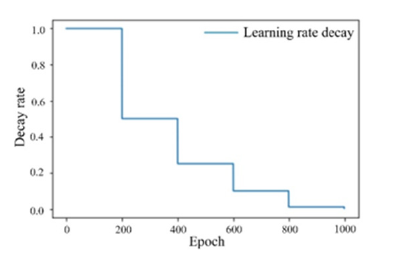

We replaced convolution layer 1 of ResNet50 with a smaller 6×6 convolution with 128 channels and stride 2, followed by a 3×3 max pooling layer with stride 2 (Table 1). Compared with the original ResNet50 structure, the smaller kernel shortens the training time and helps the model parameters fit faster, while the greater width enables each layer to learn richer features. The last fully connected layer uses the softmax function, and all convolution layers use ReLU as the activation function. Training uses the cross-entropy function as the loss (cost) function and the Stochastic Gradient Descent (SGD) algorithm with a batch size of 32. The initial learning rate is set to 0.002, and the learning rate decay is shown in Figure 4. The weights of the ResNet50 network are initialized with the pretrained parameters of He et al. [20].
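The combination of a softmax output, a cross-entropy loss and an SGD update with the stated initial learning rate of 0.002 can be sketched as follows. This is a toy illustration under simplifying assumptions: the update is applied to five logits directly (a stand-in for the network weights), a single sample is used instead of a batch of 32, and no learning rate decay is applied. The function names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    """Cross-entropy loss for a one-hot target."""
    return -math.log(probs[target_index])


def sgd_step(logits, target_index, learning_rate=0.002):
    """One gradient descent step; the softmax cross-entropy gradient is probs - one_hot."""
    probs = softmax(logits)
    grad = [p - (1.0 if i == target_index else 0.0) for i, p in enumerate(probs)]
    return [z - learning_rate * g for z, g in zip(logits, grad)]


CLASSES = ["glass", "paper", "cardboard", "plastic", "metal"]
logits = [0.0, 0.0, 0.0, 0.0, 0.0]  # uninformed starting point
target = CLASSES.index("plastic")

loss_before = cross_entropy(softmax(logits), target)
loss_after = cross_entropy(softmax(sgd_step(logits, target)), target)
print(loss_before, loss_after)
```

With uniform predictions over five classes the loss starts at ln 5, and a single small step toward the correct class lowers it slightly, which is the behavior repeated over many mini-batches during training.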

Figure 4: Learning rate decay of the SGD algorithm.

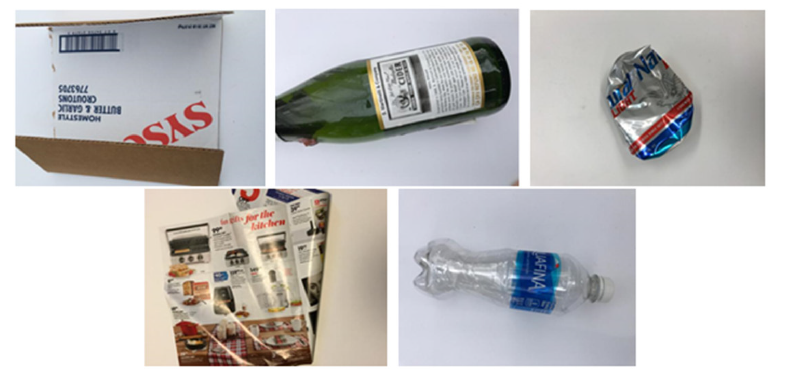

Figure 5: Example images for each dataset category.

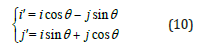

Dataset preprocessing: The image preprocessing [23] applied to the dataset in this paper mainly consists of random rotation and adjustment of brightness, color, saturation, contrast, etc. First, each picture captured by the camera is rotated by a random angle about a point in the image taken as the origin. The angle is drawn from a preset range, so that the model learns pictures of waste at different angles and becomes more adaptable. The image rotation calculation formula [24] is:

Where (𝒊, 𝒋) represents the coordinates of a pixel in the original image F(𝒊, 𝒋), and the transformed coordinates are those of the corresponding pixel in the image after the transformation.
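The coordinate mapping behind an image rotation can be sketched with the standard two-dimensional rotation about a chosen center. This is the textbook form and may differ in sign convention from the formula in [24]; the function name `rotate_point` and its parameters are hypothetical, and the rotation angle is passed in explicitly because the range used in the paper is not given here.

```python
import math


def rotate_point(i, j, angle_degrees, center=(0.0, 0.0)):
    """Rotate pixel coordinates (i, j) by angle_degrees about center.

    Whether the rotation appears clockwise or counter-clockwise on screen
    depends on the orientation of the image axes.
    """
    theta = math.radians(angle_degrees)
    ci, cj = center
    di, dj = i - ci, j - cj
    i_new = ci + di * math.cos(theta) - dj * math.sin(theta)
    j_new = cj + di * math.sin(theta) + dj * math.cos(theta)
    return i_new, j_new


quarter_turn = rotate_point(1.0, 0.0, 90.0)
half_turn = rotate_point(3.0, 2.0, 180.0, center=(2.0, 2.0))
print(quarter_turn, half_turn)
```

In practice the rotated coordinates are generally not integers, so the output image is filled by interpolating between neighboring source pixels.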

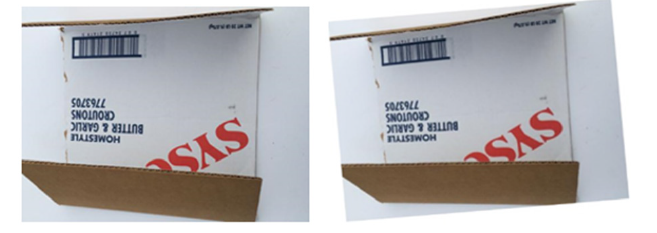

In addition, in practice the quality of the pictures collected for waste classification cannot be guaranteed, nor can it be guaranteed that they are taken in sufficient light (Figure 6). We therefore randomly adjust the brightness, contrast, color, saturation and other parameters of the images in the dataset, so that the trained model generalizes well. In this paper, 50% of the images in the training set are randomly selected and adjusted with a color increment of 18, a contrast increment of 0.5, a saturation increment of 0.5, a brightness increment of 0.125 and a sharpness increment of 0.125. The results are shown in Figure 7.
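The random selection of half of the training images and one of the adjustments can be sketched as follows. The sketch assumes that the brightness increment of 0.125 is an additive shift on pixel intensities scaled to [0, 1], which is only one possible reading of the text; the function names and the fixed seed are hypothetical, and the training-set size of 1434 is the figure reported in the experiments.

```python
import random


def pick_half(indices, rng):
    """Randomly choose 50% of the training indices for photometric adjustment."""
    return set(rng.sample(indices, len(indices) // 2))


def adjust_brightness(pixels, delta):
    """Shift intensities in [0, 1] by delta and clip back to the valid range."""
    return [min(1.0, max(0.0, p + delta)) for p in pixels]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
train_indices = list(range(1434))
selected = pick_half(train_indices, rng)

brighter = adjust_brightness([0.0, 0.5, 0.95], 0.125)
print(len(selected), brighter)
```

Clipping matters for already bright pixels: without it, an increment could push values outside the valid intensity range.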

Figure 6: Random rotation angle of an image.

Figure 7: Original image and preprocessed image.

Experiments and analysis

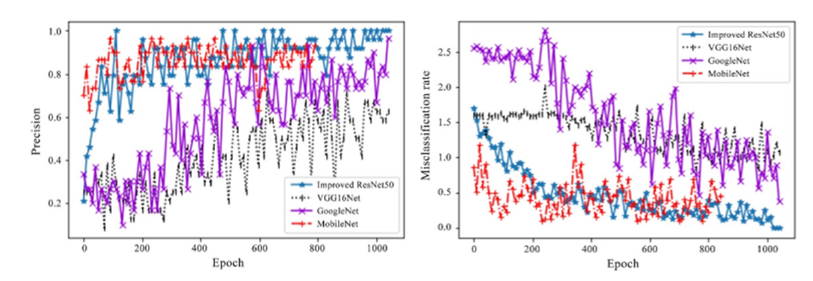

Figure 8: Improved ResNet50 network experiment results.

Experimental platform: The experimental platform [25] used in this paper is NVIDIA’s Tesla V100, an 8-core GPU of the 2017 Volta architecture, with 16 GB of video memory and 32 GB of memory. Figure 8 shows how accuracy and loss change during training on the 1434 pictures in the training set, for the improved ResNet50 model and for several commonly used deep neural network models. With the improved ResNet50 structure, the training accuracy fluctuates between 80% and 100% after about 400 training steps, and the cross-entropy loss is mostly below 0.5 after about 500 training steps. After 1000 iterations, the accuracy gradually approaches 1 and the loss gradually approaches 0, which is consistent with a lower cross-entropy loss corresponding to a higher recognition accuracy. The figure shows that the VGG16Net model converges after about 500 iterations, with a misclassification rate fluctuating around 1.0 and a low recognition rate of only about 50%. The misclassification rate of GoogLeNet keeps decreasing as the number of iterations increases, but it still shows no sign of convergence at 1000 iterations. MobileNet converges fastest, at about 200 iterations, with an accuracy of about 85%, which is similar to the conclusions of Rabano et al. [5]. Compared with these three structures, the final recognition accuracy of the improved ResNet50 is slightly higher than that of MobileNet and GoogLeNet and significantly higher than that of VGG16Net; the improved ResNet50 does not iterate as fast as MobileNet but is significantly faster than VGG16Net and GoogLeNet.

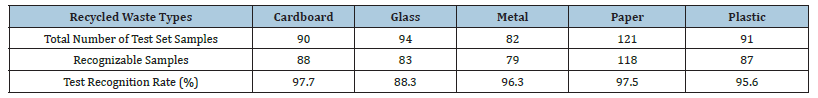

Experimental results and analysis: After training the improved ResNet50 model, we tested it on the 478 test set pictures, divided into five categories: cardboard, glass, metal, paper, and plastic. The experimental results are shown in Tables 2 and 3. According to Table 2, glass waste is the hardest to identify, mainly because transparent glass is not clearly distinguished from the white background and glass bottles come in a variety of colors, which makes glass features difficult to extract during learning and lowers the recognition rate. The recognition accuracy for cardboard, metal, paper, and plastic is higher, above 95% in each case. This paper then compares the improved ResNet50 with common neural network structures in deep learning, as shown in Table 3. The recognition rate of the ResNet50 structure is significantly higher than that of the other structures; the fine-tuning in this paper raises the recognition rate by 1% over the original ResNet50 and also improves the training speed.
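The reported figures can be cross-checked with simple arithmetic. The sketch below assumes that the 95.19% accuracy quoted in the abstract was measured on the 478 test pictures; under that assumption it looks for the whole number of correct predictions that rounds to the reported value, and it also computes the share of the data held out for testing. The variable names are hypothetical.

```python
TRAIN_IMAGES = 1434        # training-set size reported in the text
TEST_IMAGES = 478          # test-set size reported in the text
REPORTED_ACCURACY = 95.19  # percent, as quoted in the abstract

total = TRAIN_IMAGES + TEST_IMAGES
test_share = TEST_IMAGES / total

# Whole numbers of correct predictions whose accuracy rounds to the reported value.
matching_counts = [
    correct
    for correct in range(TEST_IMAGES + 1)
    if round(100 * correct / TEST_IMAGES, 2) == REPORTED_ACCURACY
]

print(total, test_share, matching_counts)
```

Under the stated assumption, the data splits 75% to 25% between training and testing, and exactly one count of correct predictions is consistent with the reported accuracy.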

Table 2: Recognition accuracy of the improved ResNet50 network on samples.

Table 3: Comparison of recognition rates of common deep neural networks in recycling waste classification.

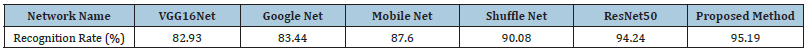

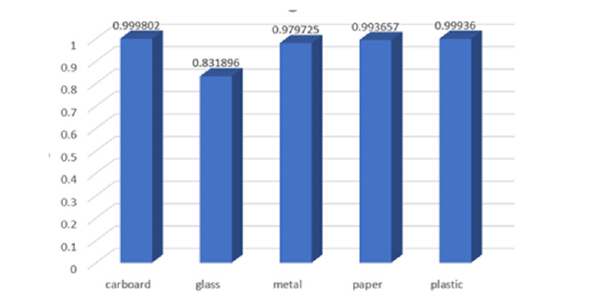

Finally, we examine the model’s predicted probabilities on samples of recycled waste taken from each category of the test set. As shown in Figures 9 and 10, the model’s recognition probability for glass waste is slightly lower than for the other four categories, while its recognition probability for cardboard, metal, paper and plastic is close to 100%. This indicates that the improved ResNet50 structure has strong learning ability.

Figure 9: Prediction probability for different types of garbage.

Figure 10: Predicted probability histogram for different types of garbage.

Conclusion

For waste images of diverse types and shapes, this paper proposes an improved ResNet50 neural network model for recycled waste classification, based on a study of the forward and backward propagation principles of residual structures in deep residual networks and of basic image enhancement algorithms. Starting from the ResNet50 structure, the model reduces the convolution kernel size in convolution layer 1 and increases the network width, which shortens training time and improves recognition accuracy. Classification and recognition experiments on five common types of waste from the open-source recycling waste classification dataset on GitHub show that the improved ResNet50 structure reaches a classification accuracy of 95%, converges faster than VGG16Net and GoogLeNet, and is more accurate than MobileNet. The improved model has a recognition probability close to 100% for cardboard, metal, paper and plastic, with high recognition accuracy and good adaptability.

Acknowledgment

We would like to express our sincere appreciation and gratitude to all individuals and organizations who have contributed to the completion of this article. We extend our thanks to the research participants, whose valuable insights and contributions were essential in conducting the study. We are also grateful to our colleagues for their support and collaboration throughout the research process. Lastly, we would like to thank the reviewers and editors for their insightful comments and suggestions, which greatly improved the quality of this article.

References

1. Meng XY, Tan XC, Wang Y, Wen Z, Tao Y, et al. (2019) Investigation on decision-making mechanism of residents’ household solid waste classification and recycling behaviors. Resources, Conservation and Recycling 140: 224-234.

2. Lü W, Du J (2016) Japan's garbage classification management experience and its enlightenment to China. Journal of Huazhong Normal University (Humanities and Social Sciences) 2016(1): 39-53.

3. Setiawan W, Wahyudin A, Widianto GR (2017) The use of Scale Invariant Feature Transform (SIFT) algorithms to identification garbage images based on product label. 2017 3rd International Conference on Science in Information Technology, Bandung, Indonesia.

4. Yu Y, Yin G, Yin Y (2014) Ray image defect recognition method based on deep learning network. Journal of Transducer Technology 2014(9): 2012-2019.

5. Rabano SL, Cabatuan MK, Sybingco E (2018) Common garbage classification using mobile net. 2018 IEEE 10th International Conference on Humanoid, Baguio, Philippines.

6. Krizhevsky A, Sutskever I, Hinton G (2012) ImageNet classification with deep convolutional neural networks. International Conference on Neural Information Processing Systems.

7. Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Computer Science.

8. Szegedy C, Liu W, Jia Y (2014) Going deeper with convolutions. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, USA.

9. He KM, Zhang XY, Ren SQ, Sun J (2016) Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA.

10. Zhang XY, Zhou XY, Lin MX, Sun R (2018) ShuffleNet: An extremely efficient convolutional neural network for mobile devices. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, Utah, USA.

11. Howard AG, Zhu ML, Chen B, Kalenichenko D, Wang W, et al. (2017) MobileNets: Efficient convolutional neural networks for mobile vision applications. Computer Vision and Pattern Recognition.

12. Mikami H, Suganuma H, U-Chupala P (2018) ImageNet/ResNet-50 training in 224 seconds. Computer Vision and Pattern Recognition.

13. Guo Y, Yang W, Liu Q (2019) Research summary of residual networks. Computer Applications Research.

14. Schmidhuber J (2015) Deep learning in neural networks: An overview. Neural Networks 61: 85-117.

15. Ren SQ, He KM, Girshick R (2017) Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 39: 1137-1149.

16. He KM, Zhang XY, Ren SQ (2016) Identity mappings in deep residual networks. European Conference on Computer Vision pp. 630-645.

17. Liu SP, Tian GH, Xu Y (2019) A novel scene classification model combining ResNet based transfer learning and data augmentation with a filter. Neurocomputing 338: 191-206.

18. Lu L, Zheng ZS, Champagne B (2019) Self-regularized nonlinear diffusion algorithm based on Levenberg gradient descent. Signal Processing 163: 107-114.

19. Dai J, Tong J (2018) Galaxy morphology classification based on deep residual networks. Progress in Astronomy 4: 384-397.

20. He KM, Zhang XY, Ren SQ (2015) Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification, Santiago, Chile, pp. 1026-1034.

21. TensorFlow for poets.

22. Uma Vetri Selvi G, Nadarajan R (2010) DICOM Image compression using bilinear interpolation. Proceedings of the 10th IEEE International Conference on Information Technology and Applications in Biomedicine, Corfu, Greece.

23. Da Silva K, Kumar P, Choonara YE (2019) Preprocessing of medical image data for three-dimensional bioprinted customized-neural-scaffolds. Tissue Engineering 25(7): 401-410.

24. Zhu H (2013) Fundamentals and applications of digital image processing. Tsinghua University Press, Beijing, China.

25. Bao J, Ye B, Wang X (2019) Research on eddy current testing image classification of titanium plates based on SSDAE Deep neural network. Chinese Journal of Scientific Instrument 4: 238-247.

© 2023 Anle Mu. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

This work is licensed under a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
