
Open Access Biostatistics & Bioinformatics

An RGB-D Camera-based Travel Aid for the Blind

Mu-Chun Su* and Wei-Jen Lin

Department of Computer Science & Information Engineering, National Central University, Taiwan

*Corresponding author: Mu-Chun Su, Department of Computer Science & Information Engineering, National Central University, Taiwan

Submission: April 23, 2018; Published: April 30, 2018

ISSN: 2578-0247

Volume 1, Issue 4

Abstract

In this paper, we present the application of an RGB-D camera in developing a travel aid for blind or visually impaired people. The goal of the proposed travel aid is to provide the user with information about obstacles along the travelled path so that he or she can walk safely and quickly in an unfamiliar environment. The performance of the travel aid depends heavily on whether the ground plane can be successfully detected. We therefore present a new approach to detecting the ground plane from a depth image. Two experiments were designed to test the performance of the proposed travel aid.

Keywords: Travel aid; Blind; Visually impaired people; Assistive technology

Introduction

Without mobility, no one can lead an independent life. Blind and visually impaired people suffer greatly from the lack of independent mobility, and as a result their quality of life is reduced far more than most of us can imagine. Nowadays, most blind people still rely on white canes. Only a minority depend on guide dogs, because the cost of breeding and training a guide dog is usually not affordable for every blind person. While the white cane has many appealing properties (e.g., light weight, small size, and low cost), its main problem is that it cannot detect obstacles beyond its reach of 1-2 m. In addition, a white cane cannot actively indicate which way the user may walk, nor can it warn him or her in advance of obstacles ahead.

Fortunately, with advances in modern information and communication technology (ICT), many kinds of electronic travel aids (ETAs) have been developed, and some have even been commercialized. The goal of ETAs is to help blind people perceive their surroundings. Some ETAs are based on sensors (e.g., ultrasonic sensors, laser beams, and vision), while others are based on robotics [1-18]. For example, Gao & Li [1] introduced a dynamic ultrasonic ranging system as a mobility aid, and Mihajlik et al. [5] proposed a DSP-based ultrasonic navigation aid. Ulrich & Borenstein [6] developed the Guide Cane based on mobile robot technologies, and Kulyukin et al. [9] introduced a mobile robotic base with an RFID reader and a laser range finder. Su et al. [15] discussed a vision-based travel aid. Hesch & Roumeliotis [16] introduced a portable indoor localization aid incorporating a laser scanner. Ye et al. [17] developed a co-robotic cane that uses a 3-D camera. Each approach has its own merits and limitations. For example, ultrasonic ETAs require the user to actively scan the environment to detect obstacles, robotic navigation aids may face mobility restrictions (e.g., stairs and tight spaces), and some ETAs are too expensive for many users.

Motivated by these limitations, we propose an RGB-D camera-based travel aid to complement the white cane. The proposed aid is intended to substantially extend the environmental detection range of a blind person who uses a white cane. The prototype consists of a Kinect sensor, a portable computer, and an earphone. The goal of the travel aid is to provide the user with wayfinding information. When a blind person in an unfamiliar place can quickly obtain the distances and orientations of the obstacles ahead, he or she can walk through the place with a white cane more confidently. The information about the obstacles is delivered to the user through the earphone. The remainder of the paper is organized as follows. The next section presents the RGB-D camera-based travel aid for the blind, the following section describes the simulation results, and the final section gives the conclusions.

The RGB-D Camera-Based Travel Aid

The proposed RGB-D camera-based travel aid involves the following four steps.

Step 1: Image acquisition

The Kinect sensor acquires a depth image of the environment in front of the user.
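The later steps operate on 3-D points rather than raw depth values. As a minimal sketch (in Python, and not the authors' implementation), a depth image can be back-projected into camera-space points with the standard pinhole camera model. The intrinsic parameters `fx`, `fy`, `cx`, `cy`, the millimetre depth units, the "y points up" convention, and the function name `depth_to_points` are assumptions for illustration; a real system would use calibrated intrinsics and must express the points in the same coordinate frame as the ground plane obtained from the Kinect SDK.

```python
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]


def depth_to_points(
    depth_mm: List[List[int]], fx: float, fy: float, cx: float, cy: float
) -> List[List[Optional[Point3]]]:
    """Back-project a depth image into camera-space 3-D points (metres).

    Pinhole model: X = (u - cx) * Z / fx, Y = (cy - v) * Z / fy, Z = depth.
    The image row index v grows downwards, so Y is flipped to point up.
    A depth of 0 is treated as "no reading" and yields None.
    """
    points: List[List[Optional[Point3]]] = []
    for v, row in enumerate(depth_mm):
        out_row: List[Optional[Point3]] = []
        for u, d in enumerate(row):
            if d <= 0:
                out_row.append(None)  # invalid or missing depth sample
                continue
            z = d / 1000.0  # millimetres -> metres
            out_row.append(((u - cx) * z / fx, (cy - v) * z / fy, z))
        points.append(out_row)
    return points


# Hypothetical intrinsics for a 640x480 depth camera; calibrate for real use.
cloud = depth_to_points([[1000, 0], [2000, 1500]], fx=580.0, fy=580.0, cx=320.0, cy=240.0)
```

Keeping the result as a grid (rather than a flat list) preserves pixel neighborhoods, which the neighbor-based ground-plane test in Step 2 relies on.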

Step 2: Ground plane detection

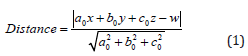

The performance of the proposed travel aid depends heavily on how well the ground plane is detected. If the ground plane can be detected reliably, the foreground (e.g., obstacles and walls) can be segmented correctly. RANSAC plane fitting [18] and the V-disparity image [19] are two common approaches for detecting the ground plane, each with its own merits and limitations. In our approach, we make full use of the ground plane information, $ax + by + cz = w$, provided by the Kinect SDK. The basic idea is to compute the distance between a point $(a_0, b_0, c_0)$ and the 3-D plane $ax + by + cz = w$ as follows:

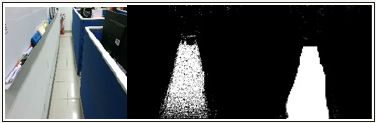
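The distance formula above is available only as an image. For a plane $ax + by + cz = w$ and a point $(a_0, b_0, c_0)$, the standard point-to-plane distance is $d = |a a_0 + b b_0 + c c_0 - w| / \sqrt{a^2 + b^2 + c^2}$. The Python sketch below implements this together with the threshold test described next; the 3 cm threshold and the function names are hypothetical, since the paper does not report its threshold. If a plane is supplied with its constant on the left-hand side (i.e., $ax + by + cz + e = 0$), use $w = -e$.

```python
import math
from typing import Tuple

Plane = Tuple[float, float, float, float]  # (a, b, c, w) for a*x + b*y + c*z = w
Point3 = Tuple[float, float, float]


def point_plane_distance(p: Point3, plane: Plane) -> float:
    """Distance from point p = (a0, b0, c0) to the plane a*x + b*y + c*z = w."""
    a, b, c, w = plane
    a0, b0, c0 = p
    return abs(a * a0 + b * b0 + c * c0 - w) / math.sqrt(a * a + b * b + c * c)


def passes_distance_test(p: Point3, plane: Plane, max_dist: float = 0.03) -> bool:
    """First ground-plane criterion: the point lies within max_dist of the plane.

    max_dist (metres) is a hypothetical value; the paper does not report its threshold.
    """
    return point_plane_distance(p, plane) < max_dist


# Example: a floor 1.2 m below the camera, i.e. y = -1.2 -> (a, b, c, w) = (0, 1, 0, -1.2).
floor = (0.0, 1.0, 0.0, -1.2)
print(passes_distance_test((0.3, -1.18, 2.0), floor))  # True: about 2 cm above the floor
print(passes_distance_test((0.3, -0.80, 2.0), floor))  # False: 40 cm above the floor
```

Dividing by $\sqrt{a^2 + b^2 + c^2}$ makes the test valid even when the plane normal supplied by the sensor is not unit length.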

If the distance between a point and the ground plane is below a pre-specified threshold, the point is declared to belong to the ground plane. Although this method is very straightforward, it is not reliable enough to serve as the only criterion, because the depth image is sometimes noisy. Therefore, we use a second criterion to further verify whether a point belongs to the ground plane.

Let $A$ denote the point $(a_0, b_0, c_0)$, $B$ one of its neighboring points $(a_1, b_1, c_1)$, and $C$ another neighboring point $(a_2, b_2, c_2)$. These points define two vectors, $\overrightarrow{AB} = (a_1 - a_0, b_1 - b_0, c_1 - c_0)$ and $\overrightarrow{AC} = (a_2 - a_0, b_2 - b_0, c_2 - c_0)$. Their cross product $\overrightarrow{AB} \times \overrightarrow{AC}$ is perpendicular to both vectors and therefore approximates the local surface normal at $A$. If the angle between $\overrightarrow{AB} \times \overrightarrow{AC}$ and the ground plane normal $(a, b, c)$ provided by the Kinect SDK is smaller than a pre-specified threshold, the point $(a_0, b_0, c_0)$ is declared to belong to the ground plane.

Figure 1 shows an example of ground plane detection. Points that do not belong to the detected ground plane are shown in black in Figure 1b. Two morphological operators (erosion and dilation) are then applied to the result in Figure 1b to make the detected ground plane smoother and more consistent, as shown in Figure 1c.
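The two criteria can be combined per pixel as in the following Python sketch, which operates on an organized grid of 3-D points (for example, one obtained by back-projecting the depth image). The choice of the right-hand and lower pixels as the neighbors $B$ and $C$ (falling back to the left or upper pixel at the image border), the 3 cm and 10-degree thresholds, and all function names are our assumptions; the paper does not specify them. Because the sign of $\overrightarrow{AB} \times \overrightarrow{AC}$ depends on the order of the neighbors, the sketch compares the two directions without regard to sign.

```python
import math
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, w) for a*x + b*y + c*z = w
Grid = List[List[Optional[Point3]]]


def _sub(p: Point3, q: Point3) -> Point3:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _cross(u: Point3, v: Point3) -> Point3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _dot(u: Point3, v: Point3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def _angle_deg(u: Point3, v: Point3) -> float:
    """Angle between the lines spanned by u and v, ignoring sign (0 to 90 degrees)."""
    cos = abs(_dot(u, v)) / math.sqrt(_dot(u, u) * _dot(v, v))
    return math.degrees(math.acos(min(1.0, cos)))


def ground_mask(points: Grid, plane: Plane,
                max_dist: float = 0.03, max_angle_deg: float = 10.0) -> List[List[bool]]:
    """Label each pixel as ground if it passes both the distance and the normal test."""
    h, w_img = len(points), len(points[0])
    a, b, c, w = plane
    normal = (a, b, c)
    n_len = math.sqrt(_dot(normal, normal))
    mask = [[False] * w_img for _ in range(h)]
    if h < 2 or w_img < 2:
        return mask  # no neighbours available to form AB and AC
    for v in range(h):
        for u in range(w_img):
            A = points[v][u]
            # B: horizontal neighbour, C: vertical neighbour (backward at the border).
            B = points[v][u + 1 if u + 1 < w_img else u - 1]
            C = points[v + 1 if v + 1 < h else v - 1][u]
            if A is None or B is None or C is None:
                continue
            # Criterion 1: point-to-plane distance below the threshold.
            if abs(_dot(normal, A) - w) / n_len >= max_dist:
                continue
            # Criterion 2: local normal AB x AC nearly parallel to the plane normal.
            n_local = _cross(_sub(B, A), _sub(C, A))
            if _dot(n_local, n_local) == 0.0:
                continue  # degenerate case: repeated or collinear points
            mask[v][u] = _angle_deg(n_local, normal) < max_angle_deg
    return mask
```

A point that lies close to the plane but sits on a steep surface (for example, the bottom edge of a box) passes the distance test yet fails the normal test, which is the kind of noise the second criterion is meant to reject.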

Figure 1: Detection of the ground plane: (a) the original color image; (b) the detected ground plane; (c) the smoothed ground plane.
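The paper does not state the structuring element or the order of the two morphological operators. Assuming a 3x3 square window and erosion followed by dilation (that is, a morphological opening, which removes isolated false-positive pixels), the smoothing step can be sketched in pure Python as follows. Pixels outside the image are simply ignored, which is one of several possible border conventions; library routines such as `scipy.ndimage.binary_opening` perform the same operation efficiently but may treat the border differently. The function names are hypothetical.

```python
from typing import Iterator, List

Mask = List[List[bool]]


def _window(mask: Mask, v: int, u: int, r: int) -> Iterator[bool]:
    """Yield the in-bounds values of the (2r+1)x(2r+1) square window centred on (v, u)."""
    h, w = len(mask), len(mask[0])
    for dv in range(-r, r + 1):
        for du in range(-r, r + 1):
            y, x = v + dv, u + du
            if 0 <= y < h and 0 <= x < w:
                yield mask[y][x]


def erode(mask: Mask, r: int = 1) -> Mask:
    """A pixel stays True only if every in-bounds pixel in its window is True."""
    return [[all(_window(mask, v, u, r)) for u in range(len(mask[0]))]
            for v in range(len(mask))]


def dilate(mask: Mask, r: int = 1) -> Mask:
    """A pixel becomes True if any in-bounds pixel in its window is True."""
    return [[any(_window(mask, v, u, r)) for u in range(len(mask[0]))]
            for v in range(len(mask))]


def smooth_ground(mask: Mask, r: int = 1) -> Mask:
    """Erosion followed by dilation (a morphological opening) removes speckle."""
    return dilate(erode(mask, r), r)
```

If the goal were instead to close small gaps inside the ground region, the order would be reversed (dilation followed by erosion, a morphological closing).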

Step 3: Obstacle detection

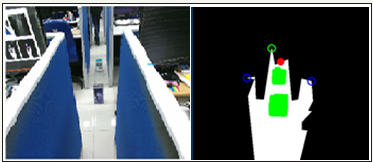

In this step, we detect obstacles lying on the ground plane. Since we are concerned with how far the user can proceed, we use a morphological operator to fill the holes inside the detected ground plane; the locations of these holes then correspond to the locations of obstacles in front of the user. Figure 2 shows an example of obstacle detection.

Figure 2: Detection of obstacles: (a) the original color image; (b) the detected obstacles in green, the ground plane in white, and the background in black.
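Hole filling can be implemented by flood-filling the non-ground pixels that are connected to the image border; any non-ground pixel that is not reached is enclosed by ground and is therefore treated as an obstacle. The following Python sketch assumes 4-connectivity and uses hypothetical function names; `scipy.ndimage.binary_fill_holes` is a comparable library routine.

```python
from collections import deque
from typing import Deque, List, Tuple

Mask = List[List[bool]]


def fill_holes(mask: Mask) -> Mask:
    """Fill regions of False pixels that are completely enclosed by True pixels.

    Non-ground pixels reachable from the image border (4-connectivity) are
    "outside"; every other pixel belongs to the filled ground region.
    """
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue: Deque[Tuple[int, int]] = deque()
    for v in range(h):
        for u in range(w):
            on_border = v in (0, h - 1) or u in (0, w - 1)
            if on_border and not mask[v][u]:
                outside[v][u] = True
                queue.append((v, u))
    while queue:  # breadth-first flood fill through non-ground pixels
        v, u = queue.popleft()
        for dv, du in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            y, x = v + dv, u + du
            if 0 <= y < h and 0 <= x < w and not mask[y][x] and not outside[y][x]:
                outside[y][x] = True
                queue.append((y, x))
    return [[not outside[v][u] for u in range(w)] for v in range(h)]


def obstacle_mask(ground: Mask) -> Mask:
    """Obstacles = holes in the ground plane = filled ground minus original ground."""
    filled = fill_holes(ground)
    return [[f and not g for f, g in zip(frow, grow)]
            for frow, grow in zip(filled, ground)]
```

With this formulation, an obstacle that touches the image border or merges with the background is not enclosed by ground pixels and is therefore not reported as a hole.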

Step 4: Audio signaling

The information about the detected obstacles (e.g., the distance between each obstacle and the user, and the orientation of the obstacle) is output to the user via the earphone.
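The paper does not describe how the distance and orientation are computed or how they are phrased for the user. One plausible sketch, assuming the camera-space points of each detected obstacle are available with $x$ pointing to the user's right and $z$ pointing forward, reports the horizontal distance to the nearest obstacle point and the bearing of the obstacle's centroid, and formats them as a short phrase that could be passed to a text-to-speech engine. The function names, the 10-degree "straight ahead" tolerance, and the message wording are hypothetical.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def describe_obstacle(points: List[Point3]) -> Tuple[float, float]:
    """Return (distance_m, bearing_deg) for one obstacle's 3-D points.

    distance: horizontal distance from the camera to the nearest obstacle point.
    bearing: direction of the obstacle's centroid; 0 = straight ahead,
    positive = to the right (assumes x points right and z points forward).
    """
    if not points:
        raise ValueError("obstacle has no 3-D points")
    distance = min(math.hypot(x, z) for x, _, z in points)
    mean_x = sum(p[0] for p in points) / len(points)
    mean_z = sum(p[2] for p in points) / len(points)
    bearing = math.degrees(math.atan2(mean_x, mean_z))
    return distance, bearing


def to_message(distance: float, bearing: float, ahead_tol_deg: float = 10.0) -> str:
    """Turn an obstacle summary into a short spoken phrase."""
    if abs(bearing) <= ahead_tol_deg:
        direction = "straight ahead"
    else:
        side = "right" if bearing > 0 else "left"
        direction = f"{abs(bearing):.0f} degrees to the {side}"
    return f"Obstacle {distance:.1f} meters away, {direction}"
```

Reporting the nearest point rather than the centroid for distance is a conservative choice: it tells the user when the obstacle will first be reached.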

The Simulations

Experiment one: The detection of the ground plane

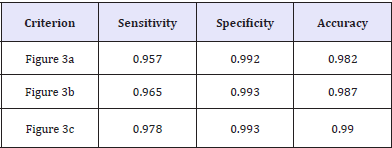

In this experiment, we test the performance of our ground plane detection method. Three images of different environments, each with ground-truth information, were collected, as shown in Figure 3. Table 1 shows the performance of the ground plane detection method described in Step 2; the results indicate that the method achieves high detection performance on these test images.

Figure 3: Images of three different environments used to test the ground plane detection method.

Table 1: Performance of the ground plane detection method described in Step 2.

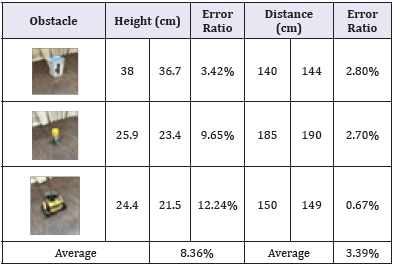
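Table 1 is available only as an image, and the text does not state which metrics it reports. Assuming the detected ground mask is compared pixel by pixel with the ground-truth mask, common measures can be computed as in this Python sketch; the choice of metrics and the function name are our assumptions.

```python
from typing import Dict, List

Mask = List[List[bool]]


def mask_metrics(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Pixel-wise precision, recall, accuracy and IoU of a predicted ground mask."""
    tp = fp = fn = tn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1
            elif p:
                fp += 1  # labelled ground, but not ground in the ground truth
            elif t:
                fn += 1  # ground pixel that was missed
            else:
                tn += 1
    total = tp + fp + fn + tn
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "accuracy": (tp + tn) / total if total else 0.0,
        "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }
```

Reporting precision and recall separately distinguishes obstacles mistaken for ground (low precision, the more dangerous error for a travel aid) from ground mistaken for obstacles (low recall).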

Experiment two: The detection of the obstacles

In this experiment, we test whether the information about the detected obstacles (i.e., the height of each obstacle and the distance between the obstacle and the user) is correct. Ten different obstacles were tested, and Table 2 shows the results. The average error ratios for height and distance are 8.36% and 3.39%, respectively, which indicates that the information provided by the aid is accurate enough to be useful.

Table 2: The performance of the detection of obstacles.
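The paper does not define the error ratio. Assuming it is the relative error $|m - t| / t$ between a measured value $m$ and the true value $t$, averaged over the tested obstacles, it can be computed as in the Python sketch below. The function names and the sample numbers are made up for illustration and do not reproduce Table 2.

```python
from typing import Iterable, Tuple


def error_ratio(measured: float, actual: float) -> float:
    """Relative error |measured - actual| / actual (actual must be positive)."""
    return abs(measured - actual) / actual


def average_error_ratio(pairs: Iterable[Tuple[float, float]]) -> float:
    """Mean relative error over (measured, actual) pairs, e.g. one pair per obstacle."""
    ratios = [error_ratio(m, a) for m, a in pairs]
    if not ratios:
        raise ValueError("no measurements")
    return sum(ratios) / len(ratios)


# Made-up numbers for illustration only (not the values in Table 2):
heights = [(0.82, 0.80), (1.10, 1.20)]  # (measured, actual) in metres
print(f"{average_error_ratio(heights):.2%}")
```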

Conclusion

In this paper, we explore the possibility of applying an RGB-D camera to develop a vision-based travel aid for blind or visually impaired people. A new ground plane detection method was developed. Based on the detected ground plane, obstacles in front of the user can be detected effectively and information about them can be provided to the user. Our experimental results suggest that this approach, if implemented efficiently and effectively, can be genuinely helpful to blind and visually impaired people.

Acknowledgement

This work was supported by the Ministry of Science and Technology, Taiwan, R.O.C., under grants 106-2221-E-008-092, 106-2634-F-038-002, and 107-2634-F-008-001.

References

- Gao RX, Li C (1995) A dynamic ultrasonic ranging system as a mobility aid for the blind. 17th Annual International Conference of the IEEE Engineering in Medicine and Biology Society 2: 1631-1632.

- Shoval S, Borenstein J, Koren Y (1998) Auditory guidance with the Nav Belt-a computerized travel aid for the blind based on mobile robotic technology. IEEE Trans on Biomedical Engineering 45: 1376-1386.

- Kang SJ, Kim YH, Moon IH (2001) Development of an intelligent guide-stick for the blind. Proceedings of the 2001 IEEE International Conference on Robotics & Automation 4: 3208-3213.

- Debonath N, Hailani ZA, Jamaludin S (2001) An electronically guided walking stick for the blind. 2001 Proceedings of the 23rd Annual EMBS International Conference 2: 1377-1379.

- Mihajlik P, Guttermuth M, Seres K, Tatai P (2001) DSP-based ultrasonic navigation aid for the blind. IEEE Instrumentation and Measurement Technology Conference 3: 1535-1540.

- Ulrich I, Borenstein J (2001) The Guide Cane: Applying mobile robot technologies to assist the visually impaired. IEEE Trans Syst Man Cybern A Syst and Humans 31(2): 131-136.

- Ceranka S, Niedzwiecki M (2003) Application of particle filtering in navigation system for blind. 2003 Proceedings 7th International Symposium on Signal Processing and Its Applications 2: 495-498.

- Wong F, Nagarajan R, Yaacob S (2003) Application of stereo vision in a navigation aid for blind people. Proceedings of the Joint Conference of the International Conference on Information, Communications and Signal Processing and the Pacific-Rim Conference on Multimedia, Singapore, pp. 734-737.

- Kulyukin V, Gharpure C, Sute P, DeGraw N, Nicholson J (2004) A robotic way finding system for the visually impaired. In the 16th conference on Innovative applications of artificial intelligence, pp. 864-869.

- Yuan D, Manduchi R (2005) Dynamic environment exploration using a virtual white cane. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 243-249.

- Galindo C, Gonzalez J, Fernandez MJA (2006) Control architecture for human-robot integration: Application to a robotic wheelchair. IEEE Trans Syst Man Cybern B Cybern 36(5): 1053-1067.

- Kulyukin V, Gharpure C, Nicholson J, Osborne G (2006) Robot-assisted way finding for the visually impaired in structured indoor environments. Autonomous Robots 21(1): 29-41.

- Hesch JA, Roumeliotis SI (2007) An indoor navigation aid for the visually impaired. Proceedings of the IEEE International Conference on Robotics and Automation, pp. 3545-3551.

- Giudice NA, Legge GE (2008) Blind navigation and the role of technology. In: Helal A, Mokhtari M, Abdulrazak B (Eds.), The Engineering Handbook of Smart Technology for Aging, Disability and Independence (Computer Engineering Series), John Wiley & Sons, New York, USA.

- Su MC, Hsieh YZ, Huang DY, Zhao YX, Sun CC (2008) A vision-based travel aid for the blind. In: Zoeller EA (Ed.), Pattern Recognition Theory and Application, Nova Science Publishers, New York, USA, pp. 73-89.

- Hesch JA, Roumeliotis SI (2010) Design and analysis of a portable indoor localization aid for the visually impaired. Int J Robotics Res 29(11): 1400-1415.

- Ye C, Hong S, Qian X (2016) A co-robotic cane: a new robotic navigation aid for blind navigation. IEEE Systems Man & Cybernetics Magazine 2(2): 33-42.

- Fischler M, Bolles R (1981) Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM 24(6): 381-395.

- Rebut J, Toulminet G, Bensrhair A (2004) Road obstacles detection using a self-adaptive stereo vision sensor: A contribution to the ARCOS French project. In IEEE Intelligent Vehicles Symposium, pp. 738-743.

© 2018 Mu-Chun Su. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

This work is licensed under a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
