Novel Research in Sciences

Electronic Health Records and Teaching Status Effects on Mortality in New York State Hospitals

Daniel O’Malley and Eduard Babulak*

Institute of Technology and Business in Ceske Budejovice, Czech Republic

*Corresponding author: Eduard Babulak, Institute of Technology and Business in Ceske Budejovice, Czech Republic

Submission: September 05, 2019; Published: September 11, 2019

Volume 2, Issue 1, September 09, 2019

Abstract

Becoming more efficient and increasing the quality of healthcare delivery has been a recurring theme in the U.S. for decades. Recently, electronic health records have been touted as a technology-centric path toward healthcare efficiency and quality, with the Federal government incentivizing large-scale adoption of these systems. The purpose of this quantitative correlational study was to examine the quality differences between teaching and non-teaching hospitals in the post-electronic health record implementation landscape, specifically looking at a possible interaction effect between electronic health records and hospital teaching status. The results of this study showed pneumonia mortality was slightly higher at teaching hospitals, despite the rate being risk-adjusted. The most salient finding of this study was that electronic health records seem to have no effect on mortality in New York State hospitals. There was also no detected interaction effect between electronic health record status and the teaching status of New York State hospitals.

Introduction

The differences between teaching and non-teaching hospitals have been studied in previous decades with a keen focus on the cost of care and the efficiency of these two types of provider organizations [1-4]. In these previous studies, teaching hospitals were generally found to be more expensive (by 10% to 30%) and less efficient than their non-teaching counterparts [2,4]. Since these studies were completed, a wave of information technology adoption has swept through healthcare in the United States with the passing of two influential laws: the Health Information Technology for Economic and Clinical Health (HITECH) Act of 2009, and the Patient Protection and Affordable Care Act (PPACA) of 2010 [5,6].

The HITECH Act provided $27 billion in incentives to bring about a greater use of technology in healthcare, and specifically the increased use of electronic health records, or EHRs [6]. The United States stands out among developed nations for the amount of money spent on healthcare services for its citizens [7]. For example, the U.S. spends roughly 17% of its GDP on health services. EHRs have been touted as a potential cost-saving measure that may also increase organizational efficiency, and there is some research to support this viewpoint [8,9].

Studies performed after the passing of these two instrumental pieces of legislation that examined the differences between teaching hospitals and non-teaching hospitals have largely focused on specific patient conditions or sub-populations and have not looked at teaching hospitals in the light of organization-level attributes or performance post-EHR implementation [10-13]. When examining the effects electronic health records and clinical information systems have on patient care, recent studies show mixed results. Some of the studies found a benefit to patient care via the reduction of readmissions, lower mortality, and superior quality outcomes [14-16]. Others found either no detected effect [17-20] or a trade-off between lower mortality and greater readmissions [21]. With the large-scale implementation of EHRs in all types of hospitals in recent years, the organization-level quality differences between teaching and non-teaching hospitals should be re-examined with an eye toward any potential interaction between these EHRs and teaching status. A potential interaction effect between EHR presence and teaching status has not previously been studied.

Purpose of the Study

The purpose of this quantitative correlational study was to examine the quality performance differences between teaching and non-teaching hospitals in the post-EHR implementation landscape, specifically looking at a possible interaction effect between EHRs and hospital teaching status. This study also includes analyses that may help to confirm or disconfirm past research findings regarding the quality differences between teaching and non-teaching hospitals as well as potential quality differences after the implementation of EHRs. This knowledge may help to establish whether EHRs have made a meaningful impact on the quality of care in teaching hospitals.

The current analysis around EHRs, particularly with respect to their application within teaching hospitals, still has several unexplored frontiers. The United States is firmly on its journey of EHR adoption and optimization. Some research remains relatively ambiguous and conflicting when it comes to the larger picture of how EHRs affect hospitals [16,18,19]. Teaching hospitals, and the potential effects of EHR implementations on those organizations, have largely not been studied at organizational levels in the post-healthcare-reform landscape. This study adds insight in this area of research.

Theoretical Framework

The research outlined in this study addresses questions of quality associated with teaching hospitals as they have transitioned through the HITECH Act and PPACA era, post-EHR implementation. One potential gap in the existing literature is that teaching hospitals are largely not studied using organization-level variables after their EHR implementation: the bulk of studies either focus on sub-populations of patients rather than on organizational performance, or include data that are largely pre-EHR implementation [10,22,23].

There are two key theories that form the foundation of this research. The theories explored in this section are largely divided by the two dimensions of the problem statement. The first dimension relates to the use of EHRs within organizations and how those information systems may contribute to an organization’s efficiency and quality. The second dimension relates to how teaching hospitals have historically compared to non-teaching hospitals, by quality measures. The intersection of these two areas is the focus of this research: have EHRs affected teaching hospitals in the area of healthcare quality differently than non-teaching hospitals?

Within the literature surrounding EHR implementations, most research articles do not explicitly link to a particular theory, either within the information systems research domain or other domains, but some do [16]. For example, Sharma et al. [16] linked to Organizational Information Processing Theory [24] and noted that these types of information systems can integrate various departments within an organization for the benefit of patient care. Other researchers also link to various quality improvement theories, namely those proposed by Deming and Crosby [15]. These quality improvement theories are primarily focused on quality and efficiency of organizations and do not explicitly link to the implementation of information systems, such as EHRs.

Within the literature surrounding the analysis of teaching versus non-teaching hospitals, most researchers similarly do not explicitly link to a particular foundational theory when comparing these hospitals, but there are some links to theory [3]. For example, Rosko [3] linked to Institutional Theory [25] when describing how hospitals determine what amount of uncompensated care to carry.

Research questions

These research questions will add to the body of knowledge in the post-EHR domain and potentially may help to clarify existing conflicting findings of past research by investigating several different dimensions of hospital quality performance with respect to EHR adoption and hospital teaching status.

I. RQ1. How much of an effect do EHRs have on hospital mortality rate as measured by AMI mortality risk adjusted rate?

II. RQ2. How much of an effect do EHRs have on hospital mortality rate as measured by heart failure mortality risk adjusted rate?

III. RQ3. How much of an effect do EHRs have on hospital mortality rate as measured by pneumonia mortality risk adjusted rate?

IV. RQ4. How much of an effect does teaching status have on hospital mortality rate as measured by AMI mortality risk adjusted rate?

V. RQ5. How much of an effect does teaching status have on hospital mortality rate as measured by heart failure mortality risk adjusted rate?

VI. RQ6. How much of an effect does teaching status have on hospital mortality rate as measured by pneumonia mortality risk adjusted rate?

VII. RQ7. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by AMI mortality risk adjusted rate?

VIII. RQ8. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by heart failure mortality risk adjusted rate?

IX. RQ9. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by pneumonia mortality risk adjusted rate?

Hypotheses

The hypotheses for this research are listed below. These specific hypotheses are directly related to each of the research questions in the previous section.

I. H10. EHRs do not have a statistically significant effect on hospital mortality rate as measured by AMI mortality risk adjusted rate.

II. H1a. EHRs have a statistically significant effect on hospital mortality as measured by AMI mortality risk adjusted rate.

III. H20. EHRs do not have a statistically significant effect on hospital mortality rate as measured by heart failure mortality risk adjusted rate.

IV. H2a. EHRs have a statistically significant effect on hospital mortality as measured by heart failure mortality risk adjusted rate.

V. H30. EHRs do not have a statistically significant effect on hospital mortality rate as measured by pneumonia mortality risk adjusted rate.

VI. H3a. EHRs have a statistically significant effect on hospital mortality as measured by pneumonia mortality risk adjusted rate.

VII. H40. Teaching status does not have a statistically significant effect on hospital mortality rate as measured by AMI mortality risk adjusted rate.

VIII. H4a. Teaching status does have a statistically significant effect on hospital mortality as measured by AMI mortality risk adjusted rate.

IX. H50. Teaching status does not have a statistically significant effect on hospital mortality rate as measured by heart failure mortality risk adjusted rate.

X. H5a. Teaching status does have a statistically significant effect on hospital mortality as measured by heart failure mortality risk adjusted rate.

XI. H60. Teaching status does not have a statistically significant effect on hospital mortality rate as measured by pneumonia mortality risk adjusted rate.

XII. H6a. Teaching status does have a statistically significant effect on hospital mortality as measured by pneumonia mortality risk adjusted rate.

XIII. H70. There is not a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality rate with and without an EHR as measured by AMI mortality risk adjusted rate.

XIV. H7a. There is a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality with and without an EHR as measured by AMI mortality risk adjusted rate.

XV. H80. There is not a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality rate with and without an EHR as measured by heart failure mortality risk adjusted rate.

XVI. H8a. There is a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality with and without an EHR as measured by heart failure mortality risk adjusted rate.

XVII. H90. There is not a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality rate with and without an EHR as measured by pneumonia mortality risk adjusted rate.

XVIII. H9a. There is a statistically significant difference between major and minor teaching (to include non-teaching) hospitals’ mortality with and without an EHR as measured by pneumonia mortality risk adjusted rate.

Research Methods and Design

The research method and design for this study incorporated a two-way multivariate ANOVA (MANOVA) design. The two independent variables for this MANOVA test are EHR category (whether an EHR is present or not in each hospital) and the teaching category of the hospital (non-teaching or minor teaching, versus major teaching), based on previous research [26]. The dependent variables are acute myocardial infarction (AMI) mortality risk adjusted rate, heart failure mortality risk adjusted rate, and pneumonia mortality risk adjusted rate, consistent with analysis methods from previous research [18,27,28].

The MANOVA matrix was created by processing the data files in the ways outlined below. The final matrix included six columns and one row for each hospital in the study. The columns are: (1) the hospital name, (2) teaching category (non-teaching and minor teaching, or major teaching), (3) EHR category (no EHR, or EHR), (4) AMI Risk Adjusted Rate, (5) Heart Failure Risk Adjusted Rate, (6) Pneumonia Risk Adjusted Rate.

A two-way multivariate ANOVA (MANOVA) design is the most appropriate to answer this type of question as the hospitals being evaluated are grouped in different ways. This grouping scheme (EHR or no EHR; non-teaching and minor teaching, or major teaching hospital) allows the inter-group differences to be evaluated statistically. These independent variables combined with several dependent variables allow the researcher to answer not only whether the hospital groups have statistically significant differences between them but, by running post-hoc analyses, which combinations of variables are significant, along with their corresponding effect sizes. As this is essentially a group-comparison research design with largely continuous variables, other algorithms such as regression or chi-square would not allow the analysis of potential interaction effects between the two independent variables.
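The interaction that the two-way design is meant to expose can be pictured as a difference of differences between cell means. The sketch below is illustrative only: the cell values are invented, the function name `interaction_contrast` is hypothetical, and it computes a descriptive contrast for a single dependent variable rather than the MANOVA test itself.

```python
import statistics

# Hypothetical risk-adjusted rates for each (EHR category, teaching category)
# cell of the 2 x 2 design. These numbers are made up for illustration.
cells = {
    ("EHR", "major"): [50.0, 54.0],
    ("No EHR", "major"): [56.0, 60.0],
    ("EHR", "other"): [40.0, 44.0],
    ("No EHR", "other"): [46.0, 50.0],
}


def interaction_contrast(cell_values):
    """Difference between the EHR effect in major teaching hospitals and
    the EHR effect in non-teaching or minor teaching hospitals."""
    means = {key: statistics.mean(vals) for key, vals in cell_values.items()}
    ehr_effect_major = means[("EHR", "major")] - means[("No EHR", "major")]
    ehr_effect_other = means[("EHR", "other")] - means[("No EHR", "other")]
    return ehr_effect_major - ehr_effect_other


contrast = interaction_contrast(cells)
print(f"interaction contrast: {contrast}")
```

A contrast near zero means the EHR difference is about the same in both teaching categories, which is the pattern a non-significant interaction term would be consistent with.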

Population

The population of this study was a group of New York State hospitals with 2014 data. The total population of potential hospitals is 203 based on the New York hospital data available but the final MANOVA matrix included 62 hospitals. This was due to various data not being available for all hospitals. Of the 203 potential hospitals, 173 are Not for Profit Corporations, 13 are Municipalities, five are State, five are County, five are Business Corporations, and two are Public Benefit Corporations. Of the final 62 hospitals ultimately included in the analysis, 50 are Not for Profit Corporations, eight are Municipalities, three are State, and one is County.

Data Collection, Processing, and Analysis

The data used in this analysis are publicly available from CMS and the New York State data portal and include the following five files: (1) NYS All Payer Hospital Inpatient Discharges data for the year 2014, (2) CMS Hospital Quarterly Measures file from the Hospital Compare data for the year 2014, (3) CMS Impact file data for the year 2014, (4) NYS Health Facility General Information data, and (5) NYS All Payer Inpatient Quality Indicators data for 2014. The data files from New York State are available to the public from that state’s data portal (https://data.ny.gov/), and the data files from CMS are similarly available to the public from the healthdata.gov data portal (https://www.healthdata.gov/).

Since these files contain data at different levels of detail, a certain amount of data processing was needed to join the files together and to create the two-way MANOVA matrix for the ultimate analysis. The level of the data represents the lowest level of detail in each file. For example, some files have data at the hospital level, while other files have data at the individual inpatient discharge level. To analyze the data at the hospital level (since that is the core of this research), rollups and aggregations were required. All data preparation needed to create the final MANOVA matrix was done in KNIME (version 3.2.2).
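The roll-up and join described above can be sketched in a few lines. This is not the KNIME workflow used in the study. It is a minimal illustration under stated assumptions: the record layout, hospital identifiers, and function names (`roll_up`, `build_matrix`) are hypothetical, and the computed rate is a crude rate, not the published risk-adjusted rate.

```python
from collections import defaultdict

# Hypothetical discharge-level records: (hospital_id, died). Illustrative only.
discharges = [
    ("H001", 0), ("H001", 1), ("H001", 0), ("H001", 0),
    ("H002", 0), ("H002", 0),
]

# Hypothetical hospital-level attributes, as if read from a second file.
hospital_info = {
    "H001": {"teaching": "major", "ehr": "EHR"},
    "H002": {"teaching": "non_or_minor", "ehr": "No EHR"},
    "H003": {"teaching": "major", "ehr": "EHR"},  # no discharge rows
}


def roll_up(records):
    """Aggregate discharge-level rows to one summary per hospital."""
    totals = defaultdict(lambda: {"discharges": 0, "deaths": 0})
    for hospital_id, died in records:
        totals[hospital_id]["discharges"] += 1
        totals[hospital_id]["deaths"] += died
    return dict(totals)


def build_matrix(records, info):
    """Join the roll-up to hospital attributes, keeping complete cases only."""
    rolled = roll_up(records)
    matrix = []
    # Hospitals missing from either source are excluded (inner join).
    for hospital_id in sorted(rolled.keys() & info.keys()):
        agg = rolled[hospital_id]
        matrix.append({
            "hospital": hospital_id,
            **info[hospital_id],
            # Crude (not risk-adjusted) deaths per 1,000 discharges.
            "deaths_per_1000": 1000 * agg["deaths"] / agg["discharges"],
        })
    return matrix


matrix = build_matrix(discharges, hospital_info)
for row in matrix:
    print(row)
```

The inner join mirrors the exclusion of hospitals that lack a complete data set: `H003` has attributes but no discharge rows, so it does not appear in the result.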

Using a MANOVA (a priori sample size for MANOVA: special effects and interactions) with an effect size of 0.1, an alpha error probability of 0.05, a power of 0.8, four groups, two predictors, and three response variables, G*Power shows a required total sample size of 72 (“G*Power,” 2016). The effect size chosen is set to detect small to medium effects and larger. The number of hospitals in the sample is smaller than what is needed to detect an effect size of 0.1, but since the sample is ~25% of the total population (of New York State hospitals), a case can be made that a 25% sample rate is large enough to detect even small statistically significant effects, regardless of the actual number in the sample.

The MANOVA test performed was for the combined mortality risk adjusted rate. In this MANOVA, AMI mortality risk adjusted rate, heart failure mortality risk adjusted rate, and pneumonia mortality risk adjusted rate dependent variables were evaluated against the statistical assumptions of the MANOVA test. The first step was to evaluate outliers. For AMI mortality risk adjusted rate, there was one outlier that was greater than four standard deviations above the mean; it was removed. For heart failure mortality risk adjusted rate, there were a total of four cases that were either above or close to three standard deviations above the mean; these were also removed. For pneumonia mortality risk adjusted rate, no cases were above three standard deviations above the mean. After addressing the outliers in the data for these dependent variables, all three displayed normal distributions as evaluated by the Shapiro-Wilk test. After the removals, there were a total of 57 hospitals available for analysis.
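The outlier screen described above can be illustrated with a simple standard-deviation rule. This is a sketch under stated assumptions: the data are invented, the function name `flag_outliers` is hypothetical, and the strict cut-off of three sample standard deviations does not reproduce the judgment applied to cases that were only "close to" three.

```python
import statistics


def flag_outliers(values, threshold=3.0):
    """Return indices of values more than `threshold` sample standard
    deviations above the mean (one-sided, as in the text)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [i for i, v in enumerate(values) if (v - mean) / sd > threshold]


# Hypothetical risk-adjusted rates: twenty ordinary values and one extreme one.
rates = [48.0, 52.0] * 10 + [200.0]

outlier_indices = flag_outliers(rates)
cleaned = [v for i, v in enumerate(rates) if i not in outlier_indices]
print(outlier_indices, len(cleaned))
```

Because the mean and standard deviation are themselves inflated by extreme values, a rule of this kind is conservative in small samples.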

The independence assumption of the MANOVA was evaluated using the Durbin-Watson test, which was not statistically significant (p = .1848); this result is consistent with the assumption of independence. The random sampling assumption was not directly addressed in this research design as all hospitals in the population (which was the State of New York) had some or all of the dependent variables publicly available. Which hospitals’ data were included depended on which hospitals had data available for all dependent variables. Those hospitals that did not have a complete data set in 2014 for all dependent variables were excluded from the analysis.

Multivariate normality was evaluated using a multivariate version of the Shapiro-Wilk test. For this MANOVA, this assumption failed on three of the four MANOVA groups: EHR group (p = .004694), No EHR group (p = .1317), Major Teaching group (p = .01855), and Non-Teaching or Minor Teaching group (p = .03063). Even though most of these groups failed the multivariate normality test, performing log-transformation of all dependent variables actually made the problem worse (i.e., created a drastically less normal distribution than the non-transformed variables). The reported Shapiro-Wilk p-values do not indicate a large departure from normality. Therefore, the MANOVA analysis proceeded with the untransformed variables, given the lack of a robust two-way MANOVA test.

The final MANOVA assumption evaluated was the homogeneity of covariance matrices. The variance of the risk rates was determined to be similar between teaching category groups (as measured by the difference between largest and smallest values in each group). For AMI, the largest value is about 2.39 times bigger than the smallest. For heart failure, the largest value is about 1.44 times bigger than the smallest. For pneumonia, the largest is about 1.82 times larger than the smallest value. The generally accepted threshold value is 2.0 to satisfy this assumption [29].

The covariance of the risk rates was also similar between teaching category groups (as measured by the difference between largest and smallest values in each group). For AMI to heart failure (or heart failure to AMI), it is 1.99. For AMI to pneumonia (or pneumonia to AMI), it is 1.10. For heart failure to pneumonia (or pneumonia to heart failure), it is 1.28. These are below the threshold of 2.0.

The variance of the risk rates was similar between EHR category groups (as measured by the difference between largest and smallest values in each group). For AMI, the largest value is about 1.32 times bigger than the smallest. For heart failure, the largest value is about 1.14 times bigger than the smallest. For pneumonia, the largest is about 1.30 times larger than the smallest value. These are below the threshold of 2.0. The covariance of the risk rates was similar between EHR category groups (as measured by the difference between largest and smallest values in each group). For AMI to heart failure (or heart failure to AMI), it is 1.14. For AMI to pneumonia (or pneumonia to AMI), it is 3.36. For heart failure to pneumonia (or pneumonia to heart failure), it is 1.15. These are generally below the threshold of 2.0.
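The ratio rule used in the preceding paragraphs (largest value divided by smallest, compared against 2.0) can be written out directly. The sketch below assumes that reading of the rule. The function names and the two sample groups are hypothetical, while the `reported` ratios are copied from the text above.

```python
import statistics


def variance_ratio(group_a, group_b):
    """Ratio of the larger sample variance to the smaller one."""
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    return max(var_a, var_b) / min(var_a, var_b)


def within_threshold(ratio, threshold=2.0):
    """True when the largest-to-smallest ratio is below the threshold."""
    return ratio < threshold


# Hypothetical rates for two groups, only to show the calculation.
group_a = [40.0, 50.0, 60.0]   # sample variance 100
group_b = [42.0, 50.0, 58.0]   # sample variance 64
example_ratio = variance_ratio(group_a, group_b)

# Ratios as reported in the text (teaching groups, then EHR groups).
reported = {
    "teaching variance AMI": 2.39,
    "teaching variance heart failure": 1.44,
    "teaching variance pneumonia": 1.82,
    "teaching covariance AMI-heart failure": 1.99,
    "teaching covariance AMI-pneumonia": 1.10,
    "teaching covariance heart failure-pneumonia": 1.28,
    "EHR variance AMI": 1.32,
    "EHR variance heart failure": 1.14,
    "EHR variance pneumonia": 1.30,
    "EHR covariance AMI-heart failure": 1.14,
    "EHR covariance AMI-pneumonia": 3.36,
    "EHR covariance heart failure-pneumonia": 1.15,
}
flagged = [name for name, r in reported.items() if not within_threshold(r)]
print(example_ratio, flagged)
```

Under this rule, two of the twelve reported ratios (2.39 and 3.36) are not below 2.0, which matches the text's wording that the values are "generally" below the threshold.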

Results

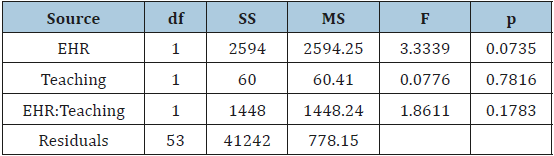

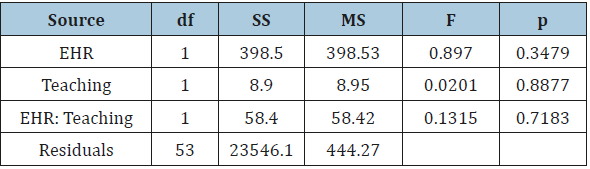

The previously stated research questions are listed below, and each is evaluated in the light of the MANOVA analysis. Tables 1, 2, & 3 below outline the AMI, heart failure, and pneumonia MANOVA results. Table 4 outlines the one-way ANOVA results for pneumonia mortality rates at teaching hospitals.

Table 1: Two-Way Multivariate Analysis of Variance of Risk-Adjusted AMI Mortality Rates.

Table 2: Two-Way Multivariate Analysis of Variance of Risk-Adjusted Heart Failure Mortality Rates.

Table 3: Two-Way Multivariate Analysis of Variance of Risk-Adjusted Pneumonia Mortality Rates.

Table 4: One-Way Analysis of Variance of Risk-Adjusted Pneumonia Mortality Rates in Teaching Hospitals.

I. RQ1. How much of an effect do EHRs have on hospital mortality rate as measured by AMI mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was not statistically significant for AMI mortality risk adjusted rate (F(3, 53) = 3.33, p = .0735). Therefore, we fail to reject the null hypothesis: no relationship was detected.

II. RQ2. How much of an effect do EHRs have on hospital mortality rate as measured by heart failure mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was not statistically significant for heart failure mortality risk adjusted rate (F(3, 53) = .897, p = .3479). Therefore, we fail to reject the null hypothesis: no relationship was detected.

III. RQ3. How much of an effect do EHRs have on hospital mortality rate as measured by pneumonia mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was not statistically significant for pneumonia mortality risk adjusted rate (F(3, 53) = .5902, p = .44575). Therefore, we fail to reject the null hypothesis: no relationship was detected.

IV. RQ4. How much of an effect does teaching status have on hospital mortality rate as measured by AMI mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was not statistically significant for AMI mortality risk adjusted rate (F(3, 53) = .0776, p = .7816). Therefore, we fail to reject the null hypothesis: no relationship was detected.

V. RQ5. How much of an effect does teaching status have on hospital mortality rate as measured by heart failure mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was not statistically significant for heart failure mortality risk adjusted rate (F(3, 53) = .0201, p = .8877). Therefore, we fail to reject the null hypothesis: no relationship was detected.

VI. RQ6. How much of an effect does teaching status have on hospital mortality rate as measured by pneumonia mortality risk adjusted rate?

After running the initial data exploration and analysis, the combined mortality risk adjusted rates MANOVA residuals were normally distributed (p = .09922). The MANOVA analysis was statistically significant for pneumonia mortality risk adjusted rate (F(3, 53) = 5.17, p = .02703, η² = .0936). A second one-way individual ANOVA using only the pneumonia mortality risk adjusted rate dependent variable was used to determine the effect size. The statistical assumptions of this second one-way ANOVA were evaluated and confirmed. The mean for major teaching hospitals was 56.47 mortalities per 1,000 patient discharges with a standard deviation of 17.68. The mean for non-teaching or minor teaching hospitals was 43.13 with a standard deviation of 23.86. Therefore, we reject the null hypothesis: a relationship was detected.

VII. RQ7. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by AMI mortality risk adjusted rate?

The two-way MANOVA analysis was not statistically significant for interaction effects between EHR status and teaching status related to AMI mortality risk adjusted rate (F(3, 53) = 1.86, p = .1783). The MANOVA residuals were normally distributed based on the Shapiro-Wilk normality test (p = .09922). Therefore, we fail to reject the null hypothesis: no relationship was detected.

VIII. RQ8. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by heart failure mortality risk adjusted rate?

The two-way MANOVA analysis was not statistically significant for interaction effects between EHR status and teaching status related to heart failure mortality risk adjusted rate (F(3, 53) = .1315, p = .7183). The MANOVA residuals were normally distributed based on the Shapiro-Wilk normality test (p = .09922). Therefore, we fail to reject the null hypothesis: no relationship was detected.

IX. RQ9. How do major and minor teaching (to include non-teaching) hospitals’ mortality rates compare with and without an EHR as measured by pneumonia mortality risk adjusted rate?

The two-way MANOVA analysis was not statistically significant for interaction effects between EHR status and teaching status related to pneumonia mortality risk adjusted rate (F(3, 53) = 1.78, p = .18754). The MANOVA residuals were normally distributed based on the Shapiro-Wilk normality test (p = .09922). Therefore, we fail to reject the null hypothesis: no relationship was detected.

Discussion

These findings indicate that EHRs play no statistically significant role on their own in New York State hospitals as they relate to mortality for AMI, heart failure, and pneumonia adult inpatients. These results for the EHR independent variable are surprising given the common assumption that these advanced information systems should reduce mortality, increase quality, and lower costs [16,30]. On the other hand, it was discussed earlier that EHR adoption effects are nuanced and conflicting in existing literature, so it is not all that surprising to see that there is no statistically significant effect on these hospitals.

The results of the analysis for the pneumonia mortality risk adjusted rate highlight an important distinction and a break from the long-held defense that major teaching hospitals receive the sickest patients and, therefore, cost more, are less efficient, and possibly have higher mortality. The mortality risk adjusted rates used in this study are computed to account for the illness level of the patient. So, theoretically, a pneumonia mortality risk adjusted rate value can be compared from one hospital to another regardless of the actual illness level of individual pneumonia patient populations seen at each institution. In the analysis of pneumonia mortality risk adjusted rate, major teaching hospitals had a higher risk adjusted mortality rate (M = 56.47, SD = 17.68) than non-teaching or minor teaching hospitals (M = 43.13, SD = 23.86). The effect size was 9.36%, meaning that about 9% of the variance in the rates is associated with the teaching status of the hospital.
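The "share of variance" reading of the effect size can be made concrete with the conventional one-way ANOVA definition of eta squared, the between-group sum of squares divided by the total sum of squares. The source does not state the formula it used, so this definition is an assumption. The group values below are invented and the function name `eta_squared` is hypothetical.

```python
import statistics


def eta_squared(groups):
    """Eta squared for a one-way layout: SS_between / SS_total."""
    all_values = [v for group in groups for v in group]
    grand_mean = statistics.mean(all_values)
    ss_total = sum((v - grand_mean) ** 2 for v in all_values)
    ss_between = sum(
        len(group) * (statistics.mean(group) - grand_mean) ** 2
        for group in groups
    )
    return ss_between / ss_total


# Hypothetical pneumonia rates for two teaching categories (not study data).
major_teaching = [50.0, 60.0]
non_or_minor_teaching = [40.0, 50.0]

effect = eta_squared([major_teaching, non_or_minor_teaching])
print(f"eta squared = {effect:.2f}")
```

On this definition, a value of .0936 would mean that roughly 9% of the total variation in the rates lies between the two teaching categories, with the remainder lying within them.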

Teaching hospital effects

The first teaching hospital effect found was in the quality dimension of pneumonia mortality risk adjusted rate. There was a statistically significant higher rate of pneumonia mortality at major teaching hospitals in New York State. The effect size was small (~9.36%) but present. This effect size means that 9.36% of the variance in pneumonia mortality risk adjusted rate between groups is associated with teaching status. This finding is interesting because the rate was already risk adjusted to account for the sickness of the patient, which means a hospital that receives sicker pneumonia patients is not penalized in this calculated rate as compared to hospitals that receive less sick pneumonia patients. There was not a statistically significant finding observed for the other two measurements of quality (AMI mortality risk adjusted rate and heart failure mortality risk adjusted rate) at teaching hospitals. One possible explanation for this difference appearing only for pneumonia and not for AMI or heart failure could be the method whereby pneumonia mortalities are recorded at these organizations. For example, do major teaching hospitals more accurately attribute pneumonia mortality as compared to non-teaching or minor teaching hospitals? Do non-teaching or minor teaching hospitals incorrectly attribute legitimate pneumonia mortality to some other disease process? These are possible examples of a type of measurement error introduced by the individual hospitals in New York State.

When investigating the hospital teaching status effect on the quality of healthcare services, the extant literature from the last five years is similarly conflicting. There are studies that show lower quality, mixed or no effect on quality [27,31-34], and higher quality at teaching hospitals [35,36]. Generally, the theme in the recent literature is that teaching status either favors lower mortality or has a mixed effect or no effect on mortality. The findings of this research study demonstrate there is a small correlation between teaching status and higher pneumonia mortality, which is contrary to the majority of recent literature.

EHR effects

The extant recent literature surrounding EHR effects on the quality of patient care generally shows mixed results. For example, EHR use has been shown to provide mixed results along the dimension of quality of healthcare service delivery. There are examples where EHR use positively affected (lowered) mortality, reduced hospital readmissions, and reduced medical errors [14]. On the other hand, there were examples of mixed quality performance due to EHR use, where there was either no significant difference in performance after EHR adoption or there was an increase in quality in one area while there was a decrease in quality in another area [21]. This research study did not find any statistically significant EHR effects on quality as measured by AMI, heart failure, and pneumonia risk adjusted rates. This is remarkable given the commonly circulated idea that EHRs can significantly help with patient care in a number of ways. The findings of this research study support the body of literature that demonstrates EHRs have no significant effect on the quality of patient care as measured by AMI, heart failure, and pneumonia risk adjusted rates in New York State hospitals.

Interaction effect

No extant literature was found discussing possible interaction effects of EHR status and teaching status. The findings of this research study demonstrate no statistically significant interaction effect between EHR status and teaching status (as measured by AMI, heart failure, and pneumonia risk adjusted rates) in New York State hospitals. The implication appears to be that hospital leadership can approach EHR implementations in their hospitals as distinct projects and do not need to frame those EHR projects in the light of their organizational teaching status. There appears to be no discernible interaction between these predictors.
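What an absent interaction means in a two-by-two design (EHR status by teaching status) can be sketched as a "difference of differences" of cell means. The sketch below is descriptive only: it does not perform the significance test used in the study, the function name `interaction_contrast` is hypothetical, and the cell values are invented for illustration.

```python
from statistics import mean


def interaction_contrast(cells):
    """Difference of differences of cell means in a 2x2 design.

    `cells` maps (teaching, ehr) boolean pairs to lists of rates.
    A value near zero means the EHR effect is about the same
    regardless of teaching status, i.e. no interaction.
    """
    ehr_effect_teaching = mean(cells[(True, True)]) - mean(cells[(True, False)])
    ehr_effect_other = mean(cells[(False, True)]) - mean(cells[(False, False)])
    return ehr_effect_teaching - ehr_effect_other


# Hypothetical mortality risk adjusted rates (NOT the study's data).
cells = {
    (True, True): [13.0, 15.0],    # major teaching, EHR
    (True, False): [14.0, 16.0],   # major teaching, no EHR
    (False, True): [11.0, 13.0],   # other hospitals, EHR
    (False, False): [12.0, 14.0],  # other hospitals, no EHR
}

print("Interaction contrast:", interaction_contrast(cells))
```

In this toy data the EHR difference is the same in both hospital types, so the contrast is zero. A formal analysis would additionally test whether an observed contrast differs from zero by more than chance would explain.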

Recommendations for practice

There are several recommendations for practice that come out of this research. These recommendations will allow those in the healthcare industry to potentially focus their EHR projects, taking into consideration the effects those information systems have on the delivery of care as well as having a fuller understanding of how these systems may or may not interact with their type of hospital. Additionally, it is valuable to understand the differences between types of hospitals when it comes to the quality of healthcare service delivery.

As previously mentioned, one of the most striking outcomes of this research is that EHR status has not been shown to correlate with an increase in quality as measured by AMI, heart failure, or pneumonia mortality risk adjusted rates. Though the existing literature is relatively conflicting, this research study supports the position that EHRs do not have a significant impact in this area. Of course, there are many potential explanations for this. For example, just the fact that an organization has implemented and is using an EHR does not mean that organization is using it well or fully. Perhaps the healthcare industry must mature in its processes and workflows in order to fully utilize these complex information systems and, therefore, realize the potential benefits for their patients. On the other hand, it is comforting to see that EHR status does not have a statistically significant negative impact on healthcare service delivery, either. So, the first recommendation for practice for healthcare leaders is to begin to explore and fully utilize EHRs in their internal processes and workflows to potentially see patient care benefits.

It has also been shown in this research study that there is no detectable interaction effect between EHR status and teaching hospital status. In effect, this means that hospital leadership should likely approach EHR implementations as separate projects, independent of the type of hospital where they are implemented. For example, if a large health system conglomerate has a dozen hospitals, with a mix of both major teaching hospitals and non-teaching or minor teaching hospitals, that health system can likely treat each hospital EHR implementation in a similar manner, regardless of the teaching status of that particular hospital. This is the second recommendation for practice.

The one surprising aspect of this research is in the quality dimension for pneumonia patients treated at major teaching hospitals. As was shown, pneumonia mortality risk adjusted rates are higher at major teaching hospitals, but the observed effect size is small. Given that these mortality risk adjusted rates should take into consideration the sickness of patients, major teaching hospitals’ rates should be similar to non-teaching or minor teaching hospitals’ rates if the actual mortality rates were the same. Since there is a difference, hospital leadership should consider how pneumonia patients are treated at major teaching hospitals. This is the third and final recommendation for practice.

Recommendations for future research

The first recommendation for future research is to investigate possible measurement error in the pneumonia mortality risk adjusted rate measure. It has been shown in this research study that pneumonia risk adjusted rates are higher at major teaching hospitals. One possible explanation for this higher observed rate is that there is some form of bias in the definition or collection of this measurement in different hospitals. For example, do major teaching hospitals more accurately attribute pneumonia mortality as compared to non-teaching or minor teaching hospitals? Do non-teaching or minor teaching hospitals incorrectly attribute legitimate pneumonia mortality to some other disease process? These are possible examples of a type of measurement error by the individual hospitals in New York State. Future research should attempt to refine or control this measure to determine if this difference in pneumonia mortality actually exists.

The second recommendation for future research is to look longitudinally at EHR use at hospitals over time. Instead of the simplistic EHR attribute group used in this research study, future research could employ a repeated measures research design where the same hospitals are tracked over the course of several years, looking at EHR use and its possible effect on quality, cost, and efficiency. In such a research design, it may be possible to measure the differences in quality, cost, and efficiency of healthcare service delivery over time. Researchers would need to also have some measure of how integrated the EHR has become in the hospital processes and workflows to attribute the changes to EHR use and not to other process improvement or quality improvement initiatives occurring at the hospital.

Limitations

There are several limitations to this study. First, the risk adjusted mortality rates for the disease conditions of interest come primarily from New York State. Because the data come primarily from a single state, and that state imposes certain regulations on its healthcare organizations not imposed by other states (e.g., CON constraints), the generalizability of these results may be limited to states with similar regulatory and patient population characteristics. Second, this analysis is limited to New York State hospitals and is based on adult inpatient discharges for the year 2014. The findings must be viewed through this lens. For example, outpatient discharges and inpatient discharges of children are not included in the data set, and the findings cannot be automatically generalized to those populations.

References

© 2019 Eduard Babulak. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
