COJ Robotics & Artificial Intelligence

Can we Leave Care to Robots? An Explorative Investigation of Moral Evaluations of Care Professionals Regarding Healthcare Robots

Margo AM van Kemenade1*, Elly A Konijn2 and Johan F Hoorn3

1Department of Applied Science, Netherlands

2Department of Communication Science, Netherlands

3Department of Computing and School of Design, Netherlands

*Corresponding author: Margo AM van Kemenade, Department of Applied Science, Netherlands

Submission: December 15, 2020; Published: February 17, 2021

ISSN: 2832-4463 Volume 1 Issue 3

Abstract

Through a qualitative examination, we researched the moral evaluations of Dutch care professionals regarding healthcare robots for eldercare in terms of biomedical ethical principles and non-utility. Results showed that care professionals primarily focused on maleficence (potential harm done by the robot), deriving from diminishing human contact. Worries about potential maleficence were more pronounced among professionals with intermediate education than among those with higher education. However, both groups deemed companion robots more beneficial than devices that monitor and assist, which were deemed potentially harmful physically and psychologically. The perceived utility was not related to the professionals’ moral stances, countering prevailing views. Increasing patients’ autonomy by applying robot care was not part of the discussion, and justice as a moral evaluation was rarely mentioned. Awareness of the care professionals’ point of view is important for policymakers, educational institutes, and developers of healthcare robots, so that designs can be tailored to the wants of older adults along with the needs of the much-undervalued eldercare professionals.

Introduction

According to the European Commission [1], the number of older adults, aged 80 years and older, will increase by 170% in 2024. The EU’s old-age dependency ratio has been increasing for a long time. This ratio is traditionally seen as an indication of the level of support available to older persons (those aged 65 or over) from the working-age population (those aged between 15 and 64). In the EU, the old-age dependency ratio was 29.9% in 2017 [1]. Older adults must stay independent longer than before because it has become harder for them to register for a nursing home. Today, an individual needs a strong indication from a doctor to apply for a stay in a nursing home [2]. Although older persons prefer to live independently in their homes, they might need additional assistance [3]. ActiZ [4], an organization of healthcare entrepreneurs, claims that, since 2015, 322,000 older adults have been unnecessarily admitted to a hospital or residence, resulting in avoidable stays. Pilot projects that employ the unemployed to alleviate the care pressure are met with great concern, as unqualified and possibly unmotivated individuals would be attending vulnerable people [5]. A way forward could be the utilization of innovation and new media technologies to ensure the quality of healthcare. Research shows that the implementation of a healthcare robot could offer part of a solution [6-8], although specific ethical considerations and moral dilemmas must be made clear. Wijnsberghe [9] stated that “the prospective robots in healthcare intended to be included within the conclave of the nurse-patient relationship require rigorous ethical reflection to ensure their design and introduction do not impede the promotion of values and the dignity of patients at such a vulnerable and sensitive time in their lives. The ethical evaluation of care robots requires insight into the values at stake in the healthcare tradition.” A robot could fill the gap between the need for and the supply of healthcare. On the one hand, robots are already used in hospitals, most often to assist with surgery [10]. On the other hand, robots employed in care instead of cure, for instance, social robots that can talk, express emotions, and converse with people to accompany lonely older adults, are considered a novelty and are the subject of this study (specified further on).

Although the patient perspective is most important, older adults are not the only stakeholders in the healthcare system. Care professionals will also work with robots and must form an effective team with them to serve older adults optimally. So far, there has been little attention to the attitudes of care professionals towards healthcare robots [11], whereas patients’ views are frequently recorded [12]. Several authors [3,6,9,11,13] have discussed the effects of social and assistive healthcare robots on patients, but the effect they may have on professionals who work with the technology is understudied. Hence, the focus of the current study is how care professionals in eldercare perceive the introduction of healthcare robots. Among other things, we wanted to know whether the ethical evaluation by care professionals of healthcare robots for older adults might be biased by evaluations of utility. For example, will perceived utility have an influence on the professionals’ moral stance? A widely embraced ethical theory today is utilitarianism, suggesting that whatever produces the most utility is the morally best thing to do [14]. Hence, it could be expected that when the perceived utility of a care robot is high, the moral concerns of the caregivers vanish. In other words, the robot may be considered morally reprehensible by caregivers, but may still be evaluated as useful, for instance, when more older adults can receive care through the use of healthcare robots. A thorough understanding of the wishes and objections of professional users can contribute to a successful implementation of robots in healthcare, serving the older adults best by taking potential moral objections of the professionals into account during early stages of development.

Problem statement and observations from the public debate

From our pre-research phase, we learned that a pessimistic point of view prevailed; public debate stated that healthcare robots could never provide meaningful care. For example, online reactions to a newspaper article about a healthcare robot in our research [15] varied from “What a ridiculous idea! Robots for physical labour, okay, but for the more social care tasks, you really need a human” to “What a horrible thing. Who came up with this? Who wants a machine to take care of older adults?” We aimed to examine such views in a more scientific way. Thus, we used expressions of social concern and potential hazard about healthcare robots coming from general media as a starting point underlying our choice to study this particular research group. Therefore, our main question is: According to care professionals, is it acceptable to leave care to the responsibility of robots? To measure the moral attitudes of caregivers, we translated the possible moral evaluations into the principles of biomedical ethics as stated by Beauchamp et al. [16]. In both clinical medicine and scientific research, it is held that these principles can be applied, even in unique circumstances, to provide guidance in discovering moral duties within a situation [17]. Beauchamp et al. [16] proposed a system of four moral principles to reason from values towards judgments in the practice of medicine.

A. Non-maleficence is understood as the doctrine of “first, do no harm,” which means that no treatment is better than doing something wrong.

B. Autonomy is the capacity of patients to make an informed, un-coerced decision about their care.

C. Justice pertains to the fair distribution of scarce health resources (e.g., time, attention, and medication).

D. Beneficence means acting in the best interests of one’s patients.

We applied these principles to the introduction of healthcare robots in eldercare. Additionally, for our research purposes, we translated these four biomedical principles into the following questions:

a) Does it do harm? (non-maleficence),

b) Does the patient benefit from the treatment? (beneficence),

c) Is the patient’s autonomy increased? (autonomy), and

d) Is the treatment fair and available for all? (justice).

On the other hand, if care professionals would see the benefits of robots in daily practice, we expect their moral evaluations to be more nuanced. This expectation is based on the concept of utilitarianism [14]. In other words, if the robot is perceived as useful, then care professionals are likely to evaluate care robots positively. Thus, when the perceived utility is high, moral concerns about potential maleficence will be fewer. Therefore, we also examined how expected usefulness or inefficiency would compare to the moral evaluations of care professionals. For our research purposes, we chose to classify care robots according to Sharkey et al. [18]. These authors proposed that robots in care can be divided into three categories.

The three main ways in which robots might be used in eldercare are:

a) To assist older adults and/or their caregivers in daily tasks;

b) To help monitor their behavior and health; and

c) To provide companionship [18].

Following these ideas, our research question is stated as: How do professional caregivers in eldercare evaluate assistive, monitoring, and companion robots in terms of the four biomedical principles as stated by Beauchamp and the possible utility a care robot could provide?

Methods

Participants and procedure

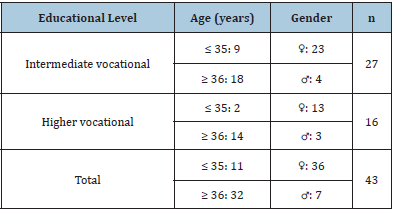

We applied the VU University’s “code of ethics for research in the social and behavioral sciences involving human participants” [19] in designing and executing this study. Our methods have addressed all ethical considerations and are in compliance with this guideline. We wanted to ask care professionals directly about their moral assessment of care robots. To make them feel at ease and keep their thoughts fluid, we conducted semi-structured focus group sessions in the habitual work environment of the participants. For triangulation purposes, prior to the focus groups, we performed some pretests, including two test focus groups with different participants and two in-depth interviews with caregivers, to ensure the research material and questions were useful and comprehensible for discussion for all the participants. These pretests were all recorded and led to some minor adjustments in the research protocol. The final participants in the focus groups included 43 professional caregivers (Table 1) distributed over six focus sessions (five to ten participants each), each lasting for three hours.

Table 1: Relevant characteristics of the participants in the focus groups.

Our inclusion criteria were: working in a nursing home taking care of patients suffering from dementia, speaking Dutch, and having no prior experience with any kind of robot-technology. Since it is important to know how variables like age, religion, experience or education would possibly influence the attitudes of caregivers, we decided to not select on these criteria but include them in the analyses. Participants were encouraged to speak freely about the use and apparent utility of the robot technology (as presented in the materials section). They were also encouraged to express their ethical concerns. Effort was made to ensure that everybody could talk freely.

Data collection and materials

Prior to the focus group sessions, participants were asked to answer questions in a paper-and-pencil questionnaire about their gender, age, religion, care setting, number of years worked in the care domain, number of hours worked per week, education, and acquaintance and affinity with new technology as exemplified by their use and replacement behaviour of cell phones and computers. The researchers pre-categorized the demographics to make cross-references comprehensible (Tables 2 and 8). We recorded all focus groups, resulting in nearly 12 hours of raw data during which care professionals discussed the implications of the demonstrated use of care robots.

At the start of each focus group, participants were reassured that their answers were confidential and would be processed anonymously for research purposes only. Next, participants were shown video clips of specific care robots, as described below. Six video clips of care robot prototypes were used to ensure that all participants saw the same materials. We compared a variety of robot types (i.e., assisting, monitoring, and companion robots; see below) to explore how the care professionals would differ in their evaluation of each type. After each clip, the videotape was stopped, and a discussion took place. What did the participants think about the shown technology? If this robot were available, would they use it? If not, what would be their considerations? Would the shown robot come in handy in daily care practice? And if it did come in handy, would they use it, or were there other deliberations that would still account for not wanting to use this type of care robot? Effort was made to ensure that all participants felt that they could talk freely and voice all the concerns about future use they might have. All six focus groups were videotaped throughout the whole session, thus yielding approximately 12 hours of raw data. Once the session leader felt that no new arguments on a certain type of robot were given, the session was ended for that part. The first and second video clips and discussions concerned assistive robots, the third and fourth concerned monitoring robots, and the final two clips presented different companion robots. The videos the participants viewed, in order, were as follows:

Assistive robots:

a. Panasonic hair-washing robot.

b. Riba II Care Support Robot for Lifting Patients.

Monitoring robots:

a. Mobiserv, a robot equipped with cameras and touch sensors to help structure the day.

b. NEC PaPeRo (Partner-Type Personal Robot), a telecommunication robot.

Companion robots:

a. Fujitsu’s teddy bear robot.

b. AIST Paro, a therapeutic robot baby harp seal.

Three independent raters, not present at the focus groups, were trained to obtain a common understanding of the coding sheet and then worked independently, using the ATLAS.ti software package, to code the remarks from the video data. Prior to this coding, we established inter-rater reliability to verify the extent to which the three independent raters evaluated the discussions consistently. To do this, Cohen’s kappa coefficient was calculated for each pair of raters per time slot. That is, video footage of the sessions was broken down into segments of about two minutes each. Before starting the actual frequency count and categorization, twenty segments were randomly drawn from the sessions’ video footage to establish Cohen’s kappa among the three raters per epoch. Cohen’s kappa was never smaller than .71, indicating satisfactory reliability of the coding. The three raters could now safely code the 12 hours of raw data independently. The raters worked straight from the video footage of the focus sessions. The codes that the raters used would lead to the dominant remarks regarding potential maleficence, autonomy, justice, and beneficence. They also coded statements about potential utility or non-utility. Remarks about healthcare robots that did not fit into one of the above categories were coded as “miscellaneous”. They were put in a separate file and not considered for further analysis.
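As an illustration only, the pairwise reliability check described above could be sketched as follows. This is not the authors' actual procedure or software: the function names, the rater labels, and the four example segment codes are hypothetical, and the sketch assumes one category code per rater per video segment.

```python
from collections import Counter
from itertools import combinations


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who coded the same segments."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must code the same non-empty set of segments")
    n = len(labels_a)
    # Observed agreement: share of segments that received the same code.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one and the same category throughout
    return (observed - expected) / (1 - expected)


def pairwise_kappa(codes_by_rater):
    """Kappa for every pair of raters, keyed by (rater, rater)."""
    return {
        (r1, r2): cohens_kappa(codes_by_rater[r1], codes_by_rater[r2])
        for r1, r2 in combinations(sorted(codes_by_rater), 2)
    }


# Hypothetical codes for four segments (not data from the study).
example = {
    "rater_1": ["maleficence", "beneficence", "utility", "maleficence"],
    "rater_2": ["maleficence", "beneficence", "utility", "beneficence"],
    "rater_3": ["maleficence", "beneficence", "utility", "maleficence"],
}
kappas = pairwise_kappa(example)
lowest = min(kappas.values())  # the study reports that this never fell below .71
```

In a setup like this, the lowest pairwise value is the figure that would be compared against the reliability threshold.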

To examine whether the combination of variables would lead to other moral evaluations regarding the three different care robots, tables were created for cross-referencing between all background variables. To avoid an uneven distribution of remarks coming from talkative persons, the raters were instructed to record only one dominant remark per person per care robot. This dominant remark was established by counting the most common remarks given by one person on the subject of one care robot. For instance, if a caregiver, when discussing a particular robot type, expressed three remarks pointing to potential maleficence and one remark pointing to possible beneficence, maleficence was recorded as the prevailing attitude. Since we had shown six care robots, a total of six remarks could come from one person. By limiting the total number of remarks per person, we were able to safely compare the different categories to see which attitudes prevailed and to link these with demographics. A total of 102 remarks were coded. To make comparisons easier, we coded not only the absolute number of times a remark was mentioned but also translated these absolute figures into percentages to get a proportional view of the matter (Tables 2-8).
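The dominant-remark rule can be sketched in a few lines. The sketch below is a hypothetical reconstruction, not the raters' actual coding sheet: participant identifiers and the data layout are invented for illustration, and because the text does not say how ties were handled, ties are simply flagged as `None` (an assumption).

```python
from collections import Counter, defaultdict


def dominant_remarks(coded_remarks):
    """Reduce raw codes to one dominant code per (participant, robot) pair.

    coded_remarks: iterable of (participant, robot, code) tuples.
    """
    grouped = defaultdict(list)
    for participant, robot, code in coded_remarks:
        grouped[(participant, robot)].append(code)

    dominant = {}
    for key, codes in grouped.items():
        (top_code, top_count), *rest = Counter(codes).most_common()
        # Assumption: a tie for first place is left undecided (None),
        # since the source does not describe a tie-breaking rule.
        if rest and rest[0][1] == top_count:
            dominant[key] = None
        else:
            dominant[key] = top_code
    return dominant


# Hypothetical remarks mirroring the example in the text:
# three maleficence remarks and one beneficence remark about one robot.
remarks = [
    ("P01", "Riba II", "maleficence"),
    ("P01", "Riba II", "maleficence"),
    ("P01", "Riba II", "beneficence"),
    ("P01", "Riba II", "maleficence"),
    ("P02", "Paro", "beneficence"),
    ("P02", "Paro", "utility"),
]
result = dominant_remarks(remarks)
```

With six robots shown, a participant contributes at most six entries to the result, which is what keeps talkative participants from dominating the counts.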

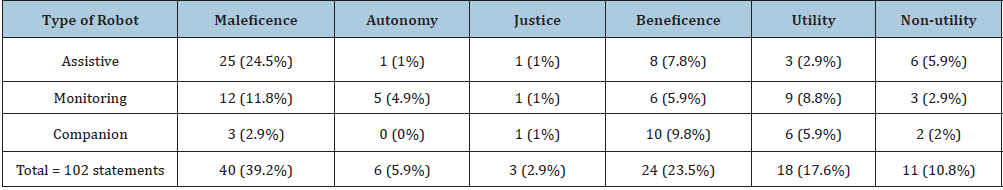

Table 2: Frequencies and percentages of moral and utility statements over all focus groups.

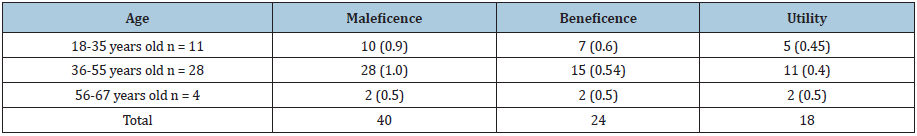

Table 3: Frequencies of moral and utility statements per age category. The mean number of statements per person is included in parentheses.

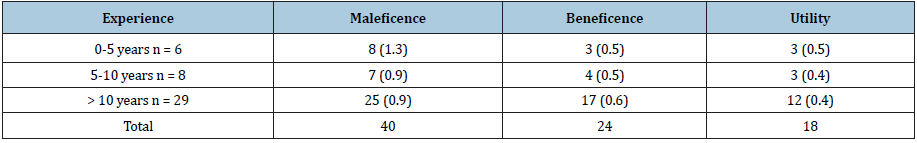

Table 4: Frequencies of moral and utility statements per years-of-experience category. The mean number of statements per person is included in parentheses.

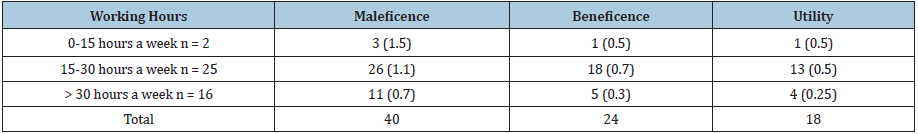

Table 5: Frequencies of moral and utility statements per working hours. The mean number of statements per person is included in parentheses.

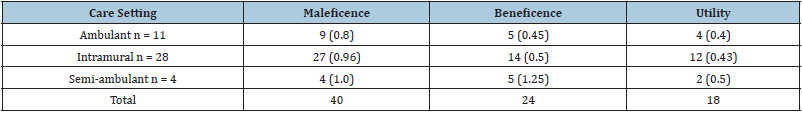

Table 6: Frequencies of moral and utility statements per care setting. The mean number of statements per person is included in parentheses.

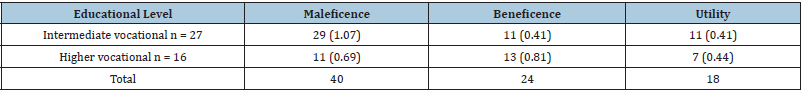

Table 7: Frequencies of moral and utility statements per educational level. The mean number of statements per person is included in parentheses.

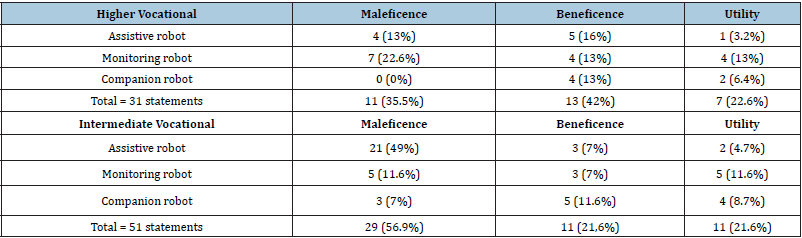

Table 8: Frequencies and percentages of moral and utility statements of higher and intermediate vocational educated care professionals for each robot type.

Results

The analyses included 43 participants, of whom 83.7% were women and 16.3% were men. Most participants were older than 36 years of age and had intermediate vocational training (Table 1). Because the different groups had different sample sizes, we calculated the mean number of statements per person (e.g., 10 statements/11 people = 0.9 statements per person). Based on these relative numbers, we could make comparisons between those groups to see which group was more concerned about what. Table 2 shows the number of times a statement was made about a healthcare robot according to the coding of moral evaluations. Overall, maleficence was identified 40 times, followed by beneficence (24), utility (18), non-utility (11), autonomy (6), and justice (3). For companion robots, maleficence was mentioned least (3) and beneficence most (10).

To determine the degree to which the discussion covered a topic, we calculated relative percentages per cell in Table 2. Table 2 suggests that the moral assessments of care robots as expressed by care professionals focused primarily on maleficence, whereas patient autonomy and justice were rarely mentioned. Since there were too few remarks on autonomy, justice, and non-utility, we decided to exclude them from further analyses, leaving three variables under study. Remarks on potential maleficence, potential beneficence, and potential utility were cross-analysed with the three types of robots and the demographics, rendering the results in Tables 3-8. Because we had little male participation (16.3%), we could not specifically analyse gender.
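The two normalizations used in this section, statements per person and shares of all coded remarks, amount to simple arithmetic. The sketch below uses only the overall counts quoted in the text; the function names are hypothetical, and the percentages it produces are overall shares that need not match the per-cell percentages printed in Table 2, which may use different denominators.

```python
# Overall counts as quoted in the text (102 coded remarks in total).
counts = {
    "maleficence": 40,
    "beneficence": 24,
    "utility": 18,
    "non-utility": 11,
    "autonomy": 6,
    "justice": 3,
}


def shares(category_counts):
    """Percentage of all coded remarks per category, rounded to one decimal."""
    total = sum(category_counts.values())
    return {name: round(100 * n / total, 1) for name, n in category_counts.items()}


def mean_per_person(n_statements, n_people):
    """Mean number of statements per person in a subgroup."""
    return n_statements / n_people


percentages = shares(counts)
example_mean = mean_per_person(10, 11)  # the worked example from the text, about 0.9
```

Dividing by subgroup size is what allows subgroups of unequal size to be compared on how often a type of remark was made.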

Demographics in relation to moral statements

To examine how combinations of variables would lead to different moral evaluations, tables were created to make cross-references with the background variables (Tables 3-8). The tables are based on absolute and proportional representations of participants’ statements related to two of the principles of Beauchamp et al. [16] and their statements on utility. In Table 7, the care professionals with an intermediate educational level mentioned maleficence the most, while those with a higher vocational background mentioned beneficence more often. Utility was about equal in the discussions. To further explore the different educational levels, we crossed higher and intermediate vocational care professionals with robot types to investigate who fears which robot the most or sees the most use (Table 8).

Overall, the key focus of discussion among the care professionals was the potential of robots for maleficence (Table 2). Participants were concerned that robots may do harm. Surprisingly, patient autonomy was rarely mentioned. Care professionals between 36 and 55 years of age mentioned maleficence more often than the younger and older participants (Table 3). Care professionals with five years of experience or less mostly deliberated about the maleficence of robots and less about beneficence and utility (Table 4). The same occurred for care professionals with the fewest working hours per week (0 to 5 hours; Table 5). Semi-ambulant care professionals mentioned beneficence and utility of care robots the most (Table 6). Intermediate vocational care professionals mentioned maleficence the most (Table 7), particularly for assistive robots (Table 8). Higher vocational care professionals mentioned maleficence the most in response to monitoring robots (Table 8). For both groups, companion robots did not evoke discussions about potential maleficence (Table 8).

Discussion and Conclusion

We found that maleficence dominated the discussion in nearly every configuration in which we analysed the data (Tables 2 and 8). The only exception was for companion robots (Table 8), which were seen not as harmful but as beneficial. Almost all respondents found Paro, the robot seal, very touching and could imagine themselves working with it. It could act as a supplement to get patients with dementia out of their anxiety or agitation or to entertain them. Moreover, they expected that the patients themselves would like Paro very much. The way patients would react to and perceive the robots turned out to be one of the determining elements for the acceptance of companion robots in the caregivers’ workplace. As one respondent said: “I would say that if the patient comes first, our perception is secondary.” Thus, whether harm is perceived also depends on the type of robot.

Our data showed that evaluations of autonomy and justice were negligible, meaning they were hardly ever mentioned during the care professionals’ deliberations about care robots (Table 2). Importantly, care professionals may have a different view of autonomy than patients. Consider, for instance, an assistive washing robot that enables an elderly patient to wash without any assistance. A healthcare professional indicated: “we know how to react to the elderly and the elderly will feel more comfortable with someone who he/she can trust, during a bathing situation, when somebody is naked. A robot could never replace a human, because the robot could not provide that feeling of trust.” On the other hand, one could argue that enabling a patient to bathe independently, or freeing the patient from dependence on the availability of a care professional, enhances the patient’s autonomy. Our participants, however, did not express this as a potential advantage. Returning someone to a state of greater independence is certainly compatible with autonomy; however, following Sorell [20], the question is whether it is compatible with autonomy for a care robot to coerce someone to adhere to regimes that will return them to greater independence. Jenkins [21] concluded that older people’s autonomy can be limited in the short term in order to protect their longer-term autonomy.

Our participants expressed few thoughts concerning the principle of justice, which governs the fair distribution of scarce resources as well as the attribution of responsibility and liability when something goes wrong [22]. When a robot does not behave as intended, it could be the result of human error or robot malfunctioning [23]. Others may feel that robots are so expensive that only a few may benefit, thus bringing unequal distribution of care and attention. Feelings about a violated principle of justice were not expressed. We found that particularly companion robots were regarded as beneficent and non-maleficent (Table 8). Although maleficence dominated the discussions, beneficence was not absent and was sometimes prominently present. Utilitarianism states that, when the perceived utility of a care robot is high, moral concerns would subside. However, we did not observe this relationship. Perceived utility was high, as was the fear of maleficence. The notion of non-utility was negligible (Table 2). Participants stated that, as a condition, the robot should only support them instead of replacing them. They want to be able to determine when to employ the robot and to use it as a tool, rather than having the robot perform tasks automatically. It could be very useful to have a robot that could lift somebody from the ground, but as a participant explained: “I have had patients who did not want to sleep in bed, but on the ground. And if you have a robot that always picks up somebody then you keep being busy. Besides, imagine that someone fell, then it is not always a good idea to lift somebody.” Feeling responsible is not the only reason why caregivers would like to see the care robot as a supporting tool instead of a replacement. They pointed out that with patients suffering from dementia, the emotional state can change suddenly and that it is important to reconsider the use of the care robot in every situation.

We conducted focus groups with care professionals to understand their ethical and utility considerations about robot technology in care. It is important to complement knowledge about the patient perspective with the views of care professionals who, together with the robot, should form the best possible team to serve older adults optimally. The results showed that the main concern of the care professionals under study was the potential maleficence of assistive machines (i.e., physical damage such as dropping, or emotional damage such as loneliness). Caregivers felt very responsible for their patients, so not being there with the patients when the robots executed tasks was not an idea caregivers embraced. As one respondent admitted: “If something goes wrong [with my patients], I will feel very guilty.” This proved to be less of a concern with respect to monitoring devices and of least concern with companion robots. In fact, companion robots were regarded as the most beneficial of the three types of robots reviewed. With companion robots, participants saw opportunities to finish additional tasks, like administration or doing the dishes (which belong to the nursing home caregivers’ tasks), without having the feeling that they were falling short towards their patients, because they were close by. With respect to monitoring robots (cf. tele-presence), caregivers recognized that this type of robot could alleviate the workload. Nonetheless, concerns about loneliness and other adverse effects on the patient prevailed: “I see my patients once a day in their own homes. Sometimes I am the only one they see throughout the whole day. If even I would stop coming, they will be so lonely.” Surprisingly, privacy issues were not mentioned but rather the deterioration of human contact. Care providers are afraid of losing personal interaction with the patients. For instance, Sharkey et al. [18] found that caregivers fear that through robots, detailed and caring human interaction is lost.

Having contact and interaction with elderly patients was, for the greater part of our participants, the most valuable element of their job. Employing a robot could deprive elderly patients and caregivers alike of even more human contact. Depriving people of human contact was seen as potentially maleficent, thus explaining the main concern of the care professionals under study. Diminishing human contact is, on a societal scale, frequently seen as a threat to humanity [24]. Good care is adding humanity to care. One participant even had her own formula for good care: “Good care is 50% being professional and 50% empathy”. Humanity, to participants, had to do with emotional dedication. It was about sympathizing with the patients’ emotions, providing the right support at the right moment, and being interested in your patients. Companion robots were not seen as violating this notion of good care, whereas monitoring and assistive devices could diminish contact between patients and care providers and, as a result, human contact overall.

Although the care professionals saw benefits with companion robots, the literature thus far has expressed concerns about deception and dignity [9,12,13,22,25]. However, none of our professional participants expressed such objections, as they considered the use of companion robots to be “good care” and beneficial for patients, for it did not diminish human contact. From the discussion, the following quote is illustrative: “It is true that you are misleading your patient, but I see no harm in that. After all, we allow our children to carry their cuddly toys around everywhere, so we must allow our older adults to do the same if they want to.” Discussing the demographics of the care professionals studied, we observed that caregivers with less experience (Table 4), small contracts (Table 5), and lower education (Table 7) were more concerned about potential harm of the robot than, for example, semi-ambulant professionals (Table 6), who focused more on beneficence. When we zoomed in on the differences between higher and intermediate vocationally trained care professionals (Table 8), we saw that the intermediate group was more negative about robots than the higher educated group. It might be that the care professionals in our sample, particularly the lower educated, underestimated the possibilities of healthcare robots.

Whereas intermediate vocational care professionals were

focusing on maleficence as the leading ethical principle, the care

professionals who received a higher level of education seemed to

be led by potential beneficence. This information could be useful to

educational institutes in the care domain, since knowledge on the

ethical and practical implications of working with robots seems to

lessen the fear for this kind of technology [26]. When concluding,

however, we still need to be careful about the robustness of the

results. Because of the nature of the focus group methodology

and a relatively small sample size, we should consider these

results as tentative and as directions for future research. More

robust methodological and statistical techniques, requiring larger

sample sizes, should deal with the reliability and validity issues

encountered in the current exploration. In summary, the current

study provides preliminary insights into the moral objections as

well as the unexpected approval of care professionals to companion

robots. We aimed to understand care professionals’ evaluations

and concerns about different forms of robots in daily care practice

as they are important stakeholders in the use of robots for older

adults who need care. Results showed that few concerns arise with

machines for companionship that satisfy the need for relatedness,

specifically among the lonely. Assistive and monitoring devices are

deemed potentially harmful, both physically and psychologically.

Our conclusion posits that specific robot technology may not

dehumanize care but could rather bring back meaningful

relationships into professional health care [27]. This knowledge

could contribute to a successful implementation

of robots in healthcare, serving the older adults best by taking

potential moral objections of the professionals into account during

early stages of development. Developers of healthcare robots

should tailor designs to the wants of older adults as well as to the

needs of our much-undervalued eldercare professionals so they

can serve older adults the best they can with the help of new robot

colleagues [28].

Acknowledgement

This study is part of the SELEMCA project (Services of Electromechanical Care Agencies; grant number: NWO 646.000.003), which was funded within the Creative Industry Scientific Programme (CRISP). CRISP was supported by the Dutch Ministry of Education, Culture and Science. We are grateful to the participants in this study for their precious time, thoughts, and feelings. The contribution of the first author was fully funded by Inholland, University of Applied Sciences. We would like to thank the Medical Technology Research Group of Inholland for proof-reading earlier drafts. We would also like to thank the three coders (Wendy Bruul, Josien van Dijkhuizen, and Chanika Mascini) and Maartje Verheggen for input on the qualitative aspects of this study. We further acknowledge the researchers of the SELEMCA group for their critical and constructive remarks on earlier draft versions of this paper. The authors declare that there is no conflict of interest. We applied the VU University’s “code of ethics for research in the social and behavioral sciences involving human participants” in conducting our study. Our methods have addressed all ethical considerations and are in compliance with this guideline.

References

- https://ec.europa.eu/eurostat/web/products-eurostat-news/-/DDN-20180508-1

- https://ec.europa.eu/health/sites/health/files/state/docs/chp_nl_english.pdf

- Smarr C, Prakash A, Beer J, Mitzner T, Kemp C, et al. (2012) Older adults’ preferences for and acceptance of robot assistance for everyday living tasks. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 56(1): 153-157.

- ActiZ (2018) ActiZ calls on ministers to organize acute care for vulnerable elderly people differently.

- Koster DY (2012) Doubts about the use of unemployed people in home care. Domestic Administration.

- Loos HV, Reinkensmeyer D, Guglielmelli E (2016) Rehabilitation and health care robotics. Springer Handbook of Robotics. Springer, Germany, pp. 1685-1728.

- Wood C (2017) Barriers to innovation diffusion for social robotics start-ups: And methods of crossing the chasm.

- Malehorn K, Liu W, Hosung I, Bzura C, Padir T, et al. (2012) The emerging role of robotics in home health. Proceedings of the Eighteenth Americas Conference on Information Systems.

- Wijnsberghe A (2012) Designing Robots for care: Care centered value-sensitive design. Science and Engineering Ethics 19(2): 407-433.

- Archibald M, Barnard A (2018) Futurism in nursing: Technology, robotics and the fundamentals of care. Journal of Clinical Nursing (11-12): 2473-2480.

- Ienca M, Jotterand F, Vica C, Elger B (2016) Social and assistive robotics in dementia care: Ethical recommendations for research and practice. International Journal of Social Robotics 8(4): 565-573.

- Broadbent E (2017) Interactions with robots: The truths we reveal about ourselves. Annual Review of Psychology 68: 627-652.

- Sharkey A, Wood N (2014) The Paro seal robot: Demeaning or enabling? Proceedings of AISB 2014, London.

- Kaneko Y (2013) Three utilitarians: Hume, Bentham and Mill. Journal of Ethics, Religion and Philosophy 24(3): 65-78.

- Karimi A (2012) Care robots are advancing. Metro.

- Beauchamp T, Childress J (2013) Principles of biomedical ethics. Oxford: Oxford University Press, UK.

- McCormick T (2013) Principles of bioethics. Ethics in Medicine. Washington: University of Washington, USA.

- Sharkey A, Sharkey N (2012) Granny and the robots: Ethical issues in robot care for the elderly. Ethics and Information Technology 14(1): 27-40.

- https://www.fgb.vu.nl/en/Images/ethiek-reglement-adh-landelijk-nov-2016_tcm264-810069.pdf

- Sorell T, Draper H (2014) Robot carers, ethics, and older people. Ethics and Information Technology 16: 183-195.

- Jenkins S, Draper H (2015) Care, monitoring, and companionship: Views on care robots from older people and their carers. International Journal of Social Robotics 7(5): 673-683.

- Broadbent E, Stafford R, MacDonald B (2009) Acceptance of healthcare robots for the older population: Review and future directions. International Journal of Social Robotics 1(4): 319-330.

- Racine L (2016) A critical analysis of the use of remote presence robots in nursing education 8(1).

- Turkle S (2017) Alone together: Why we expect more from technology and less from each other.

- Van Kemenade MA, Konijn E, Hoorn J (2015) Robots humanize care: Moral concerns versus witnessed benefits for the elderly. Proceedings of the 8th International Conference on Health Informatics (HEALTHINF). SciTePress, Portugal, pp. 464-469.

- Burger S (Director) (2015) Alice Cares [Motion Picture].

- Harrison N (2015) Caredroids in health care. The Lancet 386(9990): P235-236.

- Venkatesh V, Morris M, Davis F, Davis G (2003) User acceptance of information technology: Towards a unified view. MIS Quarterly 27(3): 425-478.

© 2021 Margo AM van Kemenade. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
