
COJ Electronics & Communications

Content-Based Natural Images Retrieval using Curvature Histogram Shape Feature

Farnaz Gholipour and Hossein Ebrahimnezhad*

Department of Electrical Engineering, Computer Vision Research Lab, Sahand University of Technology, Iran

*Corresponding author: Hossein Ebrahimnezhad, Department of Electrical Engineering, Computer Vision Research Lab, Sahand University of Technology, Iran

Submission: August 30, 2023; Published: September 14, 2023

ISSN 2640-9739, Volume 2, Issue 5

Abstract

Content-Based Image Retrieval (CBIR) is a technique for retrieving images from a database. In CBIR, a user specifies a query image and obtains the images in the dataset that are similar to it. To find the most similar images, CBIR matches the content of the input image against the dataset images. In this paper, a new method is presented for indexing and retrieval based on a shape feature, which we call the curvature histogram. In the feature extraction stage, a description of the shape is first obtained using a nonlinear diffusion filter. Then, the curvature of the shape curves is calculated, and the histogram of these curvatures is used as the feature. Image retrieval using this method improves the results on the Corel database considerably.

Keywords: Content-based image retrieval; Shape feature; Curvature histogram

Introduction

With the development of new technologies, users can store and retrieve images efficiently. Because digital images are easy to capture and databases are easy to build, the number of images in image databases and on the World Wide Web keeps increasing. Digital images are a fundamental part of our daily communication, but humans cannot manage such large numbers of them. For example, a person searching for an image among a hundred database images may find it quickly just by looking through them; when the number of images is huge, however, this becomes very difficult and time-consuming. This is where an image retrieval system is needed. There are two approaches to searching image databases: text-based and content-based methods. In text-based image retrieval systems, images are first annotated and then searched using their textual labels. This approach is not well suited to retrieval. First, annotation is performed by an operator; besides being time-consuming, it is error-prone, since the operator may make mistakes in the annotation or misspell the descriptive words. Second, words cannot fully describe image content: an image is worth more than a thousand words. Because of the richness of image content and the innate nature of human perception, no textual descriptor can be 100 percent complete and accurate [1]. To overcome these problems, Content-Based Image Retrieval (CBIR) is used. In this approach, manual indexing is replaced by visual content indexing: images are stored and retrieved by automatically extracting features such as color, texture, shape and location.

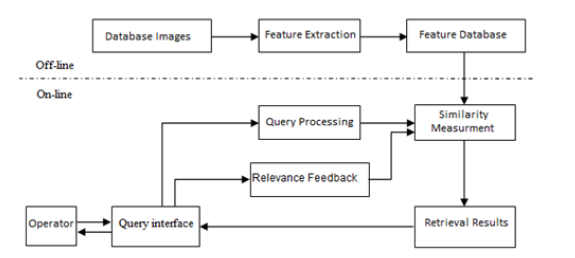

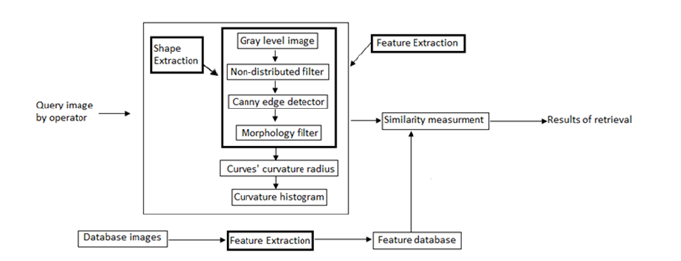

Image retrieval is the process of finding images in a database that are similar to a query image. Typically, this process is performed in two phases: indexing and searching. In the indexing phase, each image in the database is described by a set of image features such as color, shape, texture and location, and the extracted features of all images are stored. In the searching phase, when the operator gives a query image to the system, the feature vector of that image is calculated and compared with the feature vectors of the database images using a similarity measure. The images most similar to the query image are returned to the operator [2]. A complete block diagram of an image retrieval system is shown in Figure 1. The extracted features of the database images are stored in a feature database. The operator gives a query image to the system; the query processing module extracts its features, and the similarity measurement module compares the query feature vector with the database feature vectors and finds the images most similar to the query image. A query interface connects the operator to the retrieval system: it obtains the required information from the operator and displays the results. Relevance Feedback (RF) brings the operator into the retrieval loop, helping the system refine the query toward the image the operator intended. Most CBIR systems use color, texture, shape, edge and location features for indexing. These are known as low-level features and are used in most image retrieval systems.

Figure 1: Block diagram of image retrieval systems.

Color is one of the most important features in human image understanding, and color histograms are widely used for image comparison and retrieval [3]. The Query by Image Content (QBIC) system uses a 256-bin color histogram in RGB space [4]. The image retrieval system in [2] uses a 54-bin color histogram in HSV space for color description. For color feature extraction, Lin et al. [5] first use the k-means clustering algorithm to group all image pixels into 16 clusters and then use the histogram of these 16 colors as the color feature. In [6], a color coherence vector is used to extract color features, and in [7], a dominant color descriptor is used in a quantized color space. The texture features most used in image retrieval systems are spectral features, such as those extracted by Gabor filters [2,8] and wavelet transforms [9,10], and statistical features, such as Tamura texture features [11] and Wold features [12]. In [5], the Color Co-occurrence Matrix (CCM) and the differences between pixel intensities within patterns are used to describe texture. In the MARS system, texture is described by a coarseness histogram, an image directionality histogram and a scalar that measures image contrast [13]. In [14], texture is described by means of contrast in regions [15].

In most cases, operators are more interested in image shape than in color and texture features. However, shape features are more difficult to extract than other image features [16]. Shape descriptors are classified as contour-based or region-based. Contour-based (boundary-based) methods represent a shape by its outer boundary, whereas region-based methods treat the shape as a combination of regions. It is not easy to select a set of features that describes an object well, and many techniques are unsuitable for image retrieval systems because they are either too complex or not meaningful to the human visual system. Therefore, some image retrieval systems use simple, visually meaningful shape features. The QBIC system uses shape area, circularity, orientation and algebraic moments as shape descriptors [4]. The MARS retrieval system describes the boundary using Fourier descriptors [13]. In [17], edge direction correlation is used for shape description. Zernike moments are used in [18,19]. Lee et al. [19] use Hu moment invariants. Park et al. [20] use both Hu moment invariants and Fourier descriptors for feature extraction. In [21], Fourier descriptors and an edge histogram are used. In general, CBIR searches an image database for images whose visual content is similar to a given query image [22].

This process is entirely automated. However, it suffers from the “semantic gap,” the disconnect between the high-level concepts present in images and the low-level attributes that describe them [23]. As a result, irrelevant images may be retrieved. This gap has been the subject of extensive research over the last three decades [24], and numerous techniques have been developed to translate high-level concepts in images into features, which form the core of CBIR. Depending on the extraction technique, features are typically divided into global and local features. Global features, such as color, texture, shape and spatial information, represent the image as a whole. They are faster to extract and to compare, but they cannot distinguish between the background and the object. They are therefore unsuitable for object recognition or retrieval in complex scenes, although they are suitable for object categorization and detection [25]. Kale et al. [25] use the fuzzy Class Membership-Based Retrieval (CMR) framework to improve the retrieval performance of CBIR systems [26]. Hong et al. [26] propose a supervised image retrieval technique, Sparse Discriminant Analysis for Image Retrieval (SDAIR), to address the semantic gap between low-level features and high-level semantics [27]. In this study, the curvature histogram of the image shape is used as the shape feature. First, the shape of the image is extracted; then a histogram of the curvatures of the curves that form the shape is computed and used as the image feature for retrieval. Section 2 describes the proposed curvature histogram, Section 3 presents the experiments and results, and Section 4 concludes the paper.

Curvature histogram

The curvature histogram describes the distribution of curvature over the curves that form the shape of the image.

Image filtering: First, the RGB image is converted to a gray-level image. Then, the image is filtered using nonlinear diffusion, a method for simplifying an image and reducing its noise. This method was introduced by Perona and Malik [28] for edge detection, and Gerig et al. [29] developed other versions of it for other kinds of images, such as texture and color images. The idea of nonlinear diffusion comes from heat transfer. When two metals at different temperatures are joined, the heat flow obeys an equation called the diffusion equation. If this equation is applied to image pixels, treating pixel intensities as the temperatures of points on the metals, the intensities mix so that they (like the temperatures of the metals) reach a balance.
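The heat-transfer analogy can be illustrated with a one-dimensional rod made of two joined pieces at different temperatures. The sketch below is a minimal explicit finite-difference solver for the linear heat equation u_t = u_xx with insulated ends, not the nonlinear filter itself; the step ratio `r` and the number of steps are illustrative assumptions, not values from this paper.

```python
def heat_diffuse_1d(temps, n_steps=2000, r=0.25):
    """Explicit scheme for the linear heat equation u_t = u_xx on a rod.

    Insulated (zero-flux) ends are modelled by reusing the end value as its
    own neighbour. r = dt / dx**2 must not exceed 0.5 for stability.
    """
    if r > 0.5:
        raise ValueError("r = dt/dx^2 must be <= 0.5 for stability")
    u = [float(t) for t in temps]
    n = len(u)
    for _ in range(n_steps):
        new = u[:]
        for i in range(n):
            left = u[i - 1] if i > 0 else u[i]        # zero flux at the left end
            right = u[i + 1] if i < n - 1 else u[i]   # zero flux at the right end
            new[i] = u[i] + r * (left - 2.0 * u[i] + right)
        u = new
    return u


# Two joined "metals": ten cells at 0 degrees next to ten cells at 100 degrees.
rod = [0.0] * 10 + [100.0] * 10
final = heat_diffuse_1d(rod)
print(min(final), max(final))
```

After enough steps every cell approaches the average temperature of 50 while the total heat is conserved. Linear diffusion like this also blurs edges; nonlinear diffusion avoids that by making the diffusivity g small wherever the gradient is large.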

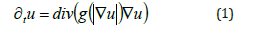

The diffusion equation for a gray-level image is [28]:

where u(t=0) = I is the input image and g is a decreasing function of the gradient magnitude. Another version of this equation has been proposed for vector-valued images [29,30]:

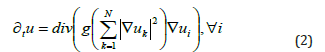

In this equation, u_i is one of the N channels of the input image. Solving this equation produces a feature space that describes the shape of the image. As mentioned before, g is a decreasing function of the gradient of the input image.
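The diffusion filter can be sketched with the classic four-neighbour explicit scheme of Perona and Malik [28], extended to N channels by letting all channels share one diffusivity computed from the summed squared differences, in the spirit of the vector-valued version above. This is a minimal numpy sketch under stated assumptions: the diffusivity g(s) = 1/(1 + (s/λ)²) is Perona and Malik's, swapped in as a placeholder because the exact g used in this paper is given only in the figures, and `n_iter`, `dt` and `lam` (λ) are illustrative values.

```python
import numpy as np


def pm_diffusivity(s, lam):
    """Perona-Malik diffusivity g(s) = 1 / (1 + (s/lam)^2).

    Placeholder choice (not necessarily this paper's g): close to 1 in flat
    regions and small across strong edges; it never exceeds 1.
    """
    return 1.0 / (1.0 + (s / lam) ** 2)


def nonlinear_diffusion(image, n_iter=20, dt=0.2, lam=10.0, g=pm_diffusivity):
    """Explicit 4-neighbour nonlinear diffusion of a gray (H, W) or
    multichannel (H, W, N) image; all channels share one diffusivity."""
    if not 0.0 < dt <= 0.25:
        # With g <= 1, dt <= 0.25 keeps each update a convex combination.
        raise ValueError("dt must lie in (0, 0.25] for a stable explicit scheme")
    u = np.array(image, dtype=float)          # copy, so the input is untouched
    gray = u.ndim == 2
    if gray:
        u = u[:, :, None]                     # treat a gray image as N = 1 channel
    for _ in range(n_iter):
        # Replicated borders give zero flux across the image boundary.
        p = np.pad(u, ((1, 1), (1, 1), (0, 0)), mode="edge")
        c = p[1:-1, 1:-1]
        neighbours = (p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, 2:], p[1:-1, :-2])  # N, S, E, W
        update = np.zeros_like(u)
        for nb in neighbours:
            d = nb - c
            # Channel coupling: the difference magnitude is summed over channels.
            mag = np.sqrt(np.sum(d ** 2, axis=2, keepdims=True))
            update += g(mag, lam) * d
        u = u + dt * update
    return u[:, :, 0] if gray else u
```

For the gray-level pipeline described in this paper, a 2-D gray image is passed; an (H, W, 3) array exercises the coupled multichannel form. Because the flux between two neighbours uses the same diffusivity in both directions, the scheme conserves the mean intensity.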

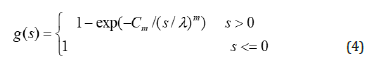

where ε prevents a zero denominator. The g function used in this paper is:

In this equation, the constant C_m is chosen so that the flux s·g(s) is increasing for s < λ and decreasing for s ≥ λ, and λ is a parameter that controls the resolution (level of detail) of the filtered image. Compared with the previous g function, this one is more flexible and has more free parameters.
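The description of C_m and λ matches the diffusivity proposed by Weickert, g(s) = 1 − exp(−C_m / (s/λ)^(2m)) for s > 0 and g(0) = 1. Setting the derivative of the flux s·g(s) to zero at s = λ gives exp(C_m) = 1 + 2m·C_m, which fixes C_m. Assuming this is the function meant here (the equation itself is not reproduced in the text), the minimal sketch below computes C_m by bisection and evaluates g; the default exponent m = 4 is an illustrative choice.

```python
import math


def weickert_constant(m, lo=0.5, hi=20.0, tol=1e-12):
    """Positive root C_m of exp(C) = 1 + 2*m*C, found by bisection.

    With this C_m the flux s*g(s) increases for s < lambda and decreases
    for s > lambda, i.e. it peaks exactly at s = lambda.
    """
    def f(c):
        return math.exp(c) - 1.0 - 2.0 * m * c

    # f(lo) < 0 < f(hi) for every m >= 1, so the root lies in [lo, hi].
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def weickert_diffusivity(s, lam, m=4):
    """Decreasing diffusivity g(s) for a gradient magnitude s >= 0."""
    if s <= 0.0:
        return 1.0                  # no gradient: diffuse at full strength
    x = (s / lam) ** (2 * m)
    if x == 0.0:                    # (s/lam)^(2m) underflowed: effectively flat
        return 1.0
    return 1.0 - math.exp(-weickert_constant(m) / x)


def flux(s, lam, m=4):
    """Diffusion flux s * g(s) across an edge of gradient magnitude s."""
    return s * weickert_diffusivity(s, lam, m)
```

Edges weaker than λ are smoothed (their flux still grows with s), while edges stronger than λ receive less and less flux, which is what lets the filter simplify regions without erasing strong boundaries.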

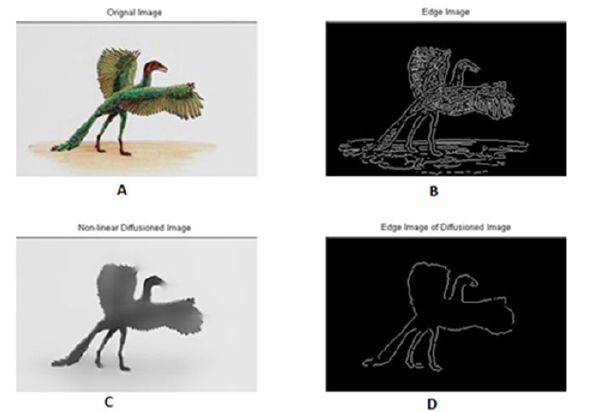

Edge detection: The Canny edge detector is applied to the filtered image to obtain the edges. As Figure 2 shows, the edges are clearer when the nonlinear diffusion filter is applied first.

Figure 2: (A) Original image; (B) result of the Canny operator on the original image; (C) image filtered by nonlinear diffusion; (D) edges extracted from image C.

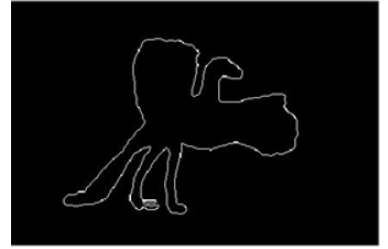

Shape extraction: The edge image obtained from the diffused image is dilated. This fills the gaps between nearby edge curves and yields the shape of the image (Figure 3).

Figure 3: Shape extracted from the image.
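The dilation step can be sketched in a few lines of numpy. The paper does not state the structuring element, so the (2r+1)×(2r+1) square and the `radius` parameter below are assumptions for illustration; the function returns the dilated boolean edge map.

```python
import numpy as np


def dilate(binary, radius=1):
    """Binary dilation with a (2*radius+1) x (2*radius+1) square structuring element.

    Each output pixel is True if any input pixel within the square window is
    True, so gaps of up to 2*radius pixels between edge curves are filled.
    The square shape and radius are assumed, not taken from the paper.
    """
    b = np.asarray(binary, dtype=bool)
    h, w = b.shape
    p = np.pad(b, radius, mode="constant", constant_values=False)
    out = np.zeros_like(b)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out |= p[dy:dy + h, dx:dx + w]   # OR in one shifted copy per window offset
    return out
```

For example, two edge pixels separated by a one-pixel gap become a single connected 3×5 block after dilation with `radius=1`, which is how nearby edge fragments merge into one shape curve.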

After the image shape is extracted, the radii of the curvature circles of the shape curves are computed for shape feature extraction. The curvature circle is the circle that is tangent to the curve on the side toward which the curve bends, and its radius is called the radius of curvature. The curvature circle at a point P is the circle that [31]:

a) is tangent to the curve at P,

b) has the same curvature as the curve at P,

c) lies on the concave (inner) side of the curve at P.

The center of the curvature circle is called the center of curvature [32].
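For a sampled curve, the curvature circle at P can be approximated by the circle through P and its two neighbouring samples; as the samples get closer together, this circle approaches the curvature circle. The pure-Python sketch below is an illustration of the definition, not necessarily the paper's procedure: the hypothetical helper `circle_through` returns the circle's center (the approximate center of curvature) and radius, or `None` for collinear points, whose curvature is zero.

```python
import math


def circle_through(p1, p2, p3, eps=1e-12):
    """Circle through three points: ((cx, cy), radius), or None if collinear.

    Uses the standard circumcenter formula; the radius is the distance from
    the center to any of the three points.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < eps:
        return None  # collinear: zero curvature, infinite radius
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    radius = math.hypot(x1 - cx, y1 - cy)
    return (cx, cy), radius
```

The curvature at P is then approximately 1/radius, so tightly bending curves give small circles and large curvature values.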

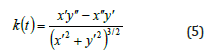

Let a curve be represented as [x(t), y(t)]. The curvature at the point P0 = [x(t0), y(t0)] of the curve is approximated using the following equation [32]:

After the curvatures of the curves that form the shape are calculated, their histogram is used as the shape feature (Figure 4). Horizontal and vertical lines both have zero curvature, yet they describe the shape differently, so they are counted separately in the histogram. The block diagram of the method is illustrated in Figure 5.

Figure 4: Curvature circle [25].

Figure 5: Block diagram of the proposed method.
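Computing curvature along each shape curve and binning it can be sketched as follows. This is a minimal numpy sketch under stated assumptions: each curve is assumed to be available as an ordered sequence of points (the text does not say how curves are traced from the shape image), curvature uses the standard parametric formula κ = (x′y″ − y′x″)/(x′² + y′²)^(3/2) with finite-difference derivatives, and the bin layout (18 curvature bins plus one bin each for straight horizontal and vertical points, giving the 20 values of the feature vector), `kappa_max`, `flat_tol` and `angle_tol` are hypothetical choices, not the authors' settings.

```python
import numpy as np


def curve_curvature(x, y):
    """Signed curvature k = (x'y'' - y'x'') / (x'^2 + y'^2)^(3/2) of a sampled curve.

    Derivatives are estimated with central differences (one-sided at the ends).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = np.gradient(x), np.gradient(y)        # x'(t), y'(t)
    ddx, ddy = np.gradient(dx), np.gradient(dy)    # x''(t), y''(t)
    speed_cubed = (dx ** 2 + dy ** 2) ** 1.5
    return (dx * ddy - dy * ddx) / np.maximum(speed_cubed, 1e-12)


def curvature_histogram(x, y, n_curve_bins=18, kappa_max=1.0,
                        flat_tol=1e-3, angle_tol=np.deg2rad(10.0)):
    """Normalized histogram of |curvature| plus two bins for straight
    horizontal and vertical points (hypothetical layout: 18 + 2 = 20 bins)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    kappa = np.abs(curve_curvature(x, y))
    theta = np.arctan2(np.gradient(y), np.gradient(x))   # tangent direction
    a = np.abs(theta)                                    # in [0, pi]
    a = np.minimum(a, np.pi - a)                         # fold: 0 horizontal, pi/2 vertical
    flat = kappa < flat_tol
    horizontal = flat & (a <= angle_tol)
    vertical = flat & (a >= np.pi / 2 - angle_tol)
    curved = ~(horizontal | vertical)                    # includes oblique straight points
    hist, _ = np.histogram(np.clip(kappa[curved], 0.0, kappa_max),
                           bins=n_curve_bins, range=(0.0, kappa_max))
    feature = np.concatenate([hist, [horizontal.sum(), vertical.sum()]]).astype(float)
    total = feature.sum()
    return feature / total if total > 0 else feature
```

For a whole image, the raw counts of all shape curves would be accumulated before normalizing. Keeping the horizontal and vertical bins separate is what lets, for example, building images with many straight vertical and horizontal edges stand out from images dominated by curved outlines.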

Experiments and Results

This paper uses the query-by-example method. In this method, the operator does not need a specific target in mind: he selects an image and asks for similar images. When the operator gives an image to the retrieval system, the curvature histogram of the image is calculated, and the images most similar to the query image are displayed.

Database

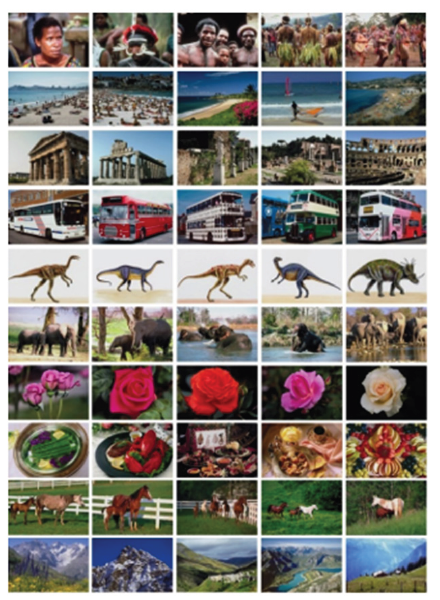

Selecting a proper database is an important step in designing an image retrieval system [33]. There is no standard database or performance evaluation model for CBIR systems [34]. Image classification and image retrieval differ considerably: in image classification, there is a training database and the goal is to identify the class of the query image, whereas in image retrieval there is no training database and the goal is to find images that are similar to the query image. Therefore, a database used for image classification is usually not suitable for image retrieval, and vice versa. More than half of the systems evaluate retrieval performance on a subset of the Corel images [34]. This database is appropriate because of its large number of images and wide variety of content. The Wang database is used in this paper. It is a subset of Corel whose images were selected manually so that there are 10 classes with 100 images in each class. The images are 256×384 or 384×256 pixels in JPEG format. Figure 6 shows sample images from the database; images in each row belong to the same class.

Figure 6: Sample images from the Wang database; images in each row belong to the same class.

Similarity measure

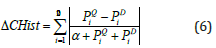

A 20-dimensional feature vector is calculated for each database image. The feature vectors of a database image and of the query image are denoted P^D = {P^D_1, P^D_2, ..., P^D_20} and P^Q = {P^Q_1, P^Q_2, ..., P^Q_20}, respectively. The distance between them is

where α = 1. This measure is simple to compute, which makes it suitable for large databases.
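The distance equation itself is not reproduced in the text. Assuming it is the Minkowski form D(P^D, P^Q) = (Σ_i |P^D_i − P^Q_i|^α)^(1/α), which with α = 1 reduces to the city-block (L1) distance, retrieval amounts to ranking the database vectors by this distance. A minimal pure-Python sketch; `minkowski_distance` and `retrieve` are hypothetical names.

```python
def minkowski_distance(p_d, p_q, alpha=1.0):
    """(sum_i |p_d[i] - p_q[i]|**alpha) ** (1/alpha); alpha = 1 gives the L1 distance."""
    if len(p_d) != len(p_q):
        raise ValueError("feature vectors must have the same length")
    return sum(abs(a - b) ** alpha for a, b in zip(p_d, p_q)) ** (1.0 / alpha)


def retrieve(query, database, k=20, alpha=1.0):
    """Indices of the k database feature vectors closest to the query vector."""
    dists = [minkowski_distance(vec, query, alpha) for vec in database]
    return sorted(range(len(database)), key=dists.__getitem__)[:k]
```

With α = 1 each comparison costs one subtraction and one absolute value per dimension, so scanning all 1000 Wang images with 20-dimensional vectors is cheap even without an index structure.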

Experiments and system efficiency

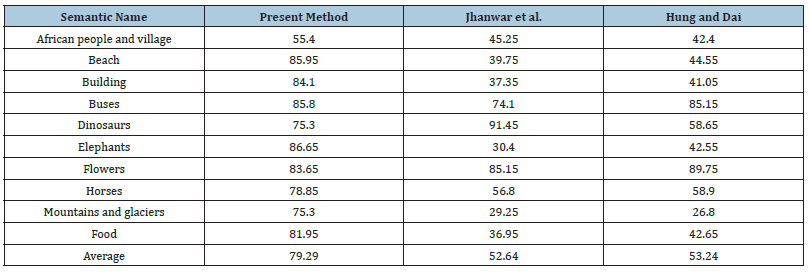

Extracting shape features from natural images is very difficult, and there is no efficient algorithm that extracts the shape of such images properly. The shape extraction method explained in Section 2 gives a description of the image shape. To extract the curvature histogram feature, the shape of the image is obtained first, and then the histogram of the curvatures of the curves that form the shape is computed. Table 1 shows the retrieval results obtained with the curvature histogram.

Table 1: Precision (%) of the retrieval system for k = 20.

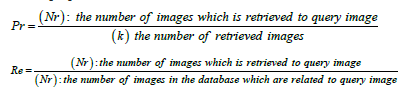

In this research, precision (Pr) and recall (Re) are used to evaluate the image retrieval system. These two criteria are defined as follows [28]:

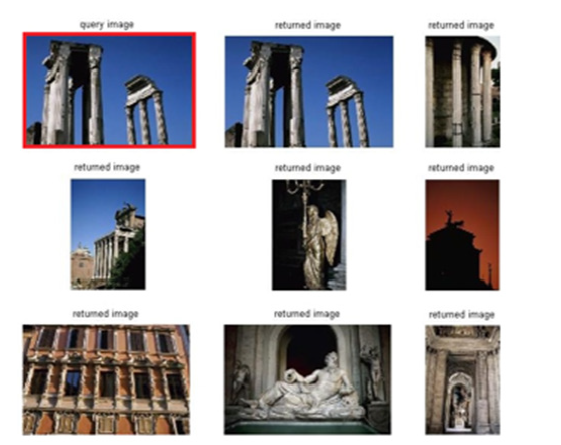

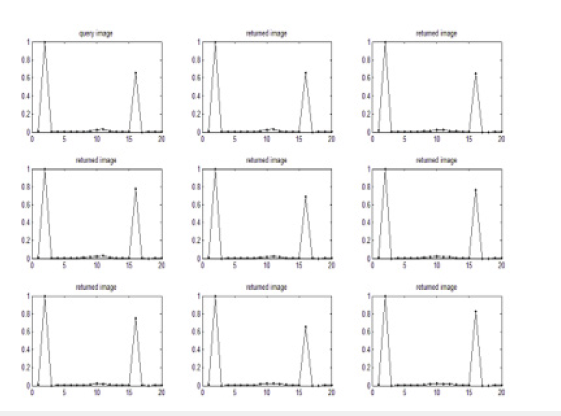

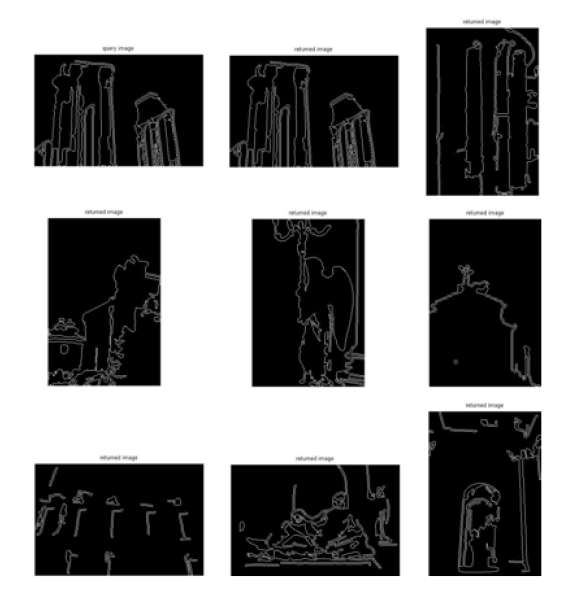
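With the standard definitions, precision is the fraction of the k retrieved images that belong to the query's class, and recall is the fraction of that class's images that were retrieved. A minimal sketch for the Wang database, where each class holds 100 images; the helper `wang_label` is hypothetical and assumes the common 0.jpg to 999.jpg file naming in blocks of 100 per class, and whether the query image itself counts as a hit is a convention the text does not specify.

```python
def precision_recall(retrieved_labels, query_label, n_relevant):
    """Precision and recall for one query.

    Pr = relevant retrieved / retrieved
    Re = relevant retrieved / relevant images in the database
    """
    hits = sum(1 for label in retrieved_labels if label == query_label)
    return hits / len(retrieved_labels), hits / n_relevant


def wang_label(index):
    """Class of a Wang image, assuming files 0.jpg ... 999.jpg in blocks of 100."""
    return index // 100


# Query image 250; 15 of the k = 20 retrieved images come from its class.
retrieved = list(range(200, 215)) + list(range(0, 5))
pr, re = precision_recall([wang_label(i) for i in retrieved], wang_label(250), 100)
print(pr, re)
```

For k = 20 and 100 relevant images per class, recall can never exceed 0.2, which is why precision at a fixed k is the more informative single number for this database.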

Figure 7 shows the retrieval result for a building image. The features of these images are plotted in Figure 8, and Figure 9 shows the shape description of the images in Figure 7. As these images show, building images contain more vertical and horizontal lines than curves. Because the curvature histogram counts such lines separately, the retrieval system can exploit this property to give better results.

Figure 7: An example of image retrieval for the “building” group; the top-left image is the query image and the others are retrieved images.

Figure 8: Curvature histogram features of the images in Figure 7.

Figure 9: Shape description of the images in Figure 7.

Conclusion

In this paper, a new shape feature extraction method, named the curvature histogram, was presented. First, the color image was converted to a gray-level image and smoothed using nonlinear diffusion. Then, the shape of the image was extracted, and the histogram of the curvatures of the curves forming the shape was computed and used as the image feature for retrieval. The curvature histogram describes the distribution of curvature over the curves that form the shape. Using a shape feature makes it possible to retrieve objects with similar geometric shapes even when their color and texture differ. The experiments were performed on the Wang database and compared with the methods of Hong et al. [26] and Jhanwar. The results show that the proposed method considerably increases the efficiency of the image retrieval system. In future work, we intend to apply other geometric features that are robust to non-rigid shape variations.

References

- Siggelkow S (2002) Feature histograms for content-based image retrieval. Freiburg University Library, Germany.

- Nezamabadi Pour H, Kabir E (2009) Concept learning by fuzzy k-NN classification and relevance feedback for efficient image retrieval. Expert Systems with Applications 36(3): 5948-5954.

- Pass G, Zabih R, Miller J (1997) Comparing images using color coherence vectors, pp. 65-73.

- Flickner M, Sawhney H, Niblack W, Ashley J, Huang Q (1995) Query by image and video content: The QBIC system. Computer 28(9): 23-32.

- Lin CH, Chen RT, Chan YK (2009) A smart content-based image retrieval system based on color and texture features. Image and Vision Computing 27(6): 658-665.

- Pass G, Zabih R (1996) Histogram refinement for content-based image retrieval, pp. 96-102.

- Wang XY, Yu YJ, Yang HY (2010) An effective image retrieval scheme using color, texture and shape features. Computer Standards & Interfaces 33(1): 59-68.

- Ma WY, Manjunath BS (1999) Netra: A toolbox for navigating large image databases. Multimedia Systems 7: 184-198.

- ElAlami M (2011) A novel image retrieval model based on the most relevant features. Knowledge-Based Systems 24: 23-32.

- Wang JZ, Li J, Wiederhold G (2001) SIMPLIcity: Semantics-sensitive integrated matching for picture libraries. IEEE Transactions on Pattern Analysis and Machine Intelligence 23(9): 947-963.

- Tamura H, Mori S, Yamawaki T (1978) Textural features corresponding to visual perception. IEEE Transactions on Systems, Man, and Cybernetics 8(6): 460-473.

- Liu F, Picard RW (1996) Periodicity, directionality, and randomness: Wold features for image modeling and retrieval. IEEE Transactions on Pattern Analysis and Machine Intelligence 18(7): 722-733.

- Huang TS, Mehrotra S, Ramchandran K (1996) Multimedia Analysis and Retrieval System (MARS) project, pp. 260-265.

- Carson C, Belongie S, Greenspan H, Malik J (2002) Blobworld: Image segmentation using expectation-maximization and its application to image querying. IEEE Transactions on Pattern Analysis and Machine Intelligence 24(8): 1026-1038.

- Takala V, Ahonen T, Pietikäinen M (2005) Block-based methods for image retrieval using local binary patterns. Image Analysis, pp. 882-891.

- El-ghazal AS (2009) Multi-technique fusion for shape-based image retrieval. University of Waterloo, Canada.

- Mahmoudi F, Shanbehzadeh J, Eftekhari-Moghadam AM, Soltanian-Zadeh H (2003) Image retrieval based on shape similarity by edge orientation auto correlogram. Pattern Recognition 36(8): 1725-1736.

- Chen X, Ahmad I (2007) Shape-based image retrieval using k-means clustering and neural networks. Advances in Image and Video Technology, pp. 893-904.

- Lee XF, Yin Q (2009) Combining color and shape features for image retrieval. Universal Access in Human-Computer Interaction. Applications and Services, pp. 569-576.

- Park JS, Kim TY (2005) Shape-based image retrieval using invariant features. Advances in Multimedia Information Processing-PCM, pp. 146-153.

- Ali F, Hashem A (2020) Content Based Image Retrieval (CBIR) by statistical methods. Baghdad Science Journal 17(2): 694-700.

- Bai C, Chen J, Huang L, Kpalma K, Chen S (2018) Saliency-based multi-feature modeling for semantic image retrieval. Journal of Visual Communication and Image Representation 50: 199-204.

- Shrivastava N, Tyagi V (2017) Corrigendum to content based image retrieval based on relative locations of multiple regions of interest using selective regions matching. Information Sciences 421: 273.

- Ghrabat MJJ, Ma G, Maolood IY, Alresheedi SS, Abduljabbar ZA (2019) An effective image retrieval based on optimized genetic algorithm utilized a novel SVM-based convolutional neural network classifier. Human-centric Computing and Information Sciences 9: 31.

- Kale M, Dash J, Mukhopadhyay S (2022) Efficient image retrieval system for textural images using fuzzy class membership. Multimedia Tools and Applications 81: 37263-37297.

- Hong SA, Huu QN, Viet DC (2023) Improving image retrieval effectiveness via sparse discriminant analysis. Multimedia Tools and Applications 82: 30807-30830.

- Brandt S, Laaksonen J, Oja E (2000) Statistical shape features in content-based image retrieval, Proceedings 15th International Conference on Pattern Recognition, Spain, pp. 1062-1065.

- Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Transactions on Pattern Analysis and Machine Intelligence 12(7): 629-639.

- Gerig G, Kubler O, Kikinis R, Jolesz FA (1992) Nonlinear anisotropic filtering of MRI data. IEEE Transactions on Medical Imaging 11(2): 221-232.

- Rousson M, Brox T, Deriche R (2003) Active unsupervised texture segmentation on a diffusion-based feature space. Proceedings IEEE Conference on Computer Vision and Pattern Recognition 2: 699-704.

- Belyaev A (2004) Plane and space curves. Curvature. Curvature-based features. Max Planck Institute for Computer Science, Germany.

- Nezamabadi-Pour H, Kabir E (2004) Image retrieval using histograms of uni-color and bi-color blocks and directional changes in intensity gradient. Pattern Recognition Letters 25(14): 1547-1557.

- Liu Y, Zhang D, Lu G, Ma WY (2007) A survey of content-based image retrieval with high-level semantics. Pattern Recognition 40: 262-282.

- Deselaers T, Ney IH, Seidl T (2003) Features for image retrieval. Master's thesis, Human Language Technology and Pattern Recognition Group, RWTH Aachen University, Aachen, Germany.

© 2023 Hossein Ebrahimnezhad. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

This work is licensed under a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
