
Significances of Bioengineering & Biosciences

The Socio-Ethical Dynamics of Artificial Intelligence in Healthcare

Ronith Lahoti*

Indus International School Pune, India

*Corresponding author: Ronith Lahoti, Indus International School Pune, India

Submission: March 15, 2024; Published: April 08, 2024

ISSN 2637-8078 Volume 7 Issue 1

Abstract

This study surveys AI technologies ranging from large language models to cutting-edge quantum artificial intelligence (AI), highlighting the transformative potential of AI in the healthcare industry. Despite the technology's promising benefits, it raises socio-ethical issues such as data privacy concerns in healthcare and the possibility of AI-driven protein engineering being used for military purposes. This study highlights the need for strict regulation, ethical consideration and global collaboration to ensure responsible AI development. Most notably, it examines the European Union's Artificial Intelligence Act as an illustrative case of a regional approach to balancing innovation with societal well-being. As AI continues to spread across the global healthcare industry, this article aims to promote a relationship in which moral principles and social values guide AI advances.

Keywords: Artificial Intelligence; Bioethics; Healthcare; Machine Learning; Medical Diagnostics; Ethics

Abbreviations: AI: Artificial Intelligence; LLM: Large Language Model; GPT-4: Generative Pre-trained Transformer 4; QAI: Quantum AI; GAI: General AI; EHR: Electronic Health Record; ML: Machine Learning; BLM: Bipartite Local Models; BTWC: Biological and Toxin Weapons Convention; CWC: Chemical Weapons Convention.

Introduction

The rapid advancement of computer science and ultra-fast computing speeds are ushering in a new era for Artificial Intelligence (AI). AI is already demonstrably benefiting society in numerous ways, from weather forecasting and facial recognition to fraud detection and deciphering complex genomes [1]. However, the future role of AI in medical practice remains a topic of ongoing exploration and discussion. This surge in AI development presents both exciting possibilities and important considerations for the healthcare industry. When applying AI approaches to healthcare data, it is important to carefully consider the interaction of factors such as data governance, cleaning, management, analysis and organizational rules [2]. This paper examines the types of AI transforming healthcare delivery, from production to service, focusing on the societal and ethical considerations that arise when they are applied in everyday life. Active policies and governing organizations could help steer this transformation while harnessing the power of AI, particularly machine learning (ML). This would free healthcare practitioners to focus on the root causes of illness while AI monitors the success of preventive measures, diagnoses and medication, ultimately improving patient outcomes [3].

Growth of AI in Healthcare

AI has spread like wildfire, consuming the traditional boundaries of work. Today, big data and machine learning have become indispensable in many aspects of life, including entertainment, business and, most importantly, medicine. Big data and machine learning are the driving forces behind the growth of generative AI [4]. The generative AI market, worth USD 43.87 billion in 2023, is led by technology giants such as Google, IBM and Adobe, and forecasts anticipate its growth from a billion-dollar market toward a trillion-dollar one [5]. AI encompasses a wide spectrum of possibilities, and its recent advance into healthcare promises a much-needed revolution that could redefine what the industry can do. In recent years, the healthcare industry has begun adopting generative AI in the form of large language models (LLMs) such as GPT-4 and Bard. Their applications are manifold and include simplifying insurance pre-authorization, clinical documentation, drug design, protein engineering and more. Most commonly, they function as chatbots that address patients' concerns about their personal health records. However, despite their great transformational potential, LLMs should be handled with caution: regulatory approaches designed for earlier AI-based medical technologies do not transfer directly to LLMs, particularly where critical patient care is concerned [6]. This leaves room for implementing generative AI in medical technology in formats beyond common, general-purpose LLMs.

Variety of Artificial Intelligence Technologies: Quantum AI and General AI

Cutting edge AI technologies, including Quantum AI (QAI)

are being introduced to expedite training and provide quick

diagnostic models. Quantum computers-based AIs possess

remarkable processing power allowing for real time analysis

of vast amounts of medical data to ensure accurate diagnoses.

Quantum optimization algorithms are used to optimize decision

making in medical diagnostics by considering medical history

and other pertinent factors. Another concept known as General

AI (GAI) is being employed by various projects and companies

such as OpenAI’s DeepQA, IBMs Watson and Google’s DeepMind.

GAI seeks to improve diagnosis precision, time management and

provision of supportive data to doctors. GAI can be the next stage

in revolutionizing medicine through detailed analysis of medical

records, hence leading to better patient outcomes and efficiency

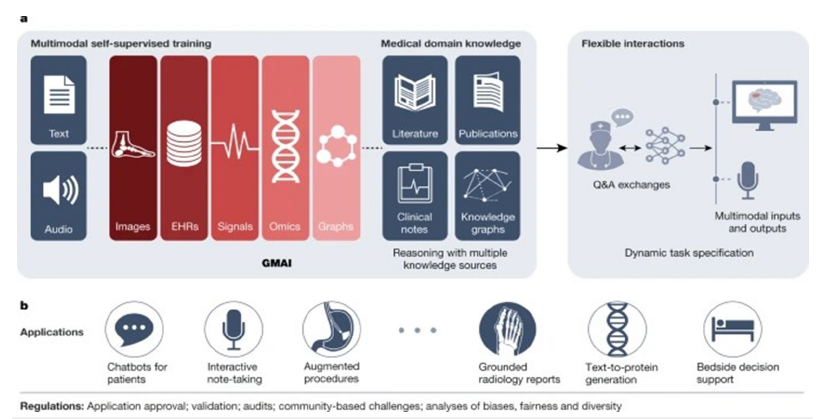

[7] (Figure 1). The following image above showcases a rudimentary

outline of how the system works. The image can be broken down

into two sections a. and b.

A. Section a: A GAI model is trained on diverse medical data modalities using techniques such as self-supervised learning. It supports flexible interactions by pairing modalities such as images or EHR (electronic health record) data with language (text or speech). To perform medical reasoning tasks, the GAI model draws on various medical knowledge sources, enhancing its capabilities for downstream applications. Users can specify tasks in real time, and the model retrieves contextual information from sources such as knowledge graphs or databases, using formal medical knowledge to reason about novel tasks.

B. Section b: The GAI model serves as a foundation for diverse applications across clinical disciplines. Each application requires careful validation and regulatory assessment to ensure its reliability and efficacy.

Figure 1: GAI in healthcare [7].

Social Risk Evaluation in Healthcare

The development and implementation of such AI systems in medical diagnostics face technical, regulatory and ethical challenges. A primary challenge is the availability of high-quality labeled data, since medical data can be fragmented, incomplete, unlabeled or entirely inaccessible. If AI algorithms are trained on non-representative data, there is a risk of bias that leads to incorrect diagnoses. Using GAI also raises ethical concerns, because private and sensitive datasets must be fed into the model to obtain results; these concerns include data privacy, algorithmic transparency and accountability. Solutions such as federated learning, in which models are trained where the data reside and only model updates are shared, have been proposed as possible remedies, but further investigation is needed to establish their effectiveness in medical research. Lastly, interoperability standards and protocols are crucial for effective collaboration between the different entities that develop AI-based medical diagnostic tools [8].
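To make the federated learning idea above concrete, here is a minimal Python sketch of federated averaging (FedAvg), one widely used federated learning algorithm, applied to a toy one-feature linear model. The two "hospital" datasets, the function names `local_update` and `fed_avg`, and the learning rate, epoch and round counts are hypothetical illustrations, not taken from the cited work. Real deployments add further safeguards such as secure aggregation, since shared model updates can still leak information.

```python
from typing import List, Tuple

# One site's private records: (feature, label) pairs that never leave the site.
Dataset = List[Tuple[float, float]]


def local_update(w: float, b: float, data: Dataset,
                 lr: float = 0.1, epochs: int = 50) -> Tuple[float, float]:
    """Gradient descent on one site's data for the model y = w*x + b
    (mean-squared-error loss), starting from the current global parameters."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(sites: List[Dataset], rounds: int = 20) -> Tuple[float, float]:
    """Federated averaging: each round, every site trains locally and only the
    resulting parameters are pooled, weighted by the site's record count."""
    w, b = 0.0, 0.0
    total = sum(len(site) for site in sites)
    for _ in range(rounds):
        updates = [local_update(w, b, site) for site in sites]
        w = sum(len(site) * uw for site, (uw, _) in zip(sites, updates)) / total
        b = sum(len(site) * ub for site, (_, ub) in zip(sites, updates)) / total
    return w, b


# Hypothetical toy data from two hospitals, both generated by y = 2x + 1.
hospital_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
hospital_b = [(1.5, 4.0), (2.0, 5.0)]
w, b = fed_avg([hospital_a, hospital_b])
print(f"w = {w:.3f}, b = {b:.3f}")  # should approach w = 2, b = 1
```

The design choice worth noting is that the coordinator only ever sees `(w, b)` pairs, never the raw records; weighting by record count keeps larger sites from being underrepresented in the shared model.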

Ethical Considerations

In the more technical areas of the domain, such as drug discovery, Machine Learning (ML) is a formidable instrument for delineating the intricate interplay between physicochemical parameters and protein-ligand interactions, leveraging insights from established complex structures. Examples include kernel regression techniques, bipartite local models (BLM) [9] and predictive approaches using random forests [10] or restricted Boltzmann machines [11]. Such algorithms increasingly underpin drug-target interaction prediction and inform de novo protein design and amino acid sequence prediction, drawing on chemical structure information, protein sequence data and drug-target interaction networks. However, accurate predictions require these models to incorporate a wide range of physical and chemical properties and to leverage the latest advances in machine learning algorithms. Meticulous attention to dataset completeness is imperative, as imbalances can produce suboptimal, inaccurate or biased predictions. A continuous iterative loop of checking predictions against experimental data is essential for improving and expanding these models.
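The bipartite local model (BLM) idea cited above [9] can be sketched briefly: to score a candidate drug-target pair, one local model predicts from the drug's side (which targets does this drug hit, and how similar are they to the candidate target?) and another predicts from the target's side, and the two predictions are combined. The Python sketch below is a simplification under stated assumptions: Bleakley and Yamanishi train support vector machines for the local models, whereas here a kernel-regression (similarity-weighted average) estimate stands in for them; averaging the two sides is an assumed combination rule; and the function names and toy matrices are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def kernel_regression(labels: List[float], similarities: List[float], exclude: int) -> float:
    """Nadaraya-Watson estimate: similarity-weighted mean of the known labels,
    skipping the query position so the pair being scored is never used."""
    num = 0.0
    den = 0.0
    for j, (y, s) in enumerate(zip(labels, similarities)):
        if j == exclude:
            continue
        num += s * y
        den += s
    return num / den if den > 0 else 0.0


def blm_score(interactions: Matrix, drug_sim: Matrix, target_sim: Matrix,
              d: int, t: int) -> float:
    """Score drug d against target t with two local models (simplified BLM)."""
    # Drug-side local model: drug d's known interactions across targets,
    # weighted by how similar each target is to the query target t.
    drug_side = kernel_regression(interactions[d], target_sim[t], exclude=t)
    # Target-side local model: target t's known interactions across drugs,
    # weighted by how similar each drug is to the query drug d.
    target_column = [row[t] for row in interactions]
    target_side = kernel_regression(target_column, drug_sim[d], exclude=d)
    # Combine the two local predictions (simple mean; an assumption here).
    return (drug_side + target_side) / 2


# Hypothetical toy data: rows are drugs, columns are targets (1 = known interaction).
interactions = [
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 1],
]
drug_sim = [
    [1.0, 0.8, 0.1],
    [0.8, 1.0, 0.1],
    [0.1, 0.1, 1.0],
]
target_sim = [
    [1.0, 0.9, 0.1],
    [0.9, 1.0, 0.1],
    [0.1, 0.1, 1.0],
]
print(round(blm_score(interactions, drug_sim, target_sim, 1, 1), 3))  # 0.894
print(round(blm_score(interactions, drug_sim, target_sim, 2, 1), 3))  # 0.3
```

Drug 1 resembles drug 0, which hits target 1, and target 1 resembles target 0, which drug 1 hits, so the pair (1, 1) scores high; drug 2 is dissimilar on both sides and scores lower. The toy example also shows why dataset completeness matters: every score is built entirely from the known entries of the interaction matrix.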

Another area of concern within protein engineering is the development of hybrid or fusion toxins for advanced therapeutic purposes. Protein engineering techniques can markedly enhance the efficacy of fusion toxins in eradicating target cells; Kiyokawa et al. [12], for example, reported a 17-fold increase in cytotoxicity. While most protein toxin research is conducted for peaceful purposes and shared through scientific publications, there is a potential risk of misuse for the development of biological weapons.

Several documented cases highlight the acquisition of protein toxins for hostile purposes. Over a decade ago, the World Armaments and Disarmament Yearbook emphasized military interest in the facile production of novel engineered bacterial toxins and similar substances through protein engineering, a concern that accessible tools such as generative AI may now amplify. Researchers working with protein toxins must remain aware of their legal and ethical responsibilities under the Biological and Toxin Weapons Convention (BTWC) and the Chemical Weapons Convention (CWC). It is vital for them to educate themselves and the next generation of researchers about these obligations, and to promptly report any suspicious activity that could enhance a toxin's lethality or military applicability through accessible and replicable technologies such as AI [13].

Regulations and the Way Forward

Countries actively involved in protein toxin engineering should establish national biosecurity boards to oversee proposed experiments and manage AI databases. A precedent for an expert-led body addressing a technological threat is International Physicians for the Prevention of Nuclear War, founded in 1980, which won the 1985 Nobel Peace Prize for its principled, authoritative and evidence-based arguments about the threats of nuclear war. Similarly, if leading technology companies assembled a comparable body for AI, consumers would be more likely to support and trust the integration of the technology, and such a body could help keep corrupt ventures in check. If artificial intelligence is ever to live up to its potential to benefit people and society, we will need to protect democracy, reinforce our public institutions and distribute power so that regulatory bodies provide effective checks and balances. This involves ensuring transparency and accountability both in the parts of the military-corporate complex pushing AI breakthroughs and in the social media businesses that allow AI-driven, targeted misinformation to threaten our democratic institutions and privacy rights [14]. Additionally, as with earlier technologies, averting or minimizing the hazards presented by AI requires avoiding a mutually destructive AI "arms race" and reaching a worldwide agreement. It will also require decision-making that is shielded from the influence of corrupt parties with a stake in the outcome and from clear conflicts of interest.

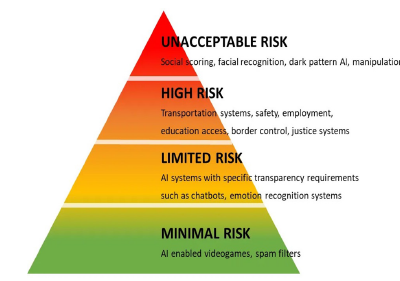

Present-Day Solution: AI Act

Currently, there is no definitive policy governing the transparent and regulated integration of AI into the healthcare industry. However, general policies are being established to flag various start-ups and technologies. At a regional level, the European Union's Artificial Intelligence Act [15] is a legal framework governing the use and sale of products and services that integrate AI, classifying systems by risk level: unacceptable, high, limited and minimal risk. The Act is a clear sign of effort towards a shared understanding of the threats of AI, potentially preventing unforeseeable catastrophes (Figure 2). The figure shows a simplified division of various AI systems according to their applications. Most LLMs used in medical AI would fall into the limited-risk zone, whereas more analytical forms of AI that involve machine learning and pattern discovery, such as those used in protein engineering, could be classed as high or even unacceptable risk, which could ultimately constrain the growth of the industry [16]. As of 2023, the Act was still being finalized; once fully implemented, it will become an essential consideration in AI development, especially in healthcare. The Act also provides for non-compliance fines of up to €30 million or 6% of annual worldwide turnover, whichever is higher. Further, providing false or fabricated information about an AI system would lead to a fine of up to €2 million or 2% of annual worldwide turnover, whichever is higher.

In the broader view, AI, including generative technology and machine learning, is intrinsic to development in healthcare, ultimately giving humanity the ability to control the mechanisms and rate of evolution. Accepting this truth also means accepting the ethical, societal and technical challenges it brings. Hence, to harness the full capabilities of the technology, we must unite to control "the flames of this AI wildfire".

Figure 2: Breakdown of the Act [16].
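The "whichever is higher" fine rule described above is simple arithmetic, and a short Python sketch makes it concrete. The function name `max_fine_eur` is hypothetical; it computes only the statutory ceiling, not the fine an authority would actually impose in a given case. The defaults are the €30 million / 6% figures quoted in the text, and both are parameters because the amounts differed between drafts of the Act.

```python
def max_fine_eur(annual_turnover_eur: float,
                 fixed_cap_eur: float = 30_000_000.0,
                 turnover_share: float = 0.06) -> float:
    """Ceiling of a fine under a 'fixed amount or share of worldwide annual
    turnover, whichever is higher' rule (defaults: EUR 30 million / 6%)."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # 'Whichever is higher': the turnover-based amount only matters once it
    # exceeds the fixed amount.
    return max(fixed_cap_eur, turnover_share * annual_turnover_eur)


# EUR 100 million turnover: 6% is EUR 6 million, so the fixed amount applies.
print(max_fine_eur(100_000_000))    # 30000000.0
# EUR 1 billion turnover: 6% is EUR 60 million, which exceeds the fixed amount.
print(max_fine_eur(1_000_000_000))  # 60000000.0
```

The same function covers the lower tier for false or fabricated information by passing that tier's fixed amount and a `turnover_share` of 0.02.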

Conclusion

In conclusion, the integration of Artificial Intelligence (AI) within healthcare offers vast potential but also raises significant socio-ethical considerations. While advancements like quantum AI and general AI promise revolutionary diagnostic capabilities, concerns regarding data privacy and the potential for misuse necessitate stringent regulatory oversight. The European Union’s Artificial Intelligence Act exemplifies efforts to strike a balance between fostering innovation and safeguarding societal interests. Moving forward, a collaborative approach, bolstered by robust ethical guidelines, is essential to ensure that AI-driven healthcare innovations benefit humanity responsibly and ethically. Looking ahead, it is imperative for policymakers, regulatory bodies and stakeholders to collaborate in establishing comprehensive frameworks that prioritize ethical principles and social values in AI development. This involves fostering transparency, accountability and democratic oversight to ensure that AI technologies serve the collective interests of humanity while mitigating potential risks. Ultimately, by navigating these socio-ethical dynamics effectively, we can harness the full potential of AI to revolutionize healthcare while upholding moral and ethical standards.

References

- Pretty J, Tritton C, Gavin M (2019) AI for good? A systematic review of the ethical implications of artificial intelligence in healthcare. Health Policy 23(4): 405-416.

- Vayena E, Blasco J, Lopez ML (2022) A roadmap for integrating bioethics into the design, development and deployment of artificial intelligence in healthcare. NPJ Digital Medicine 5(1): 1-9.

- Gundersen L, Aasebø E, Ødegård Ø (2023) Artificial intelligence in healthcare: A systematic review of patient perspectives. International Journal of Medical Informatics 170: 104436.

- Lahoti R (2023) Charting new horizons: The expanding role of AI in healthcare and protein engineering. Cohesive J Microbiol Infect Dis 7(1): 1-5.

- Fortune Business Insights (2023) Generative AI market size, share & COVID-19 impact analysis, by model (generative adversarial networks or GANs and transformer-based models), by industry vs application and regional forecast, 2024-2032.

- Meskó B, Topol EJ (2023) The imperative for regulatory oversight of large language models (or generative AI) in healthcare. NPJ Digit Med 6(1): 120.

- Moor M, Banerjee O, Abad ZS, Krumholz HM, Leskovec J, et al. (2023) Foundation models for generalist medical artificial intelligence. Nature 616(7956): 259-265.

- Al-Antari MA (2023) Artificial intelligence for medical diagnostics-existing and future AI technology! Diagnostics 13(4): 688.

- Bleakley K, Yamanishi Y (2009) Supervised prediction of drug-target interactions using bipartite local models. Bioinformatics 25(18): 2397-2403.

- Mihai DP, Trif C, Stancov G, Radulescu D, Nitulescu GM (2020) Artificial intelligence algorithms for discovering new active compounds targeting TRPA1 pain receptors. AI 1(2): 276-285.

- Wang Y, Zeng J (2013) Predicting drug-target interactions using restricted Boltzmann machines. Bioinformatics 29(13): i126-i134.

- Kiyokawa T, Williams DP, Snider CE, Strom TB, Murphy JR (1991) Protein engineering of diphtheria-toxin-related interleukin-2 fusion toxins to increase cytotoxic potency for high-affinity IL-2-receptor-bearing target cells. Protein Eng 4(4): 463-468.

- Tucker JB, Hooper C (2006) Protein engineering: Security implications. The increasing ability to manipulate protein toxins for hostile purposes has prompted calls for regulation. EMBO Rep 7(Spec No): S14-S17.

- Federspiel F, Mitchell R, Asokan A, Umana C, McCoy D (2023) Threats by artificial intelligence to human health and human existence. BMJ Glob Health 8(5): e010435.

- European Union (2021) A European approach to artificial intelligence.

- Witzel L (2022) 5 things you must know now about the coming EU AI regulation.

© 2024 Ronith Lahoti. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution and building upon the work non-commercially.

This work is licensed under a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
