Research in Medical & Engineering Sciences

An Optimized Algorithm Regarding Extraction of Specification of Brain Electrical Signal in BCI in Order to Diagnosis Epidemic

Maryam Sajedi*

Islamic Azad University Damavand, Iran

*Corresponding author: Maryam Sajedi, Islamic Azad University Damavand, Iran

Submission: January 20, 2018; Published: February 06, 2018

ISSN: 2576-8816; Volume 3, Issue 3

Abstract

Electroencephalography (EEG) is a technique for recording the electrical activity of the human brain. The most common approach attaches electrodes to the scalp, which makes the recording non-invasive. The P300 is one of the most widely studied event-related potentials (ERPs) in brain-computer interfaces (BCIs). Raw EEG data are noisy, so the P300 wave has to be detected as accurately as possible by combining appropriate feature extraction with a powerful classifier. The P300 speller is an important BCI application that allows characters to be selected on a virtual keyboard by analyzing recorded EEG activity. In recent years, a great deal of research has addressed the use of brain activity to interact with the external environment through BCIs. This paper proposes a method that reduces feature dimension using Kernel Principal Component Analysis (KPCA), which has a considerable advantage over other methods such as Principal Component Analysis (PCA): it can reduce feature dimension in non-linear systems, which increases precision and improves the signal-to-noise ratio (SNR). The technique makes use of wavelet coefficients when reducing feature dimension, and a support vector machine (SVM) is used as the classifier. The method detects P300 features with a high level of accuracy: 98.51% for subject A and 95.12% for subject B. Our analysis indicates that the proposed approach achieves better detection accuracy than traditional methods, including canonical correlation analysis and its variants.

Index Terms: Feature extraction algorithms, Brain-computer interface (BCI), Brain electrical signals, EEG, KPCA.

Introduction

Figure 1: Functional model of a BCI system.

Figure 1 shows the functional model of a BCI system [1]. The figure depicts a generic BCI system in which a person controls a device in an operating environment (e.g., a powered wheelchair in a house) through a series of functional components. In this context, the user's brain activity is used to generate the control signals that operate the BCI system. The user monitors the state of the device to determine the result of his/her control efforts. In some systems, the user may also be presented with a control display, which displays the control signals generated by the BCI system from his/her brain activity [1,2].

The electrodes placed on the head of the user record the brain signal from the scalp, or the surface of the brain, or from the neural activity within the brain, and convert this brain activity to electrical signals. The 'artefact processor' block shown in Figure 1 removes the artefacts from the electrical signal after it has been amplified. Note that many transducer designs do not include artefact processing. The 'feature generator' block transforms the resultant signals into feature values that correspond to the underlying neurological mechanism employed by the user for control. For example, if the user is to control the power of his/her mu (8-12 Hz) and beta (13-30 Hz) rhythms, the feature generator would continually generate features relating to the power spectral estimates of the user's mu and beta rhythms [3-8].
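As an illustration of the mu and beta example above, the following minimal sketch estimates band power from a simple periodogram. It is not taken from the paper: the function name `band_power`, the 240 Hz sampling rate, the 2-second window, and the synthetic test signal are all assumptions made for the example.

```python
import numpy as np

def band_power(signal, fs, low, high):
    """Sum of periodogram values between low and high (Hz), inclusive."""
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    mask = (freqs >= low) & (freqs <= high)
    return psd[mask].sum()

fs = 240                       # assumed sampling rate in Hz
t = np.arange(2 * fs) / fs     # assumed 2-second analysis window
# Synthetic signal: strong 10 Hz (mu) component, weak 20 Hz (beta) component
x = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 20 * t)

mu_power = band_power(x, fs, 8, 12)
beta_power = band_power(x, fs, 13, 30)
features = np.array([mu_power, beta_power])  # one feature vector per window
```

In a running system the same computation would be repeated on each new window of each channel, so that the feature vector tracks the user's rhythms over time.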

The feature generator generally can be a concatenation of three components-the 'signal enhancement', the 'feature extraction' and the 'feature selection/dimensionality reduction' components, as shown in Figure 1.

In some BCI designs, pre-processing is performed on the brain signal prior to the extraction of features so as to increase the signal-to-noise ratio of the signal. In this paper, we use the term 'signal enhancement' to refer to the pre-processing stage.

A feature selection/dimensionality reduction component is sometimes added to the BCI system after the feature extraction stage. The aim of this component is to reduce the number of features and/or channels used so that very high dimensional and noisy data are excluded. Ideally, the features that are meaningful or useful in the classification stage are identified and chosen, while others (including outliers and artefacts) are omitted [3].

In recent years, many studies have addressed brain-computer interfaces (BCIs) and control interfaces. A BCI transfers commands from the human brain to a computer, and one of its most promising outcomes is allowing disabled people to interact with their environment as healthy people do [1].

To achieve this goal, special instruments are needed to record brain signals. The setup that makes this possible includes both hardware and software tools. An important advantage of this technique is that it requires no surgery to implant electrodes in the brain: the setup does its job outside the body, around the head.

To be executed effectively, the commands issued by the brain must be classified. The steady-state visual evoked potential (SSVEP) is a brain signal modality based on the brain's reaction to images, lights, and flashing targets, from which the user attempts to select one target. The signal elicited in the brain has the same frequency as the flashing target and has been widely used in BCI applications.

The P300 signal, however, has proven to be one of the most popular in the design of many BCI devices, such as lie detectors, fingerprinting, smart homes, and so on. The P300 is a positive deflection that appears in the time-domain brain signal about 300 ms after a stimulus. In the current work, we use an enhanced form of Principal Component Analysis, called Kernel Principal Component Analysis (KPCA), to analyze the spectral features of EEG signals for high-accuracy P300 recognition. The proposed approach takes advantage of the spectral characteristics of each EEG channel and the overall characteristics of all channels combined during classification, which makes the method adaptable to per-subject variability. What is novel about the method proposed in this paper is that it computes significant features of each channel and then aggregates these features using a KPCA-based approach. It also adapts the combination of KPCA and Linear Discriminant Analysis (LDA) of the spectral features of EEG signals into the SSVEP detection domain [9-13].

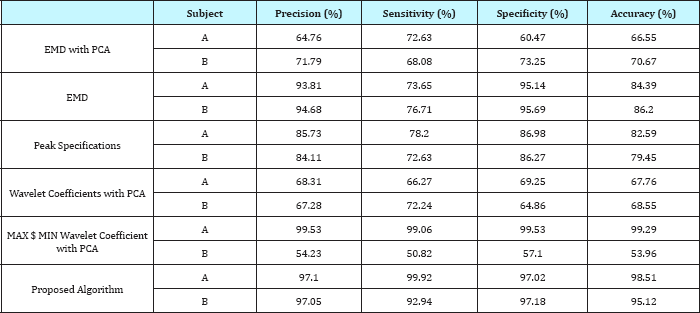

Table 1: Performance comparison of different feature extraction techniques.

Real-time processing requires features with minimal dimension in order to increase system speed and performance. Studies have shown that, when a large number of features is used, reducing the feature dimension with the PCA algorithm increases accuracy. It has also been shown that reducing the dimension of the feature vector has a significant impact on classification accuracy. Because the proposed method uses KPCA, it gives accurate results when the number of features is large, which is why its results are more precise than those of PCA. Table 1 gives the results for comparison.

Principal Component Analysis (PCA) is a well-known statistical method for extracting features and reducing dimensions. PCA compresses the data by reducing the feature dimension while retaining the information [14,15].

In this paper, the kernel PCA method is used to reduce the feature dimension, which yields an algorithm that is optimized relative to previous work in this field. KPCA is a small modification of PCA that allows dimensionality reduction in non-linear classification problems and, given the complexity of the data, can provide a very high degree of accuracy [16,17].

In this section, we review the relevant research, examine the volume of the samples we deal with, and investigate the different methods for better and more efficient calculation together with the factors that affect them. We also look at some of the input and output metrics and analyze them [18,19].

For example, in X = (x1, x2, ..., xp), x1 is the weight feature, x2 is the density feature, and so on. Problems are not always made up of only 3 or 5 features; in many classification cases the value of p is very large. There are several reasons for not using all of these features for classification, and there are a number of algorithms that reduce the dimension with promising results [20].

The main reasons are reviewed and categorized as follows:

a) Peaking phenomenon: As the number of features increases, the error of the designed classifier initially decreases. Beyond a certain point, however, adding further features may increase the classification error; this is the phenomenon called peaking.

b) This phenomenon is one of the reasons to use an optimal number of features. Even assuming that the phenomenon did not exist and the error decreased steadily with the number of features, using, for instance, 40,000 features would still not be natural or appropriate.

c) Computational complexity and storage volume: A high volume of data clearly has a very high computational complexity, and it is also difficult to store [20-24].

d) Finding the features with the most impact on separation: Sometimes identifying which of the primary attributes has the greatest effect in separating two or more output classes is more important than the output itself.

e) Many such problems arise in medicine and, more specifically, in the present research. For example, given a set of genes g1 to g1000, the goal is to know which of these 1000 genes have the greatest impact on classifying illness versus health.

f) For example, if two or three genes can be found that separate the disease and health classes, this will have a huge impact on diagnosing the disease on the basis of genetic symptoms.

g) All of these points highlight the importance of reducing the feature dimension. Feature selection and feature extraction, as subcategories of it, are important issues in this area: the goal is to obtain a set of features, for example d features selected from p primary features, where d < p. When this succeeds we hear, for example, in the news that the gene for a disease has been discovered.

h) In this study, our aim is to optimize the algorithm that gives the best detection of brain P300 signals. We therefore analyze this approach in our research, examine its computational and optimization aspects, and investigate how the BCI system can be made more accurate and faster.

Among the EEG signals used in the design of many BCI systems, such as sensorimotor rhythms (SMRs), slow cortical potentials (SCPs), ERPs, and steady-state visual evoked potentials (SSVEPs), the P300 signal has proven to be one of the most popular in the design of many BCI devices, such as lie detectors, fingerprinting, smart homes, and so on.

EEG uses electrodes to record brain signals. Placing the electrodes on the scalp is a non-invasive recording method. Invasive methods such as epidural, subdural, and intracortical recording are more precise, but they require surgery and are much more difficult to implement. The problem with the non-invasive method is that the amplitude of the recorded signal lies between 5 and 20 microvolts.

Given these signal characteristics, we focus on detecting the P300 signal. The wavelet transform is one of the popular methods for extracting P300 features because of its multi-resolution property. In this paper, we use the discrete wavelet transform (DWT) (Figure 2).

This method re-expresses the original signal in order to obtain more relevant information. The transform is used because it creates a new representation of the signal that exposes information not readily available, from which the appropriate features are extracted [17].

Figure 2: DWT Decomposition.

Real-time processing requires features with the lowest possible dimension in order to increase system speed and performance. Principal Component Analysis (PCA) is a well-known statistical method for extracting attributes and reducing dimensions. PCA compresses the data by reducing the feature dimension while retaining the information.

In this paper, the kernel PCA method is used to reduce the feature dimension, which yields an algorithm that is optimized relative to previous research. KPCA modifies the PCA method so that the dimensionality can be reduced in non-linear classification and, given the complexity of the data, a very high degree of accuracy can be obtained.

The KPCA formulation consists of the following steps:

1. Form the kernel matrix

2. Eigenvalue decomposition (EVD)

3. Normalization

4. Extraction
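The four steps above can be sketched in NumPy as follows. This is a minimal illustration rather than the implementation used in the paper: the RBF kernel, the `gamma` value, the function names, and the random input data are assumptions, and the kernel-centering step is a standard part of KPCA that the step list does not mention explicitly.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Gaussian (RBF) kernel between the rows of A and the rows of B."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kpca_fit_transform(X, n_components, gamma=1.0):
    n = X.shape[0]
    # Step 1: form the kernel matrix (RBF kernel is an assumption)
    K = rbf_kernel(X, X, gamma)
    # Center the kernel matrix in feature space (standard KPCA step)
    one_n = np.full((n, n), 1.0 / n)
    Kc = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # Step 2: eigenvalue decomposition, keeping the largest eigenvalues
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Step 3: normalization so each feature-space axis has unit length
    # (assumes the selected eigenvalues are strictly positive)
    alphas = eigvecs / np.sqrt(eigvals)
    # Step 4: extraction, i.e. projection of the training samples
    return Kc @ alphas

# Hypothetical input: 20 feature vectors of dimension 6
X = np.random.default_rng(0).normal(size=(20, 6))
Z = kpca_fit_transform(X, n_components=2, gamma=0.1)
```

With a linear kernel in place of `rbf_kernel`, the same procedure reduces to ordinary PCA, which is why KPCA is often described as a small modification of PCA.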

SVM is a powerful machine learning algorithm for binary classification. It is a supervised learning model that analyzes data and recognizes patterns, and it is used for classification and regression. SVM performs well on both linear and non-linear problems; non-linear classification is implemented through kernel methods. SVM separates the data set using optimal lines or hyperplanes. Its main idea is to maximize the margin between the two classes [18,19].
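The margin idea can be illustrated with a minimal linear soft-margin SVM trained by batch sub-gradient descent on the hinge loss. This is a simplified sketch only: the paper uses a non-linear (kernel) SVM, and the function name `train_linear_svm`, the toy data, and the hyperparameters `C`, `lr`, and `epochs` are assumptions made for the example.

```python
import numpy as np

def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Minimize 0.5*||w||^2 + C * sum(max(0, 1 - y*(X@w + b)))."""
    n_features = X.shape[1]
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        viol = margins < 1                      # samples inside the margin
        grad_w = w - C * (y[viol, None] * X[viol]).sum(axis=0)
        grad_b = -C * y[viol].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy two-class data (hypothetical), labels in {-1, +1}
X = np.array([[2.0, 2.0], [3.0, 3.0], [2.0, 3.0],
              [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])

w, b = train_linear_svm(X, y)
predictions = np.sign(X @ w + b)
```

The regularization term keeps the weight vector small, which widens the margin, while the hinge term penalizes samples that fall inside it; a kernel SVM applies the same trade-off in a transformed feature space.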

Total data: Initially, we use 6 electrodes to receive EEG signals, in accordance with the standard 10-20 system that has 64 channels (Figures 3 and 4). The data are sampled at 240 Hz from 6 channels (PZ, FZ, OZ, POZ, P1, P2). The data set consists of the data obtained from two subjects, A and B [11].

The rows and columns of the matrix were intensified in random order, each for a fixed duration of 100 milliseconds. After each intensification, the matrix was blank for 75 milliseconds. This sequence was repeated 15 times, so the 12 rows and columns were each intensified 15 times, giving 15 * 12 = 180 intensifications for each symbol. The implementation steps of the proposed algorithm are described below.

Figure 3: Optimized EEG channels as per 10-20 system.

The data used in this article are taken from the P300 speller data of BCI Competition III, data set II, as described in [18]. In this experiment, subjects were presented with a 6 x 6 matrix that includes all the letters and symbols shown in Figure 4.

Figure 4: Symbol Matrix.
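The stimulation arithmetic for one symbol can be checked with a few lines of Python. The sketch assumes a 6 x 6 matrix, 15 repetitions, a 100 ms intensification, and a 75 ms blank interval (the value used in the BCI Competition III P300 speller recordings); the variable names are illustrative only.

```python
ROWS, COLS = 6, 6        # symbol matrix size
REPETITIONS = 15         # each row and column is intensified 15 times
FLASH_MS = 100           # intensification duration
BLANK_MS = 75            # assumed blank interval after each intensification

flashes_per_repetition = ROWS + COLS                         # 12 rows and columns
flashes_per_symbol = flashes_per_repetition * REPETITIONS    # 15 * 12

# In every repetition exactly one row and one column contain the target symbol
target_flashes = 2 * REPETITIONS
non_target_flashes = flashes_per_symbol - target_flashes

seconds_per_symbol = flashes_per_symbol * (FLASH_MS + BLANK_MS) / 1000

print(flashes_per_symbol, target_flashes, non_target_flashes, seconds_per_symbol)
```

Only one in six intensifications contains the target, so the P300 and non-P300 classes are unbalanced, which is worth keeping in mind when training and evaluating the classifier.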

Proposed Algorithm as Follows

Preprocessing

In this stage, a wavelet-based method is used to preprocess the EEG signals. The wavelet-based approach has the advantage that it is well suited to non-stationary signals. This process reduces the effects of artefacts; otherwise, small spurious details remain in the signal.

Feature extraction

Each epoch of data is decomposed with the wavelet transform to obtain wavelet coefficients. Instead of using the wavelet coefficients directly as features, the coefficients obtained from each epoch (160 samples) are divided into five equal sets, and the minimum and maximum values of each set are extracted. These minimum and maximum values (2 per set) form the first feature vector; collected over all sets and channels of an epoch, they form the second feature vector (2 * 5 * 6 values), obtained from 160 * 6 samples.
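One possible reading of this feature extraction step is sketched below. Several details are assumptions, because the text does not specify them: a single-level Haar wavelet, the use of the approximation coefficients only, the function names, and random data standing in for a real epoch. Only the epoch length (160 samples), the sampling rate (240 Hz), the six channels, and the five sets come from the text.

```python
import numpy as np

def haar_dwt(x):
    """Single-level Haar DWT of an even-length signal."""
    pairs = np.asarray(x, dtype=float).reshape(-1, 2)
    approx = (pairs[:, 0] + pairs[:, 1]) / np.sqrt(2)
    detail = (pairs[:, 0] - pairs[:, 1]) / np.sqrt(2)
    return approx, detail

def minmax_features(epoch, n_sets=5):
    """Min and max of each of n_sets equal groups of coefficients, per channel."""
    feats = []
    for channel in epoch:                      # epoch: (n_channels, n_samples)
        approx, _ = haar_dwt(channel)
        for coeff_set in np.array_split(approx, n_sets):
            feats.extend([coeff_set.min(), coeff_set.max()])
    return np.array(feats)

fs = 240                                       # sampling rate in Hz
n_samples = 160                                # samples per epoch
epoch_ms = n_samples / fs * 1000               # epoch duration in milliseconds

# Placeholder data for one 6-channel epoch (not real EEG)
epoch = np.random.default_rng(0).normal(size=(6, n_samples))
features = minmax_features(epoch)              # 2 * 5 * 6 values
```

Under these assumptions each epoch of 160 * 6 samples is reduced to 60 values before KPCA is applied.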

Dimension reduction

In this paper, the KPCA method is used, which is more accurate than the existing PCA method. With this method we achieved more effective results than the existing methods.

Classification

As discussed earlier in this paper, an SVM is used for classification and was chosen as a suitable classifier for this research. The distinguishing feature of the SVM is that, in contrast to neural networks such as the MLP and RBF networks, it does not minimize the modeling or classification error directly; instead, it takes the operational risk as its objective function and computes its optimal value. For example, when the data are to be divided into two groups, the SVM expresses the risk of misclassification numerically and finds its minimum [14-16].

Result

Figure 5: P300.

Signal processing and calculations were done in MATLAB. The time-domain waveforms of the P300 (Figure 5) and non-P300 (Figure 6) signals are shown in two plots. The vertical axis represents the amplitude of the signal and the horizontal axis the time in milliseconds. On the left, the plot shows the signal extracted from 0 to 666.67 ms after the stimulus. The P300 peak is clearly recognizable in comparison with its non-P300 counterpart on the right. The peak of the P300 is observed 555.2 milliseconds after the stimulus.

Figure 6: Non-P300.

Variation of P300 detection accuracy with variation of feature dimension

Investigations have shown that the PCA algorithm is highly accurate when a large number of features is used. It was also found that reducing the dimension of the feature vector has a significant impact on accuracy. Figure 7 shows how the accuracy changes with the dimension of the reduced feature vector.

Figure 7: Variation of Accuracy with variation of dimension of reduced feature vector.

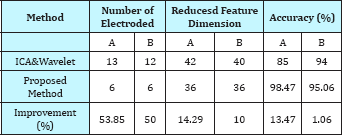

The dimension of the feature vector was varied over a wide range, which showed that the maximum accuracy was achieved by reducing the feature vector for both subjects [17]. Because KPCA is considerably more precise when there are many features, the proposed method obtains results with higher accuracy than the PCA method, as shown in the comparison with the best existing method in Table 2 [2,4-7].

Table 2: Comparison with the best existing method.

Conclusion

A method for identifying P300 signals was presented, using KPCA and wavelet features fed to a non-linear SVM for signal classification. The method can be applied directly to the signals received in the test set. Its computational time is low, which makes it a good choice for real-time applications. In addition, only 6 electrodes are used to receive the input signals, which is less costly than complicated and expensive 64-electrode neuro headsets. Although P300 signals are highly subject-dependent, the results showed that the proposed method can work for a variety of subjects with high accuracy.

Given the limited availability of online data, this article uses two data sets. Future work will address methods that operate on higher-dimensional data. Another possible direction is the implementation of BCI speller hardware that is highly accurate and can improve the data transfer rate.

References

1. Sankar SA, Suparna SN, Venu SD, Praveen S (2014) Wavelet sub band entropy based feature extraction method for BCI. International Conference on Information and Communication Technologies. Procedia Computer Science 46: 1476-1482.

2. Upadhyay R, Manglick A, Reddy DK, Padhy PK, Kankar PK, et al. (2015) Channel optimization and nonlinear feature extraction for Electroencephalogram signals classification. Computers and Electrical Engineering 45: 222-234.

3. Bashashati A, Fatourechi M, Ward RK, Birch GE (2007) A survey of signal processing algorithms in brain-computer interfaces based on electrical brain signals. J Neural Eng 4(2): R32-57.

4. Ahmadi A, Masouleh MS, Janghorbani M, Manjili NYG, Sharaf AM, et al. (2015) Short term multi-objective hydrothermal scheduling. Electric Power Systems Research 121: 357-367.

5. Oraizi H, Behbahani AK, Noghani MT, SharafimasoulehI MET (2013) Optimum design of travelling rectangular waveguide edge slot array with non-uniform spacing. Microwaves, Antennas & Propagation 7(7): 575-581.

6. Azarbar A, Masouleh MS, Behbahani AK (2014) A new terahertz microstrip rectangular patch array antenna. International Journal of Electromagnetics and Applications 4(1): 25-29.

7. Masouleh MS, Salehi F, Raeisi F, Saleh M, Brahman A, et al. (2016) Mixed-integer programming of stochastic hydro self-scheduling problem in joint energy and reserves markets. Electric Power Components and Systems 44(7): 752-762.

8. Masouleh MS, Behbahani AK (2016) Optimum Design of the Array of Circumferential Slots on a Cylindrical Waveguide. AEU-International Journal of Electronics and Communications 70(5): 578-583.

9. Azarbar A, Masouleh MS, Behbahani AK, Oraizi H (2012) Comparison of different designs of cylindrical printed quadrifilar Helix antennas. Computer and Communication Engineering (ICCCE), 2012 International Conference on.

10. Masouleh MS, Behbahani AK, Oraizi H (2016) Erratum to Optimum design of the array of circumferential slots on a cylindrical waveguide. AEUE-International Journal of Electronics and Communications 7(70): 979.

11. Bascil SM, Tesneli AY, Feyzullah T (2015) Multi-channel EEG signal feature extraction and pattern recognition on horizontal mental imagination task of 1-D cursor movement for brain computer interface. Australas Phys Eng Sci Med 38(2): 229-239.

12. Gaoa WB, Guana JA, Gaoa J, Zhou D (2014) Multi-ganglion ANN based feature learning with application to P300-BCI signal classification. Biomedical Signal Processing and Control.

13. Sumit S, Murthy BK (2015) Using Brain Computer Interface for Synthesized Speech Communication for the Physically Disabled. Procedia Computer Science 46: 292-298.

14. Wijesinghe LP, Wickramasuriya DS, Pasqual AA (2014) Generalized Preprocessing and Feature Extraction Platform for Scalp EEG Signals on FPGA. IEEE conference on Biomedical engineering and science.

15. Islam M, Fraz MR, Zahid Z (2011) Optimizing Common Spatial Pattern and Feature Extraction Algorithm for Brain Computer Interface. 7th International Conference on Emerging Technologies (ICET), IEEE - Institute of Electrical and Electronics Engineers, Inc, Pakistan.

16. Porkar P, Fakhimi E, Gheisari M, Haghshenas M (2011) A comparison with some sensor networks storages models. International Conference on Distributed Computing Engineering, Dubai, UAE, pp. 28-30.

17. Gheisari M, Baloochi H, Gharghi M, Hadiyan V, Khajehyousefi M, et al. (2012) An Evaluation of Proposed Systems of Sensor Data's Storage in Total Data Parameter. International Geoinformatics Research and Development Journal, Canada.

18. Aminaka D, Mori K, Matsui T, Makino S, Tomasz M, et al. (2013) Bone-conduction-based Brain Computer Interface Paradigm-EEG Signal Processing, Feature Extraction and Classification. International Conference on Signal-Image Technology & Internet-Based Systems.

19. Ghayoumi M, Porkar P, Korayem MH (2006) Correlation Error Reduction of Image in Stereo Vision with Fuzzy Method and its Application on Cartesian Robot. Advanced In Artificial Intelligence, pp. 1271-1275.

20. Porkar P, Setayeshi S (2005) Recognition of Irregular Patterns Using Statistical Methods Based on Hidden Markov Model. The second international conference on information & technology, pp. 24-26.

21. Porkarrezaeiye P, Jahromi AF, Ghotbiravandi M, Dehaji MN, Shokri M, et al. (2015) A new algorithm for routing in Zigbee networks. International institute of engineers International Conference Data Mining, Civil and Mechanical Engineering (ICDMCME'2015) Feb. 1-2, 2015 Bali (Indonesia), pp. 15-18.

22. Rezaeiye PP, Zarghan MB, Movassagh A, Fazli MS, Bazyari G, et al. (2014) Use HMM and KNN for classifying corneal data. eprint arXiv: 1401.7486.

23. Rezaeiye PP, Rayani M, Ghiasi MI, Amini MR (2013) A New method for classifying corneal data. Indian Journal of science and technology 6(2): 3996-3998.

24. Rezaeiye PP, Fazli M, Sharifzadeh M, Moghaddam H, Gheisari M, et al. (2012) Creating an ontology using protege: concepts and taxonomies in brief. Advance in Mathematical and computational methods in science and engineering (MACMESE'12), Sliema, Malta, pp. 7-9.

© 2018 Maryam Sajedi. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and build upon your work non-commercially.

This work is licensed under a Creative Commons Attribution 4.0 International License. Based on a work at www.crimsonpublishers.com.
